As long as the subject in the foreground is sharp and in focus, most people will never know you’re playing fast and loose with the background blur.
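Why can the background be fudged? Here is a minimal sketch. It assumes a simplified thin-lens model in which synthetic blur grows with the difference in inverse depth between a point and the focal plane. The function name and the `strength_px` constant are hypothetical illustrations, not Apple’s rendering pipeline.

```python
def blur_radius(depth_m: float, focus_m: float, strength_px: float = 20.0) -> float:
    """Approximate synthetic blur radius, in pixels, for a point at depth_m.

    Assumption: a simplified thin-lens model where blur is proportional to the
    difference in inverse depth (diopters) between the point and the focal
    plane. strength_px is a hypothetical tuning constant.
    """
    if depth_m <= 0 or focus_m <= 0:
        raise ValueError("depths must be positive")
    return strength_px * abs(1.0 / focus_m - 1.0 / depth_m)


if __name__ == "__main__":
    # Subject in focus at 1 m; compare blur for nearby and distant points.
    for depth in (1.0, 1.1, 10.0, 20.0):
        print(f"{depth:5.1f} m -> {blur_radius(depth, 1.0):.2f} px")
```

In this model, mistaking a background wall at 10 m for one at 20 m (a 100% error) changes its blur by only 1 px. A 10% error right next to the 1 m subject changes it by about 1.8 px. That asymmetry is one reason a depth effect can be sloppy in the background, but not around the subject.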

So back to the question, “Why does Apple limit this to people?”

Apple tends to under-promise and over-deliver. There’s nothing stopping them from letting you take depth photos of everything, but they’d rather set the expectation, “Portrait Mode is only for people,” than disappoint users with a depth effect that stumbles from time to time.

We’re happy that this depth data — both the human matte and the newfangled machine-learned depth map — was exposed to us developers, though.

So the next question is whether machine learning will ever get to the point where we don’t need multi-camera devices.

The Challenge of ML Depth Estimation

Our brains are amazing. Unlike the new iPad Pro, we don’t have any LIDAR shooting out of our eyes feeding us depth information. Instead, our brains assemble information from a number of sources.

The best source of depth comes from comparing the images coming from each of our two eyes. Our brains “connect the dots” between them. The more an object’s position differs between the two images, the closer that object must be. This binocular approach is similar to what powers depth on dual-camera iPhones.
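The binocular idea boils down to triangulation. This minimal sketch assumes an idealized rectified stereo pair: two pinhole cameras side by side with parallel optical axes. Under that assumption, depth equals focal length times baseline divided by disparity, so a point that shifts more between the two images is closer. The focal length and baseline below are made-up numbers, not the specs of any iPhone.

```python
def depth_from_disparity(disparity_px: float, focal_px: float, baseline_m: float) -> float:
    """Depth (meters) of a point seen by an idealized rectified stereo pair.

    disparity_px: horizontal shift of the point between left and right images.
    focal_px:     focal length expressed in pixels.
    baseline_m:   distance between the two camera centers.
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point infinitely far away.
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    FOCAL_PX = 1000.0   # hypothetical focal length in pixels
    BASELINE_M = 0.01   # hypothetical 1 cm separation between lenses
    for d in (20.0, 10.0, 5.0):
        z = depth_from_disparity(d, FOCAL_PX, BASELINE_M)
        print(f"disparity {d:4.1f} px -> depth {z:.2f} m")
```

Halving the disparity doubles the estimated depth. Distant objects have disparities close to zero, so a one-pixel matching error far away causes a large depth error. A shorter baseline shrinks every disparity and makes this worse.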

Another way to guess depth is through motion. As you walk, objects in the distance appear to move more slowly than objects nearby.

This is similar to how augmented reality apps sense your location in the world. This isn’t a great solution for photographers, because it’s a hassle to ask someone to wave their phone in the air for several seconds before snapping a photo.
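The walking observation can be put into numbers. This minimal sketch assumes a straight-line walk at constant speed past a stationary object. The object’s apparent angular speed is the walking speed, times the sine of the angle between the direction of travel and the line of sight, divided by the object’s distance. If you know the walking speed, you can run the relation in reverse and turn a measured angular speed into a distance. The helper names are hypothetical.

```python
import math


def angular_speed(speed_mps: float, distance_m: float, angle_deg: float = 90.0) -> float:
    """Apparent angular speed (radians/second) of a stationary object.

    speed_mps:  observer's walking speed.
    distance_m: current distance to the object.
    angle_deg:  angle between direction of travel and line of sight
                (90 means the object is directly to the side).
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return speed_mps * math.sin(math.radians(angle_deg)) / distance_m


def distance_from_parallax(speed_mps: float, omega_rad_s: float, angle_deg: float = 90.0) -> float:
    """Invert angular_speed: recover distance from an observed angular speed."""
    if omega_rad_s <= 0:
        raise ValueError("angular speed must be positive")
    return speed_mps * math.sin(math.radians(angle_deg)) / omega_rad_s


if __name__ == "__main__":
    WALK_MPS = 1.4  # assumed typical walking pace
    for d in (2.0, 20.0, 200.0):
        w = angular_speed(WALK_MPS, d)
        print(f"{d:6.1f} m away -> {math.degrees(w):6.2f} deg/s")
```

A tree 2 m to your side sweeps past 100 times faster than a hill 200 m away. The catch is that the camera has to actually move to produce this signal.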

That brings us to our last resort: figuring out depth from a single (monocular) still image. If you’ve known anyone who can only see out of one eye, you know they live fairly ordinary lives; it just takes a bit more effort to, say, drive a car. That’s because they rely on other clues to judge distance, such as the relative size of known objects.

For example, you know the typical size of a phone. If you see a really big phone, your brain guesses it’s pretty close to your eyes, even if you’ve never seen that specific phone before. Most of the time, you’re right.
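The known-size cue is the pinhole camera model run backward. This minimal sketch assumes an idealized pinhole camera and an object facing it squarely. Distance equals focal length (in pixels) times the object’s real height, divided by its height in the image. The 15 cm phone height and 1000 px focal length are illustrative assumptions.

```python
def distance_from_known_size(real_height_m: float, image_height_px: float, focal_px: float) -> float:
    """Estimate distance to an object whose real-world height we assume.

    The estimate is only as good as the size assumption: if the object is
    not the size we expect, the distance is off by the same factor.
    """
    if image_height_px <= 0:
        raise ValueError("image height must be positive")
    return focal_px * real_height_m / image_height_px


if __name__ == "__main__":
    FOCAL_PX = 1000.0        # hypothetical focal length in pixels
    TYPICAL_PHONE_M = 0.15   # assumed "typical" phone height
    # Assuming a typical phone, an object spanning 300 px is estimated at 0.5 m.
    print(distance_from_known_size(TYPICAL_PHONE_M, 300.0, FOCAL_PX))
    # If it is really a 0.30 m novelty phone, its true distance is 1.0 m.
    print(distance_from_known_size(0.30, 300.0, FOCAL_PX))
```

The size assumption is also where this cue breaks. A giant novelty phone throws off the estimate by exactly the factor its size differs from what we expected.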

Cool. Just train a neural network to detect these hints, right?

Unfortunately, we’ve described an ill-posed problem. A well-posed problem has one, and only one, solution. Those problems sound like, “A train leaves Chicago at 60 MPH. Another train leaves New York at 80 MPH…”

When guessing depth, a single image can have multiple solutions. Consider this viral TikTok. Are they swinging toward, or away from, the observer?

Some images just can’t be solved, either because they lack enough hints, or because they’re literally unsolvable.
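One concrete source of ambiguity is scale. This minimal sketch assumes an idealized pinhole camera. Move every point in a scene twice as far away and make it twice as large, and every point lands on exactly the same pixel. Nothing in that single image can distinguish the two scenes. This is a different kind of ambiguity from the swinging example, but it is ill-posed in the same sense. The helper names are hypothetical.

```python
from typing import List, Tuple

Point3D = Tuple[float, float, float]
Pixel = Tuple[float, float]


def project(point: Point3D, focal_px: float = 1000.0) -> Pixel:
    """Idealized pinhole projection: (X, Y, Z) -> (f*X/Z, f*Y/Z)."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera")
    return (focal_px * x / z, focal_px * y / z)


def scale_scene(points: List[Point3D], k: float) -> List[Point3D]:
    """Make the whole scene k times larger and k times farther away."""
    return [(k * x, k * y, k * z) for x, y, z in points]


if __name__ == "__main__":
    scene = [(0.10, 0.20, 1.0), (-0.30, 0.05, 2.5), (0.00, -0.40, 4.0)]
    bigger_farther = scale_scene(scene, 2.0)
    for near, far in zip(scene, bigger_farther):
        # Each pair of 3D points maps to the same pixel.
        print(near, "->", project(near), "|", far, "->", project(far))
```

A learned model can only break this tie by falling back on priors, such as typical object sizes. That is exactly where it can be confidently wrong.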

At the end of the day, neural networks feel magical, but they’re bound by the same limitations as human intelligence. In some scenarios, a single image just isn’t enough. A machine learning model might come up with a plausible depth map, but that doesn’t mean it reflects reality.

That’s fine if you’re just looking to create a cool portrait effect with zero effort! If your goal is to accurately capture a scene, for maximum editing latitude, this is where you want a second vantage point (dual camera system) or other sensors (LIDAR).

Will machine learning ever surpass a multi-camera phone? No, because any system will benefit from having more data. We evolved two eyes for a reason.

The real question is when these depth estimates will be so close, and the misfires so rare, that even hardcore photographers can’t justify paying a premium for the extra hardware. We don’t think that’s this year, but at the pace machine learning is moving, it may be years rather than decades away.

It’s an interesting time for photography.

Try it yourself

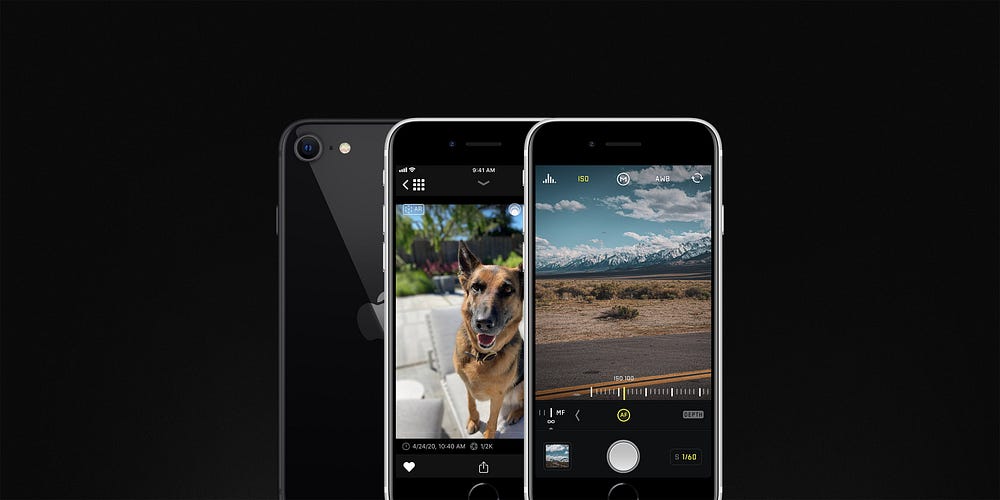

If this made you more curious about the cool world of modern depth capture, we launched our update for Halide last Friday, on iPhone SE launch day.

If you have any iPhone that supports Portrait mode, you can use Halide to shoot in our ‘Depth’ mode and view the resulting depth maps. It’s pretty fun to see how your iPhone tries to sense the wonderfully three-dimensional world.

Thanks for reading, and if you have any more iPhone SE questions, reach out to us on Twitter. Happy snapping!
