Public toilets in the Roman port city of Ostia once had running water under the seats. Ostia is where the researchers took a soil core sample to analyze lead pollution from pipe runoff.

The ancient Roman plumbing system was a legendary achievement in civil engineering, bringing fresh water to urbanites from hundreds of kilometers away. Wealthy Romans had hot and cold running water, as well as a sewage system that whisked waste away. Then, about 2,200 years ago, the waterworks got an upgrade: the introduction of lead pipes (called fistulae in Latin) meant the entire system could be expanded dramatically. The city's infatuation with lead pipes led to the popular (and disputed) theory that Rome fell due to lead poisoning. Now, a new study reveals that the city's lead plumbing infrastructure was at its biggest and most complicated during the centuries leading up to the empire's peak.

Hugo Delile, an archaeologist with France's National Center for Scientific Research, worked with a team to analyze lead content in 12-meter soil cores taken from Rome's two harbors: the ancient Ostia (now 3 km inland) and the artificially created Portus. In a recent paper for Proceedings of the National Academy of Sciences, the researchers explain how water gushing through Rome's pipes picked up lead particles. Runoff from Rome's plumbing system was dumped into the Tiber River, whose waters passed through both harbors. The lead particles quickly sank in the less turbulent harbor waters, so Delile and his team hypothesized that layers with more deposited lead in the soil cores would correlate to a more extensive network of lead pipes.

Put simply: more lead in a layer would mean more water flowing through lead pipes. Though this lead probably didn't harm ocean wildlife, it did leave a clear signature behind.

Dating the core sediments revealed a surprisingly detailed record of Rome's expansion over several centuries of development between 200 BCE and 250 CE. Examining the core from Ostia, the researchers found a sudden influx of lead in 200 BCE, when aqueducts made of stone and wood gave way to lead pipe. In later layers, the researchers found a mix of lead with different isotopic compositions. This suggests water was flowing into the harbor from a wide variety of lead pipes, crafted from leads of different ages and provenances.

The hexagonal Portus can be seen here, slightly above the mouth of the Tiber on the right.

The very existence of the pipe system was a sign of Rome's fantastic wealth and power. Most lead in Rome came from distant colonies in today's France, Germany, England, and Spain, which meant the Empire needed an extensive trade network to build out its water infrastructure. Plus, the cost of maintenance was huge. All pipes were recycled, but the city still had to repair underground leaks, check water source quality, and prevent the massive aqueducts from crumbling. In the first century CE, Roman water commissioner Julius Frontinus wrote a two-volume treatise for the emperor on the city's water system, including a discussion of how to prevent rampant water piracy, in which people would tap the aqueducts illegally for agricultural use—or just for drinking.

Because it was so expensive, the city's plumbing system is a good proxy for Rome's fortunes. In their soil core from Ostia, Delile and his team even discovered evidence of the Roman Empire's horrific civil wars during the first century BCE. As war sucked gold from the state's coffers, there was no money to build new aqueducts or to repair existing ones.

Around that time, the researchers saw a dramatic decrease in the amount of lead-contaminated water in the Ostia harbor. In fact, it dropped about 50 percent from previous years. Write the researchers:

[This] provides the first evidence of the scale of the contemporaneous reduction in flows in Rome's lead pipe distribution system—of the order of 50%—resulting in decreased inputs of lead-contaminated water into the Tiber. [Augustus']... progressive defeat of his rivals during the 30s BCE allowed his future son-in-law, Agrippa, to take control of Rome's water supply by 33 BCE. Over the next 30 years, they repaired and extended the existing aqueduct and fistulae system, as well as built an unprecedented three new aqueducts, leading to renewed increase in [lead] pollution of the Tiber river.

Once the city had recovered from the hardships of the wars, the researchers saw a steady increase in lead over the years that span the Empire's height during the 1st and 2nd centuries CE. This was when Frontinus led an effort to rebuild the city's plumbing system while wealthy Romans added crazy water features to their homes. Second-century Emperor Hadrian reportedly had a fountain in his villa that flowed into a reservoir next to the dinner table; he would serve guests food from little boats floating on it.

Delile and his colleagues saw the slow decline of Rome in the Ostia soil core, too. There was a strong drop in lead after the mid-3rd century CE, when, the researchers note, "no more aqueducts were built, and maintenance was on a smaller scale." They add that this phase of "receding [lead] contamination corresponds to the apparent decline of [lead] and [silver] mining and of overall economic activity in the Roman Empire."

PNAS, 2017. DOI: 10.1073/pnas.1706334114


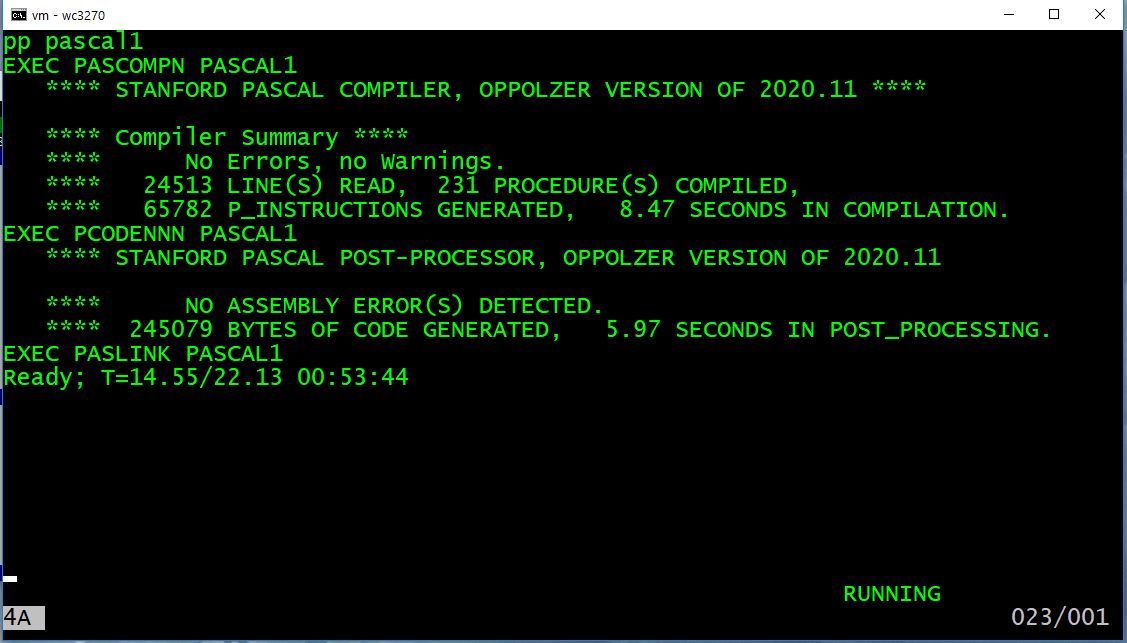

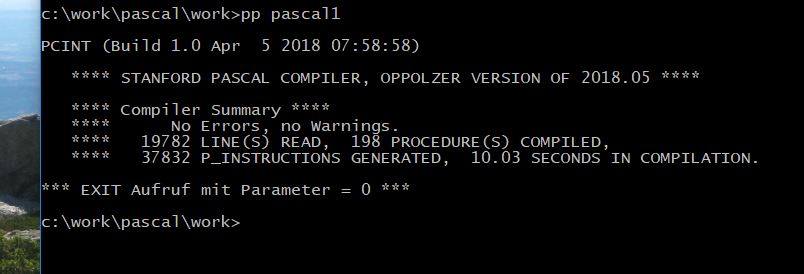
