Last week I recorded a podcast with John Spiegel and Jaye Tillson (available here), and one of the first things we discussed was the emergence of SD-WAN (Software-Defined Wide Area Networks) as I saw it. It’s a story with a lot of history that provides the jumping-off point for this week’s newsletter.

While the early success of SDN was in local area networks (and particularly the special case of datacenter networks), it didn’t take too long before it made its impact in the wide area. The WAN applications of SDN can be further subdivided: there was the application of SDN to traffic engineering of inter-datacenter networks (notably at Google and Microsoft), and then there was what came to be known as SD-WAN. The SD-WAN part of the story relates primarily to enterprise WANs–a huge business but not something that gets much coverage in the discussions of SDN at academic conferences. So let’s look a bit more closely at enterprise WANs and why SDN turned out to be a good fit for the challenges in that environment.

My introduction to enterprise WANs came in the early days of MPLS, around 1996. My team at Cisco had published the first drafts on Tag Switching at the IETF, which would eventually lead to the creation of the MPLS working group and all the RFCs that followed. As described in a previous post, we were approached by a team at AT&T whose main problem, in essence, was that their enterprise WAN business was too successful. Their core business was building WANs using Frame Relay virtual circuits to connect the offices and datacenters of enterprises. Deploying a WAN for one customer entailed provisioning a set of frame relay circuits to interconnect the sites, plus configuring a router at every site to manage the routing of traffic over the WAN. The complexity of managing these circuits and routing configurations was becoming overwhelming both because of the popularity of the service and because of the increasing desire to provide full-mesh connectivity (or something close to it) among sites.

At the time, one of the options that was being considered as an alternative to Frame Relay was to use IPSEC tunnels across the Internet to interconnect the sites. Leaving aside the fact that the Internet in 1996 was way less ubiquitous than it is today, the big downside of that approach was that it actually didn’t do much to reduce the complexity of configuration. You would need to configure as many IPSEC tunnels as Frame Relay circuits, and you would still need to configure the routing overlay on top of all those tunnels to forward traffic between all the sites. In rough terms, both Frame Relay and IPSEC tunnels require on the order of n² configuration steps, where n is the number of sites. MPLS/BGP VPNs came out ahead by reducing that configuration cost to order n, even with full mesh connectivity among sites. The full story of how that works is in RFC 2547 and in the book I wrote with Yakov Rekhter. One thing that we made sure of was that our system had no central point of control, because in 1996 we knew that central control was not an option for any networking solution aiming to scale up.
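To make the scaling difference concrete, here is a back-of-the-envelope sketch in Python. The function names are mine, and the assumption that an MPLS/BGP VPN needs exactly one attachment configuration per site is a simplification of "order n" rather than a statement about any real deployment.

```python
def full_mesh_circuits(n: int) -> int:
    """Point-to-point circuits (or tunnels) needed to fully mesh n sites."""
    return n * (n - 1) // 2


def full_mesh_endpoint_configs(n: int) -> int:
    """Each circuit has to be configured at both of its ends."""
    return 2 * full_mesh_circuits(n)


def per_site_attachments(n: int) -> int:
    """Simplifying assumption: one attachment configuration per site."""
    return n


for n in (10, 100, 1000):
    print(
        f"{n:>5} sites: {full_mesh_circuits(n):>7} circuits, "
        f"{full_mesh_endpoint_configs(n):>7} endpoint configs, "
        f"{per_site_attachments(n):>5} per-site attachments"
    )
```

At 100 sites the full mesh already means 4,950 circuits and 9,900 endpoint configurations, against 100 per-site attachments under the simplified model.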

A few years later (2003) I gave the SIGCOMM talk for which I am best known, “MPLS Considered Helpful” (in the outrageous opinion session, of course). Talking to people afterwards, I was struck by the lack of awareness of how widely deployed MPLS/BGP VPN service was at that point. There were over 100 service providers using it to provide their enterprise WAN services by 2003, but that was completely invisible to most of the SIGCOMM community (perhaps because universities don’t rely on such services and because enterprise network admins don’t show up at academic conferences).

Fast forward to 2012 and MPLS VPNs were the de facto choice for enterprise WANs. But many things had changed since 1996, and those changes were about to align to create the conditions for SD-WAN to emerge. Importantly, the idea that central control was a non-starter had been effectively challenged with the rise of SDN in other settings. Scott Shenker’s influential talk “The Future of Networking, and the Past of Protocols” had made the compelling case for why central control was needed in SDN, and developments in distributed systems had enabled scalable, fault-tolerant centralized controllers such as the one we built for datacenter SDN at Nicira. The first time I heard about the ideas behind SD-WAN was when one of my Nicira colleagues told me he was leaving for another startup. The simple high-level sketch he gave of applying an SDN-style controller to the problem of enterprise WANs immediately made sense.

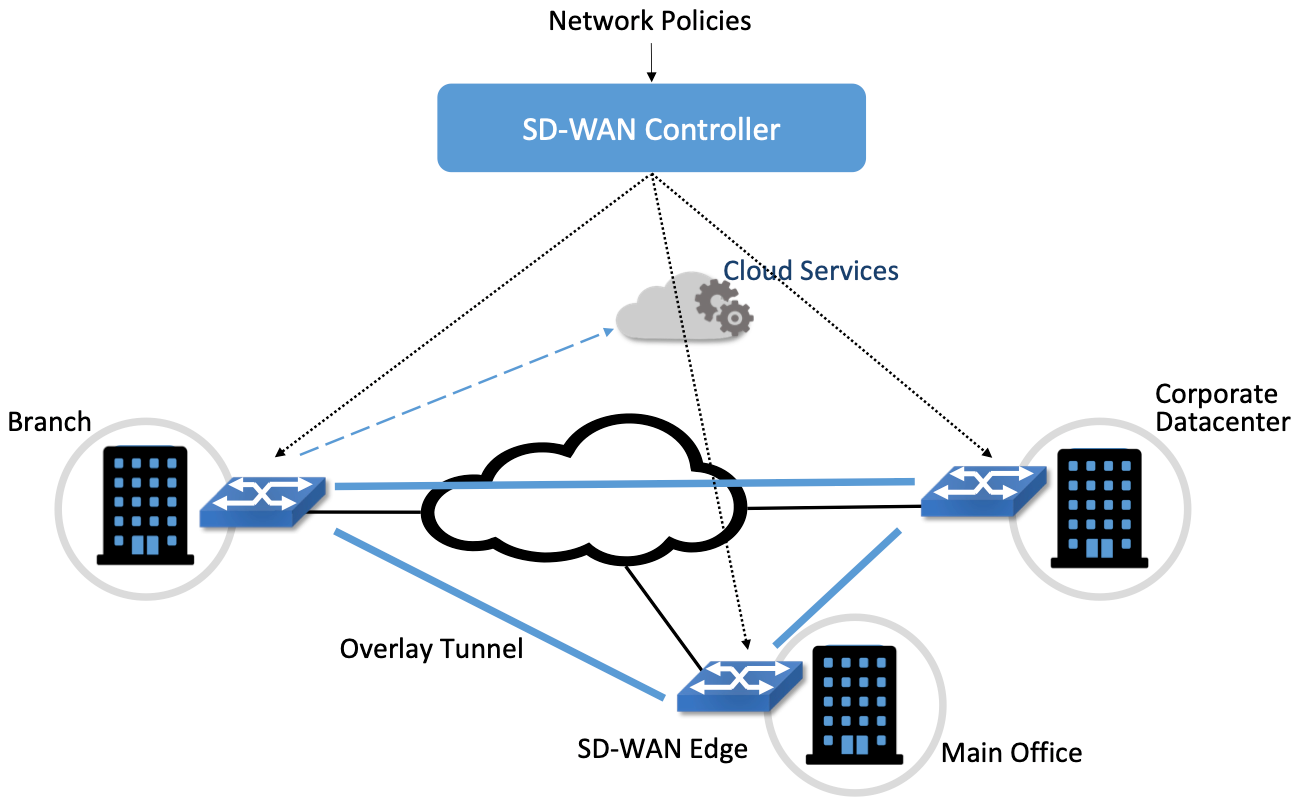

Whereas in 1996 we relied on a fully distributed approach using BGP to determine how sites could communicate with each other, an SDN controller allows policies for inter-site connectivity to be set centrally while still being implemented in a distributed manner at the edges. The benefits of this approach are numerous, especially in an era where high-speed Internet access is a widely available commodity. Building a mesh of encrypted tunnels among a large number of enterprise sites no longer has n² configuration complexity, because you can let the controller figure out which tunnels are needed and push configuration rules to the sites. Furthermore, there is no longer a dependence on getting a particular MPLS service provider to connect your site to their network: you just have to get Internet connectivity to your site. This factor alone–replacing “premium” MPLS access with “commodity” Internet–was decisive for some SD-WAN early adopters.
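The idea of letting a controller work out the tunnels can be sketched in a few lines of Python. This is a toy illustration, not how any particular SD-WAN product works: the function names, the two policy names, and the site names are all hypothetical, and a real controller would also handle keys, addressing, and failures.

```python
from collections import defaultdict
from itertools import combinations


def plan_tunnels(sites, policy="full-mesh", hubs=()):
    """Return the set of site pairs that need an encrypted tunnel."""
    if policy == "full-mesh":
        return set(combinations(sorted(sites), 2))
    if policy == "hub-and-spoke":
        hub_set = set(hubs)
        # Hubs are meshed with each other; every spoke connects to every hub.
        pairs = set(combinations(sorted(hub_set), 2))
        for spoke in set(sites) - hub_set:
            for hub in hub_set:
                pairs.add(tuple(sorted((spoke, hub))))
        return pairs
    raise ValueError(f"unknown policy: {policy}")


def per_site_config(tunnels):
    """Turn the global tunnel plan into the peer list pushed to each site."""
    config = defaultdict(list)
    for a, b in sorted(tunnels):
        config[a].append(b)
        config[b].append(a)
    return dict(config)


sites = ["austin", "berlin", "chennai", "denver"]  # hypothetical site names
mesh = per_site_config(plan_tunnels(sites))
star = per_site_config(plan_tunnels(sites, "hub-and-spoke", hubs=["austin"]))
```

The operator states one policy; the number of tunnels is still quadratic for a full mesh, but nobody has to type them in. Switching from full mesh to hub-and-spoke is a one-argument change at the controller rather than a reconfiguration of every site.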

The other big change in the decades since RFC 2547 was the rise of cloud-based services as an important component of enterprise IT (Office 365, Salesforce, etc.). Traditionally, an MPLS VPN would provide site-to-site connectivity among branches and central sites. If access to the outside Internet was required, it would entail backhauling traffic to one of a handful of central sites with external connectivity and all the firewalls etc. needed to secure that connection. But as more business services were delivered from “the cloud”, and with SD-WAN leveraging Internet access rather than dedicated MPLS circuits to every branch, it started to make sense to provide direct Internet access to branch offices. This meant a significant change to the security model for networking. Rather than a single point of connection between the enterprise and the Internet (with a central set of devices to control attendant security risks) there are now potentially as many connection points as there are branches.

So with SD-WAN and the rise of cloud services, you need a way to set and enforce security policy at all the edges of your enterprise–and now there is potentially an edge at every branch. This, in essence, is how SD-WAN morphed into SASE (Secure Access Service Edge): once you started putting SD-WAN devices at every site, you needed a way to apply security services at those sites. Fortunately, the “centralized configuration with distributed enforcement” model of SDN provides a natural way to address this issue. The SD-WAN device at the edge is not just an IPSEC tunnel-terminating device, but is also a policy enforcement point to apply the security policies of the enterprise. And the central point of control for an SD-WAN system provides a tractable means of configuring the policies in one place even though they will be implemented in a distributed way.
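A minimal sketch of “centralized configuration with distributed enforcement” might look like the following. Everything here is assumed for illustration: the `Rule` and `EdgeDevice` classes, the three actions, the first-match-wins semantics, and the address ranges (which are private and documentation prefixes) are not taken from any real SASE or SD-WAN system.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    destination: str  # CIDR prefix the rule matches
    action: str       # "tunnel", "direct", or "drop"


# Written once, at the controller.
CENTRAL_POLICY = [
    Rule("10.0.0.0/8", "tunnel"),      # inter-site traffic uses the encrypted overlay
    Rule("203.0.113.0/24", "direct"),  # an approved cloud service exits at the branch
    Rule("0.0.0.0/0", "drop"),         # default deny
]


class EdgeDevice:
    """Hypothetical branch device that enforces whatever policy it was sent."""

    def __init__(self, site):
        self.site = site
        self.rules = []

    def install(self, rules):
        self.rules = list(rules)

    def decide(self, dst):
        addr = ip_address(dst)
        for rule in self.rules:  # first matching rule wins
            if addr in ip_network(rule.destination):
                return rule.action
        return "drop"  # no policy installed yet: fail closed


def push_policy(policy, edges):
    """The controller's job: distribute one policy to every edge."""
    for edge in edges:
        edge.install(policy)


edges = [EdgeDevice("berlin"), EdgeDevice("denver")]
push_policy(CENTRAL_POLICY, edges)
```

Each branch makes its forwarding decision locally, so no traffic has to be backhauled to a central firewall, yet the policy itself lives in exactly one place.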

There is a lot more to SD-WAN than I have space to cover here. For example, dealing with QoS in the presence of the Internet’s best-effort service turns out to be important and is one area where the commercial providers of SD-WAN equipment try to differentiate themselves. SD-WAN remains an area where open standards have yet to make much of an impact. But it certainly provides an interesting case study in how a change in the adjacent technologies can make ideas that once seemed impractical (such as central control and VPNs built with meshes of encrypted tunnels) viable again.

Following up on our prior post about getting a 5G small cell up and running, Larry finally tracked down the last bug in his configuration and was able to get traffic flowing from his mobile devices to the Internet. It turned out that debugging networking among a set of containers connected by overlay networks isn’t that easy! We’re updating the appendix of our 5G book accordingly. There is still time to submit your bugfixes to the book via GitHub to earn our thanks and a copy of the book.

You can find us on Mastodon here. The Verge has a good article about the Fediverse. Or maybe you want to have a look at Substack Notes, which we have just started to play with. Photo this week by Aaron Burden on Unsplash.
