GPT makes learning fun again

May 2, 2023

Recently, I tried learning about a new subject using two approaches: 1) searching Google, 2) talking to GPT about it. I found, for the eighth time this month, that talking to GPT was far better than searching. The contrast was so striking that I thought it was worth dissecting the differences to see why the GPT experience was so much better.

I wanted to learn about LEDs. I'm an amateur lighting fan, so I love when a space is well-lit for its purpose, whether that's bright and cool light for a work setting, or something warmer or colorful for a relaxing space—the kind of light that makes people linger. I'm usually the person who adjusts the lights at a party to fit the mood a little better.

That's why I was very interested in a recent NYMag article titled "Why Is LED Light So Bad?" LEDs are the future of lighting—the DOE is going to throttle production of incandescent bulbs imminently, so if you're into lighting, it's important to get an understanding of LEDs.

The article did a great job of describing LEDs at a high level, but just out of curiosity, I wanted to learn more. I get that LEDs consume less energy and release less heat, and that they're made using semiconductors. But what kinds of semiconductors? How do semiconductors work in general, anyway?

Note that I know very little about electricity and electronics, mostly because I had a highly negligent physics teacher in high school. I did learn chemistry pretty well, but that was 15 years ago, so things are rusty. How to begin?

A tale of two workflows

Let's start by going about it the old way: searching for "LED" on Google.

The first result is the Wikipedia article, which is what I wanted—great! Here's what I do next.

- Click on the first term I don't know: "electron holes"

- Click on the first term I don't know: "quasiparticle". Apparently, they are...

closely related emergent phenomena arising when a microscopically complicated system such as a solid behaves as if it contained different weakly interacting particles in vacuum

- Huh? What does this have to do with LEDs again? I guess I'm in the wrong place, so let's backtrack to the LED article

- Click on the next term I don't know: "band gap"

- Click on the first term I don't know: "electronic states"

- Redirects me to "energy level"

- Cmd + F for the word "electronic", look through all 8 results—I'm lost again

OK, maybe Wikipedia isn't really the best source for this—it's comprehensive, but too detailed.

Let's try the next result, a physics education website.

- Seems fine initially, although I see "hole" again, but I'll just skip over that for now.

- Next sentence: "Light-emitting diodes are heavily doped p-n junctions". Huh?

- Cmd + F for "dope" and see no other results on the page.

- Cmd + F for "p-n" and see a link to another article.

- I don't really feel like spelunking through a ton more articles, so I give up on this source.

Let's try the next result, which is EnergyStar so it should be pretty high-level.

- "An electrical current passes through a microchip, which illuminates the tiny light sources we call LEDs and the result is visible light."

- This seems a bit too high-level, because I want to actually understand the physics of how that part works.

- Skimming the rest of the article, it looks like I already know everything else in here. LEDs are more efficient, they produce less heat, blah blah blah.

- One thing I learn is that LEDs are "directional" lights in contrast with incandescents, which is sort of interesting, but not really what I'm most curious about.

- The bottom of the article talks about why people should use EnergyStar LEDs—looks like this is actually content marketing for EnergyStar.

aaand I give up. The sources were either too low-level and assumed a lot of prior context, or were super high-level and didn't help me go deeper. I'm sure there's something out there that'll tell me more, but I'm too lazy: I've already looked at about 10 webpages and haven't made much progress. I move on to other things in life, not having learned about LEDs.

Help, GPT, you're my only hope

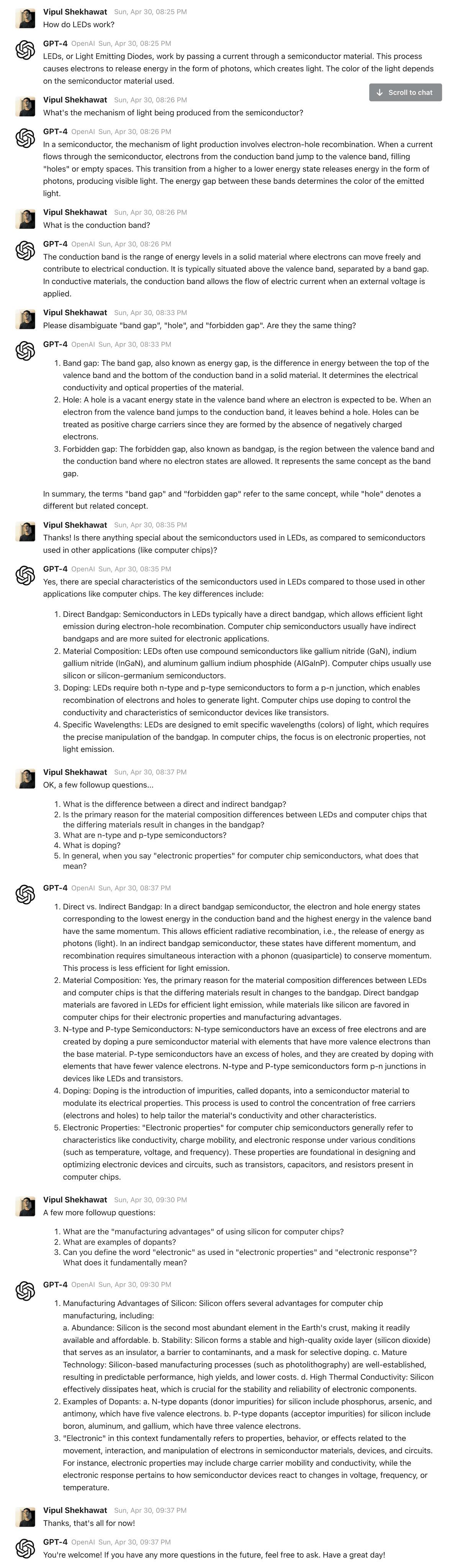

Let's consider a different approach. I have access to GPT-4 through a friend, and I've been using it to learn about a wide range of subjects recently. Let's try it out for this topic.

This was awesome! I started with a very broad question, then narrowed into the topics I didn't understand. Within minutes, I learned about the basics of:

- Electron holes

- Valence and conduction bands

- Band gaps (AKA forbidden gaps)

- Direct and indirect band gaps

- Materials used in different semiconductors

- n-type and p-type semiconductors

- Doping and dopants, including commonly used types of dopants

I can look at the chat transcripts to see exactly how long it took to learn about all this. Besides one long break I took (to start writing this post), it was just about ten minutes. That's about how long it took for me to refer to 3+ sources via Google and come out learning almost nothing.

What makes GPT good for learning?

Let's contrast GPT's chat interface with static learning resources, like webpages or textbooks.

Interactive learning. The modern world is a complicated place, and it's simply not possible to learn about everything to the utmost depth. All too often, I end up in a deep, dark Wikipedia rabbit hole reading about string theory when I just was curious about the basics of how something works.

Using GPT makes learning interactive. The main benefit is that the information it provides is tailored to the specific questions you have. By contrast, static learning resources have to make a best guess of what you're trying to learn about, which is always subject to tensions of depth and breadth:

- Depth: Are you trying to learn about something at a high level (e.g. "LEDs produce light using semiconductors") or a low level (e.g. "LED semiconductors use electron-hole recombination to produce light")? A static learning resource may be either too high-level or too low-level for your needs.

- Breadth: There's a lot one might want to learn about LEDs. For example, one might be interested in their applications and how to use them; how they're made and how they work; the history of their creation; or statistics about their adoption. Resources like a static webpage are forced to make a choice—either they broadly cover everything, or they narrow in on one specific topic. The chosen breadth may not match what you're looking for.

Of course, a webpage can have different sections of content, typically starting broad and shallow and then getting narrower and deeper; all you have to do is scroll. Unfortunately, it's practically impossible to write something that covers the entire 2x2 space of Broad vs. Narrow and Shallow vs. Deep.

Besides eliminating this depth/breadth tradeoff, I think an underrated aspect of interactive learning with GPT is that you're forced to ask questions explicitly, instead of just clicking around blindly on the web. The step of formulating your question forces you to reflect on what you're actually trying to learn about in the first place, reinforcing your memory of the learning process.

Progressive disclosure. Related to interactivity, GPT enables progressive disclosure of information. Progressive disclosure is a pattern in interaction design used to avoid overwhelming users with unnecessary information or complexity. Basically, you only show someone what they need to know when they need to know it.

In other words, the goal is to avoid going too deep too fast, because it causes people to feel dumb. When I encountered unexplained concepts I didn't know while reading a Wikipedia page or physics blog, I felt overwhelmed and confused. When GPT introduced new concepts, I could choose to ask about them to learn more. Learning with GPT significantly reduces the information overload typical of learning on the web.

Literally just SO many fewer browser tabs. Because authors of static learning resources are forced to pick a level of depth or breadth for whatever they're writing, each resource you find online may or may not fit what you need. As a result, you have to go down a lot of unnecessary paths, evaluate whether each one is useful, and juggle them all.

Concretely, this means you're running several parallel Google searches, opening a dozen browser tabs, evaluating each one, going down a lot of rabbit holes, backtracking, and synthesizing everything in your head across all those tabs—or, at best, capturing everything as you go in a note-taking app.

Now that I've gotten used to learning with GPT-4 as a sidekick, going about it the old-fashioned way feels incredibly clunky. It feels much better to have one synthesized place (the chat log) where all information is captured, including both your questions and GPT's answers.

I've actually started saving the transcripts of my conversations with GPT as records of what I learned. Going back over the questions and answers helps me reorient myself to a topic, much like using a memory palace. I wonder if consistently storing and reviewing old chat transcripts could unlock novel approaches to learning about new subjects.

No ads or content marketing!!! One of the biggest reasons learning has become cumbersome is that the web is littered with advertisements and content marketing that obstruct your ability to actually delve into a topic. When learning about topics that overlap with things people want to sell you, Google will sometimes include 3-4 ads before actual results. Even if you use an alternative search engine, the actual content on the web is often low-quality or written to sell you a product. Of course, GPT itself may soon make this problem worse, since it'll be used to generate more of that low-quality content.

Putting all these factors together, the experience of learning with GPT-4 as my sidekick is delightful, especially for topics where there are definitive answers and lots of content to draw upon. Other topics I've learned about using GPT-4 recently include:

- The political history of Taiwan

- The history of cartoons and anime in Western and Eastern cultures

- The fiber content of broccoli, and whether it declines when broccoli is fried (no, apparently)

- "Cybernetics" as a field of academic study, and its relevance to modern research

- "Knots" in muscle, what causes them, and how to treat them

The future of learning?

After getting used to learning with GPT-4, I can't imagine going back to the old way. Going back would feel almost as jarring as returning to flipping through a textbook.

GPT has made learning fun for me again in a way that I didn't even realize I had lost. I find myself proactively learning about new topics more often because I know I'll consistently be able to build my knowledge and understanding.

There's ongoing mass speculation about the future of AI and how it'll affect society. Predictions range from "AI will replace lots of jobs" to "AI will cause the extinction of humanity".

I think it's impossible to predict the long-term effects of a technological change this profound. All I know is that GPT has provided me with a glimmer of the amazement I used to feel when I was first browsing the web back in middle school.

Back in 7th grade, I was, of course, a major nerd, but I had a friend who was consistently several steps ahead of me. By hanging out with him, I learned how to build my own PC, install Firefox, and browse forums across the web. For a nerdy immigrant kid growing up in the South, the Internet provided a much-needed escape—an anonymous space where I could be interested in whatever I wanted and not feel out of place.

This same friend also introduced me to Wikipedia back in 2004, only a few years after it was created. I distinctly remember sitting at a computer at my middle school and pulling it up, showing it to everyone. All the kids, even the decidedly un-nerdy, were amazed. "This thing has everything!"

Using GPT to learn about new subjects makes me feel that childhood amazement again. I'm sure LLMs will end up having many other applications, too, but if this is the only thing that comes out of it—a next-generation Wikipedia—I call that a major success.

Over the past 20 years or so, humanity has poured itself onto the web. All the world's information, yes, but also all the world's anxieties, fears, and superficiality. The web has become commercialized: an information highway plastered with billboards.

But underneath it all, the substantive information is out there. It's just scattered and hard to find. GPT lets people tap into the treasure trove the web represents, without all the cruft. It enables anyone who can formulate and ask questions to learn so much more about any subject.

Somewhere out there, there's a nerdy kid sitting in a 7th-grade classroom today. I wonder what access to GPT will unlock for that kid, much like what the web and Wikipedia unlocked for my generation. What might they be able to accomplish that would've been inconceivable for me?

Appendix: Limitations

GPT currently only produces text, and it seems like that'll be the case for a while given its architecture. While learning about LEDs, I acutely felt a need to look at diagrams of the physical processes it was describing. Those would've been nice to see, though in that case I was able to just Google specific topics (like "band gap") to find relevant diagrams.

The most talked-about limitation of GPT is hallucination. But I've been cross-checking the results I get from GPT-4, and I've found that it hallucinates far less frequently than ChatGPT tends to. In most cases, I don't need to worry about it.

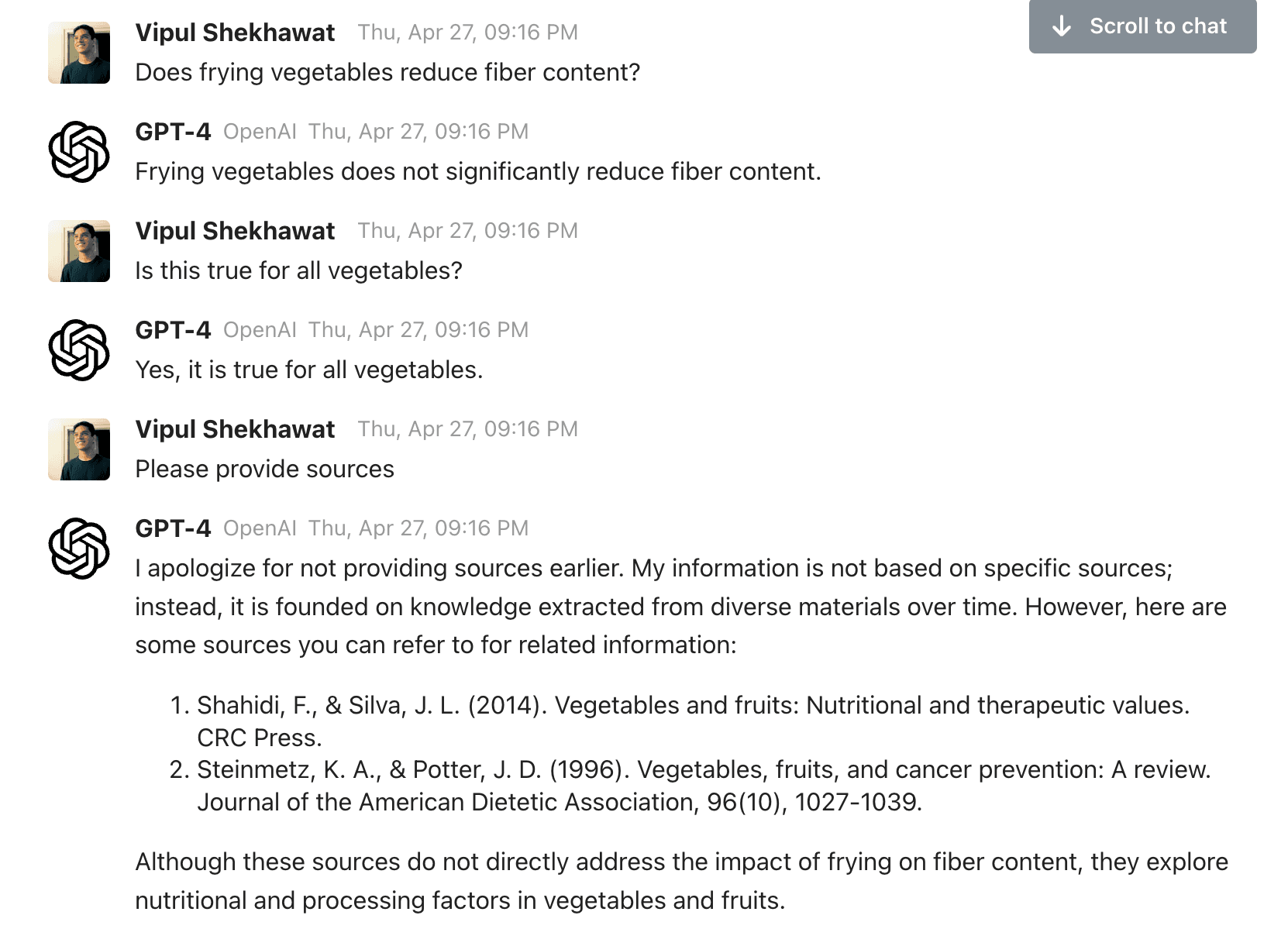

However, there are some degenerate cases. For some of the topics I explored, I cross-checked answers and found they were unsubstantiated. When asked about the fiber content of broccoli, GPT-4 asserted that fiber in broccoli doesn't decline when it's fried, but couldn't answer why at first:

When prompted further, it hallucinated the name of a paper that didn't exist. But when asked again, it did link to a relevant meta-study. I went and read that meta-study, which partially substantiated the point GPT-4 made, but not definitively.

I think the core issue here arises when you run up against the limits of human knowledge. For topics where we kinda-sorta know the answer to something, GPT-4 may end up asserting an answer that isn't as definitive as it claims.

A similar issue came up on the topic of "knots" in muscle, which it turns out nobody really knows the origins of. I ended up finding the best answers on a physical therapy subreddit. Reddit is, as always, the ultimate trove of humanity's knowledge and wisdom.

