When you’ve got a great idea for a startup, application architecture is probably one of the last things on your mind. But architecting your app right the first time can save you major headaches further down the road.

So let’s take a look at a typical startup application architecture, with a particular focus on the database and how the choices that application architects make in that part of their stack can impact scale, user experience, and more.

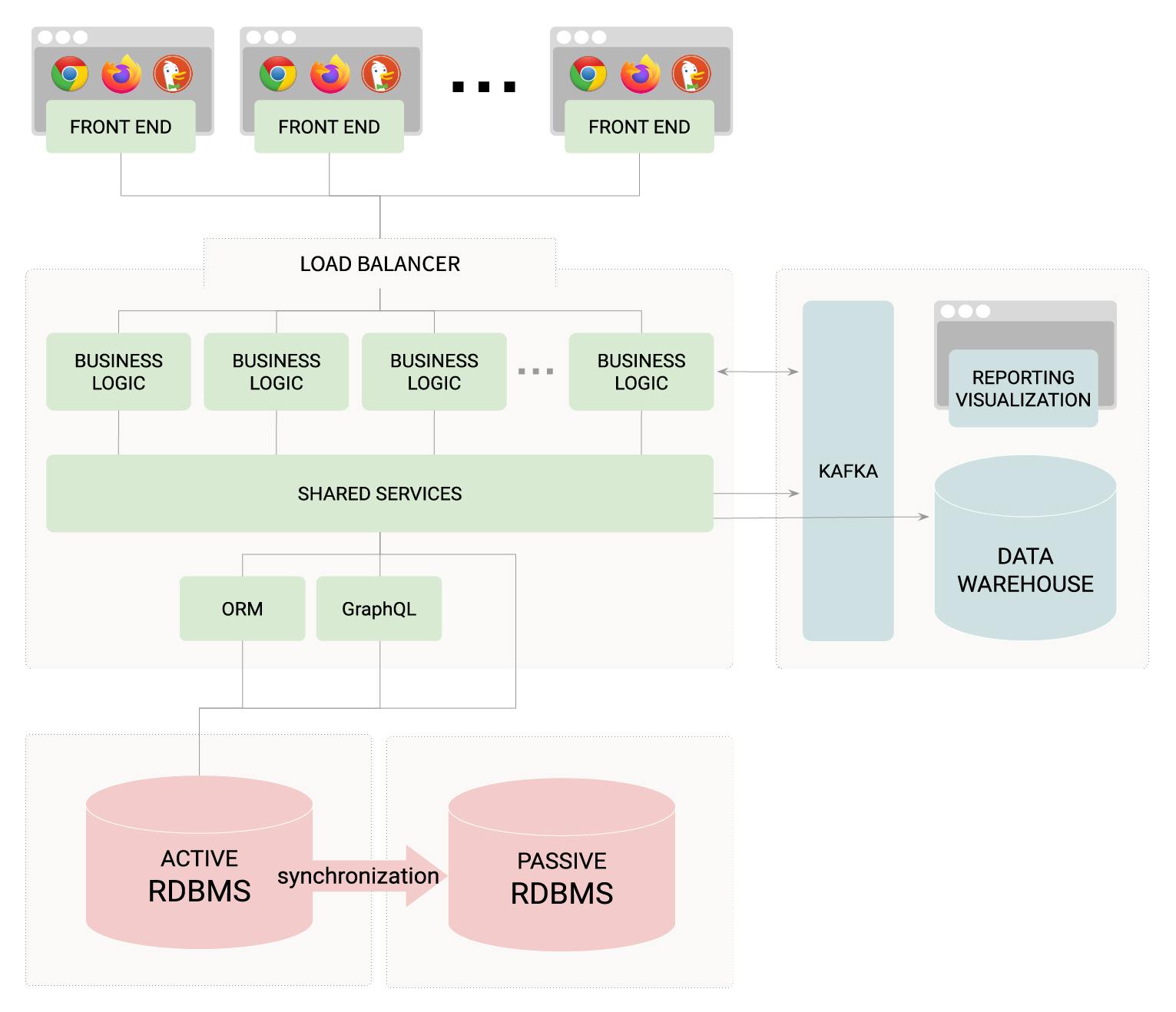

Single-region architecture

Working from the top down, modern applications begin with a user-facing front end that’s typically built with the languages of the web – HTML, CSS, JavaScript, etc.

The front end of the application is connected to the back end via a load balancer that distributes requests across instances of the application, which is written in your programming language of choice – Node.js, Python, Go, etc. These applications are typically built and deployed via shared services (for example, Netlify or Vercel) that support features like serverless functions, enabling elastic scale for the application back end without the cost of purchasing dedicated machines.

From the back end, data may be passed horizontally to a data warehouse for long-term storage and analytics processing, often through a message queue or a streaming platform such as Apache Kafka.

Mission-critical data (such as transactional data) is also passed vertically from the back end of the application to the relational database, either directly or via an intermediary such as an ORM (Object-Relational Mapper) or something like GraphQL.

At the database level, even small applications typically aim for some sort of redundancy, since relying on a single database node means your entire app goes down if the node fails. However, that redundancy is typically an active-passive setup, and this approach has limitations around availability and complexity. Let’s look at a couple of popular options for maintaining high availability for your database in more detail.

Database considerations for single-region

The easiest way to achieve reasonably high availability is with two database nodes, configured using the active-passive model as depicted in the “before” part of the image above. With active-passive, a single database node handles all reads and writes, which are then synchronized with the passive node for backup. If the active node goes down, you can switch over to make the passive node active, and then restore the active-passive configuration once you’ve gotten the broken node back online.
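The failover sequence described above can be sketched as a toy model. This is only an illustration under simplifying assumptions: the `Node` and `ActivePassivePair` classes are hypothetical, replication is modeled as an in-process copy, and health detection is a flag set by hand rather than a real monitoring system.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """Hypothetical stand-in for one database node."""
    name: str
    healthy: bool = True
    data: dict = field(default_factory=dict)


class ActivePassivePair:
    """Toy model: one node serves all traffic, the other only receives copies."""

    def __init__(self, active: Node, passive: Node) -> None:
        self.active = active
        self.passive = passive

    def write(self, key: str, value: str) -> None:
        if not self.active.healthy:
            raise RuntimeError("active node is down; fail over first")
        self.active.data[key] = value
        # Synchronize the write to the passive node for backup.
        if self.passive.healthy:
            self.passive.data[key] = value

    def read(self, key: str) -> str:
        if not self.active.healthy:
            raise RuntimeError("active node is down; fail over first")
        return self.active.data[key]

    def fail_over(self) -> None:
        # Promote the passive node; the failed node becomes the new passive
        # once it has been repaired and brought back online.
        self.active, self.passive = self.passive, self.active


pair = ActivePassivePair(Node("db-1"), Node("db-2"))
pair.write("order:1", "paid")
pair.active.healthy = False   # simulate the active node failing
pair.fail_over()              # in this model, an operator-triggered step
result = pair.read("order:1")
```

Even in this simplified form, only one node ever answers `read` and `write`, which is the capacity limitation discussed next.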

However, this approach requires a lot of complex operations work to manage and maintain, and while you’re paying for two database nodes, you’re only getting the performance of a single-node system, since a single node handles all read and write requests. If you want to send data from this database to a system like Kafka, for example, you’ll also have to build something like a transactional outbox to ensure consistency between Kafka and your database and avoid the dual write problem.
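To make the transactional outbox idea concrete, here is a minimal sketch. It uses Python's built-in `sqlite3` module as a stand-in for the relational database, and a plain callable as a stand-in for a Kafka producer. The table names, `place_order`, and `relay_outbox` are assumptions made up for this illustration, not part of any particular library.

```python
import json
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders (id INTEGER PRIMARY KEY, item TEXT NOT NULL);
    CREATE TABLE outbox (
        id INTEGER PRIMARY KEY AUTOINCREMENT,
        payload TEXT NOT NULL,
        published INTEGER NOT NULL DEFAULT 0
    );
    """
)


def place_order(order_id: int, item: str) -> None:
    # One transaction: the order row and its event row commit or roll back
    # together, so the database never holds one without the other.
    with conn:
        conn.execute("INSERT INTO orders (id, item) VALUES (?, ?)", (order_id, item))
        conn.execute(
            "INSERT INTO outbox (payload) VALUES (?)",
            (json.dumps({"order_id": order_id, "item": item}),),
        )


def relay_outbox(publish) -> int:
    """Send unpublished events via `publish`, then mark them as sent."""
    rows = conn.execute(
        "SELECT id, payload FROM outbox WHERE published = 0 ORDER BY id"
    ).fetchall()
    for row_id, payload in rows:
        publish(payload)  # stand-in for a real Kafka producer call
        with conn:
            conn.execute("UPDATE outbox SET published = 1 WHERE id = ?", (row_id,))
    return len(rows)


sent = []                      # pretend message broker
place_order(1, "keyboard")
relayed = relay_outbox(sent.append)
```

Because an event is marked as published only after `publish` returns, a crash between those two steps would resend it on the next run. A relay like this gives at-least-once delivery, so consumers should tolerate duplicates.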

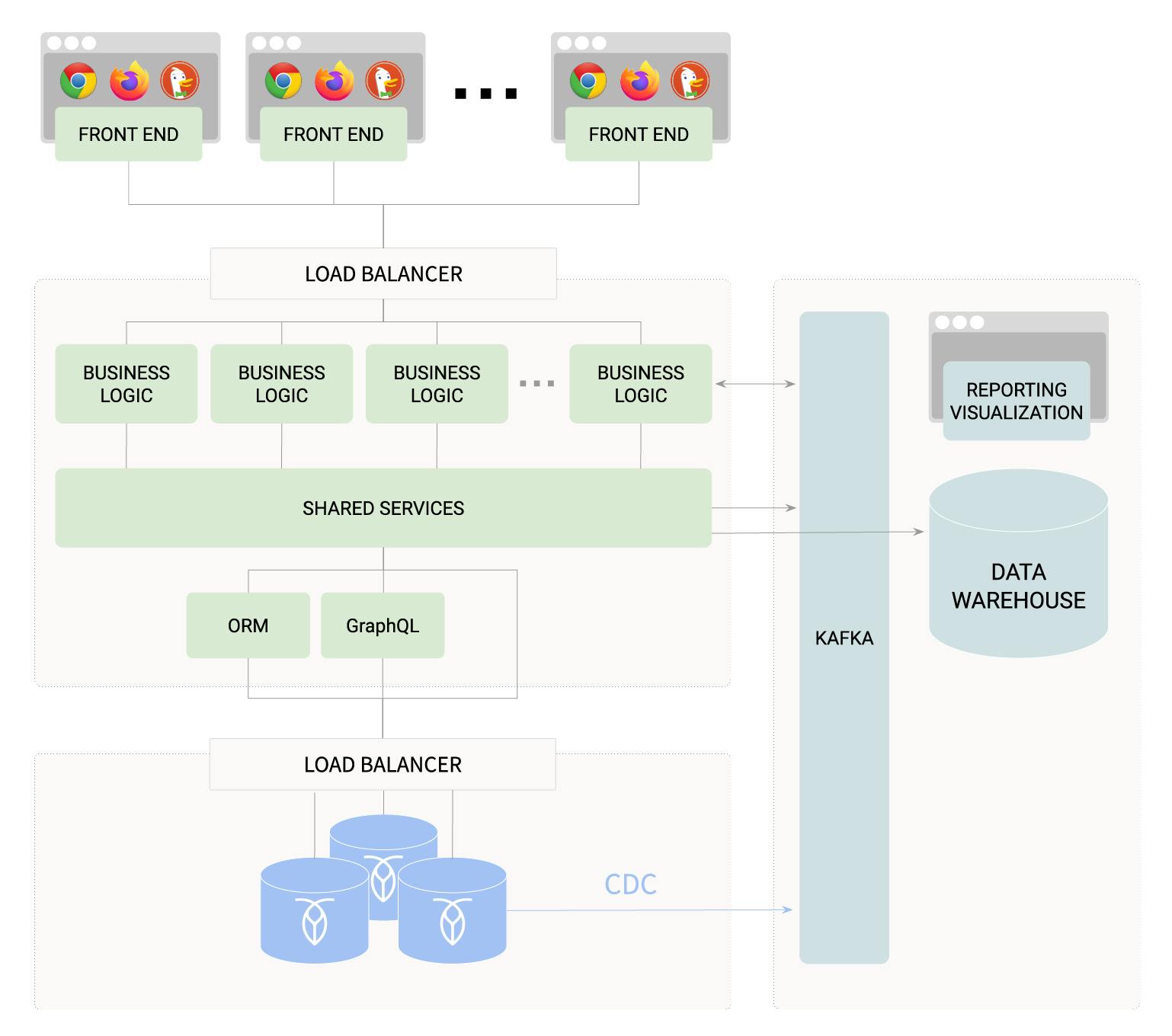

In the architecture above, we can see how using CockroachDB offers an alternative approach. CockroachDB is an active-active database, so if (for example) you’re running CockroachDB with three nodes, you’re getting the performance benefits of having three nodes because reads and writes are spread across the nodes rather than all being routed through a single node as in active-passive.

More importantly, an active-active database provides inherent resilience, and it takes very little operational work to ensure uptime when a node fails. Data is distributed across multiple nodes and every node is an endpoint, so all nodes can service both reads and writes. This active redundancy allows you to not just survive failures but also perform database upgrades and schema changes without downtime.

The CockroachDB implementation is also easy to scale up and down by, for example, adding or removing nodes from a CockroachDB cluster. With CockroachDB Serverless, scaling is entirely automated, and your database resources will scale up and down in real time in response to the needs of your application.

Additionally, CockroachDB’s CDC (Change Data Capture) feature makes it easy to store the same data in your transactional database and Kafka without the possibility of introducing inconsistencies.

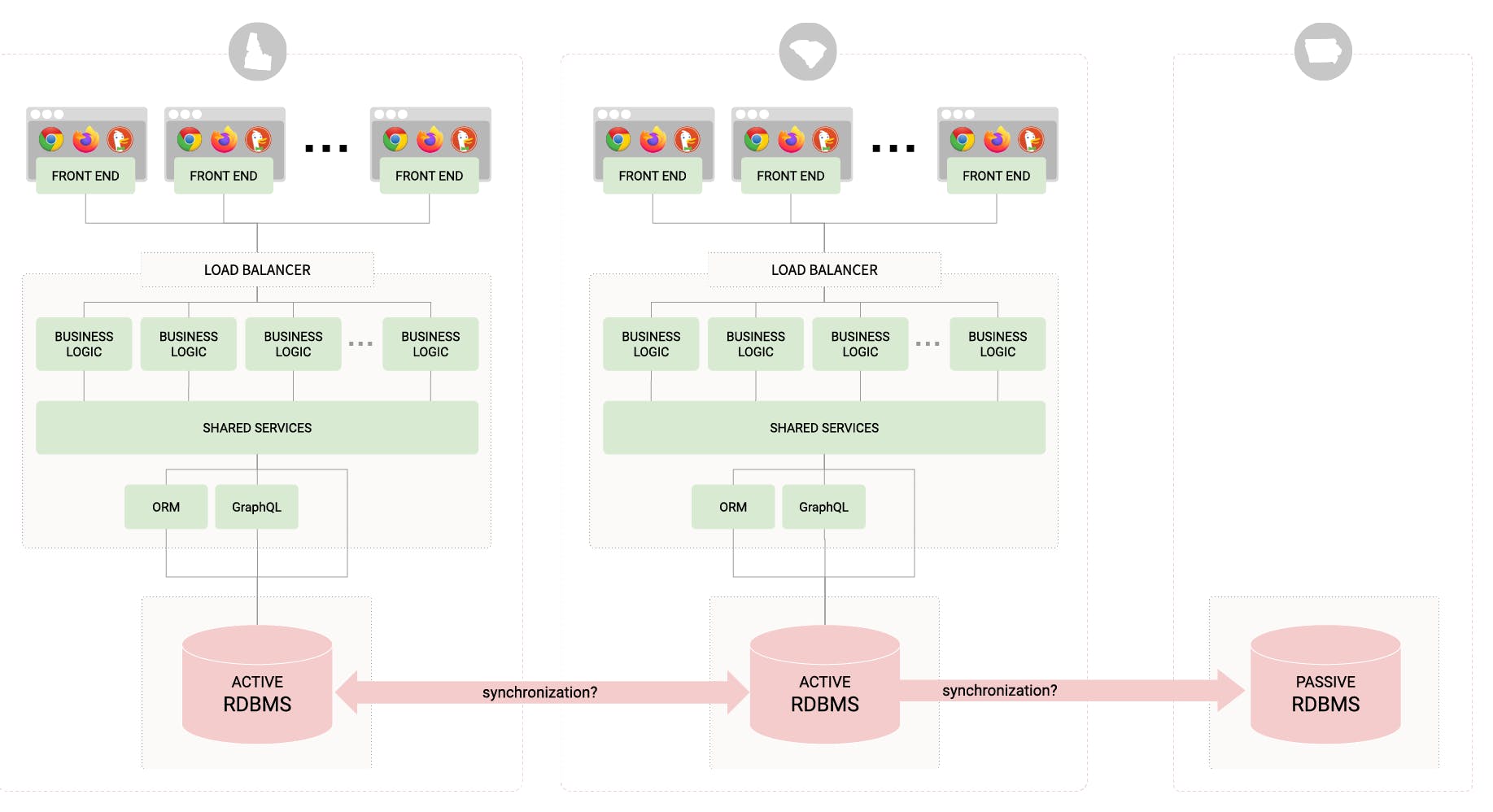

Scaling the database to multiple regions

In general, an application doesn’t change much when moving from single-region to multi-region. However, the relational database can present a significant challenge when your app starts to expand beyond a single region.

Consider, for example, an ecommerce application using the architecture depicted above. If a user in Idaho makes a purchase, that transaction will be stored in the Idaho instance of the application database, but the South Carolina instance also needs that information immediately so that (for example) a user in South Carolina can’t buy an item that just went out of stock because an Idaho user purchased the last one.
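The out-of-stock scenario is easy to guard against when both buyers hit the same logical database. The sketch below, using `sqlite3` as a stand-in and a hypothetical `inventory` table, shows a conditional decrement that lets only one of two buyers take the last item. The hard part in a multi-region setup is getting this same guarantee when the two purchases arrive at different database instances.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE inventory (sku TEXT PRIMARY KEY, stock INTEGER NOT NULL)")
conn.execute("INSERT INTO inventory VALUES ('lamp', 1)")  # one item left
conn.commit()


def purchase(sku: str) -> bool:
    """Try to buy one unit; succeed only if stock is still available."""
    with conn:
        cur = conn.execute(
            # The stock check and the decrement happen in a single statement,
            # so a second buyer cannot slip in between them.
            "UPDATE inventory SET stock = stock - 1 WHERE sku = ? AND stock > 0",
            (sku,),
        )
        return cur.rowcount == 1


idaho_ok = purchase("lamp")           # takes the last lamp
south_carolina_ok = purchase("lamp")  # rejected: nothing left
```

If each region kept its own copy of `inventory` and synchronized later, both calls could succeed locally, which is exactly the oversell the example in the text describes.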

Database considerations for multi-region

Typically, organizations will synchronize data between two or more active database instances, one within each region. However, this approach presents an operational challenge and doesn’t offer optimal performance. Maintaining the synchronization is difficult, and connection issues can introduce inconsistencies between the regional databases. Further, performance is likely to be particularly poor if a region’s database node goes down, since all of that region’s reads and writes will then need to be routed to another region’s node, which suddenly has to handle double the workload.

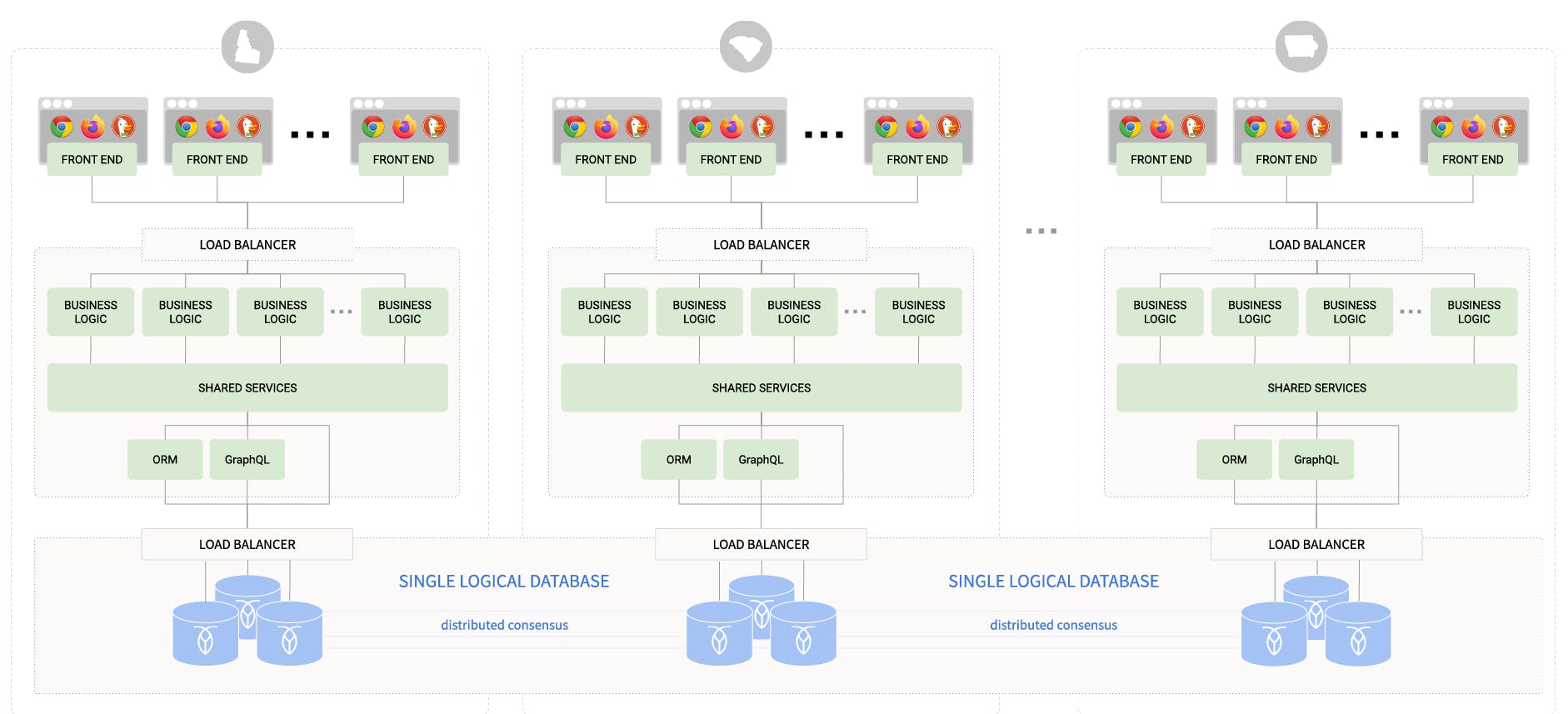

Again, CockroachDB presents a very different approach. Built from the ground up for geographic scale, CockroachDB allows for multi-region deployments to be treated like a single logical database. In other words: your application can treat the database as though it were a single Postgres instance. CockroachDB handles the geographic distribution itself, automatically.

Every node in CockroachDB (in every region) can service reads and writes and access all of the data within the cluster. It doesn’t synchronize data between independent copies after the fact; it uses distributed consensus, so a write is committed only once a majority of the replicas holding that data have agreed on it, and every node then sees the same result.
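CockroachDB's consensus layer is built on the Raft protocol. The sketch below is not Raft. It only illustrates the majority rule that consensus protocols of this kind rely on, with hypothetical function names chosen for this example.

```python
def quorum_size(replicas: int) -> int:
    """Smallest number of replicas that forms a strict majority."""
    if replicas < 1:
        raise ValueError("need at least one replica")
    return replicas // 2 + 1


def write_commits(replicas: int, acks: int) -> bool:
    """A write commits only once a majority of replicas acknowledge it."""
    return acks >= quorum_size(replicas)


def tolerated_failures(replicas: int) -> int:
    """How many replicas can be lost while a majority is still reachable."""
    return replicas - quorum_size(replicas)


three_way = {
    "quorum": quorum_size(3),
    "commits_with_two_acks": write_commits(3, 2),
    "commits_with_one_ack": write_commits(3, 1),
    "tolerated_failures": tolerated_failures(3),
}
```

With three replicas, two acknowledgements are enough to commit and one replica can be lost. Since any two majorities overlap in at least one replica, two conflicting writes cannot both be accepted.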

And again, because CockroachDB is distributed by nature, all data is replicated across multiple nodes in each region, ensuring superior performance and availability. If one node in a region goes down, read and write requests will be handled by the other nodes in that region, with no need to introduce the latency issues that come with routing traffic to another region.

You can scale later, but architect for scale now

In the days when scaling a relational database meant dealing with manual sharding, it made sense for startups to ignore the problem of database scale until they were actually likely to be confronted with it.

Today, however, distributed SQL databases such as CockroachDB can offer easy elastic scale, and building with them is as simple and affordable as building with a traditional option like Postgres. Given that, it makes sense to build from the outset with a distributed database, rather than having to deal with the challenges and headaches of trying to migrate from a legacy solution when you outgrow it down the road.

With the rise of serverless options such as CockroachDB Serverless, you can actually get a distributed database with automated elastic scale for free. With CockroachDB, it’s easy and affordable to architect your application for scale now, even if you won’t need to scale until later.

