For those who don't know yet, Panfrost is the open source OpenGL driver for the ARM Mali Midgard, Bifrost (and soon Valhall) GPU series. This driver is in a pretty good state already (see the conformance status here). On the other hand, we have PanVk, the open source Vulkan driver, and as can be guessed from the PAN_I_WANT_A_BROKEN_VULKAN_DRIVER=1 environment variable that is needed to have it loaded properly, it is still in its early days.

My internship, which started in December 2021 and wrapped up recently, was about getting PanVk closer to conformance by implementing one of the core Vulkan features: support for secondary command buffers.

But before we dive into implementation details, let's take a step back to explain what command buffers are, why Vulkan introduced this concept, and what makes secondary command buffers different from the primary counterpart.

Command buffers

For those who are not familiar with Vulkan, it is worth reminding you that the Vulkan API is all about optimizing CPU-usage and parallelizing as much of the CPU-tasks as we can to prevent the CPU from being the bottleneck in GPU-intensive applications. In order to make that happen, the new API took a major shift compared to OpenGL, which could be summed up with these three rules:

- Prepare state early, to make the command submission fast

- Re-use things as much as we can, to avoid doing the same computation over and over again

- Split tasks to take advantage of our massively parallel CPUs

In order to achieve that, Vulkan introduced the concept of command buffers: all drawing, computing, or transfer operations, which were previously issued as direct function calls in OpenGL, are now "recorded" to a command buffer and have their execution deferred until vkQueueSubmit is called. The following two pieces of pseudocode give an idea of the workflow followed by a program that performs a single draw call in OpenGL and in Vulkan:

| OpenGL | Vulkan |
|---|---|
| | `prim = vkAllocateCommandBuffer(...);` `vkBeginCommandBuffer(prim, ...);` `vkCmdPipelineBarrier(prim, ...);` `vkBeginRenderPass(prim, ...);` `vkCmdBindPipeline(prim, ...);` |
| `glBindVertexArray(...);` `glBindBuffer(...);` | `vkCmdBindVertexBuffers(prim, ...);` |
| `glBufferData(...);` | `vkCmdCopyBuffer(prim, ..., stagingBuffer, vertexBuffer, ...);` |
| `glDrawElements(...);` | `vkCmdDraw(prim, ...);` |
| | `vkEndRenderPass(prim, ...);` `vkEndCommandBuffer(prim, ...);` `vkQueueSubmit(&prim, ...);` |

OpenGL requires us to make direct changes to the OpenGL state machine by using the API calls to configure anything related to the pipeline. While this is fine for code that runs infrequently, there is room for optimization when such operations are run repetitively. Vulkan tries to achieve that.

Rather than directly jumping to command buffers, let's try to answer this: how can this extra verbosity be considered an improvement?

One reason is that most OpenGL API calls make changes to a global state machine, which makes parallelization effectively impossible (at least not on the same GL context). Moreover, before you issue a draw call, you will have to make sure the state machine has the correct state, because the state changes often when you're drawing a complex scene.

Vulkan, on the other hand, provides you with a way to record the set of operations you intend to execute and execute those operations later on. The command buffer has a state that is pretty similar to the GL state, except it is local to the command buffer itself, meaning that any number of command buffers can be recorded in parallel. Command buffers hold the hardware representation of all state changes caused by command recording.

When the user is done recording draw, compute, and transfer commands, it closes the command buffer with vkEndCommandBuffer() and makes it executable. Those command buffers can then be executed with vkQueueSubmit().

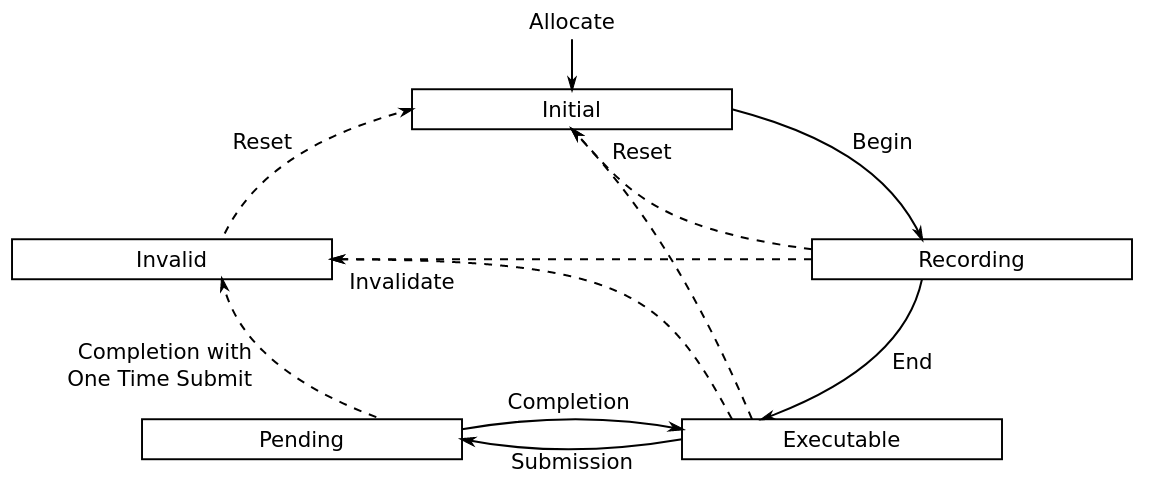

Note that I intentionally simplified the command buffer lifecycle, which actually looks more like this:

[Figure: Vulkan command buffer lifecycle.]

But the previous explanation should be good enough for the scope of this blog post.
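For readers who want the fuller picture anyway, the states and transitions described in the Vulkan specification can be modeled as a small state machine. The following is only a simplified sketch: every `toy_*` name is made up for illustration, and some transitions (for instance, the implicit reset that can happen when beginning an already recorded command buffer) are deliberately left out.

```c
#include <assert.h>
#include <stdbool.h>

/* Simplified model of the command buffer lifecycle states. */
enum toy_cb_state {
   TOY_CB_INITIAL,
   TOY_CB_RECORDING,
   TOY_CB_EXECUTABLE,
   TOY_CB_PENDING,
   TOY_CB_INVALID,
};

struct toy_cb {
   enum toy_cb_state state;
   bool one_time_submit; /* models VK_COMMAND_BUFFER_USAGE_ONE_TIME_SUBMIT_BIT */
};

/* Models vkBeginCommandBuffer(): Initial -> Recording. */
static bool toy_cb_begin(struct toy_cb *cb, bool one_time_submit)
{
   if (cb->state != TOY_CB_INITIAL)
      return false;
   cb->one_time_submit = one_time_submit;
   cb->state = TOY_CB_RECORDING;
   return true;
}

/* Models vkEndCommandBuffer(): Recording -> Executable. */
static bool toy_cb_end(struct toy_cb *cb)
{
   if (cb->state != TOY_CB_RECORDING)
      return false;
   cb->state = TOY_CB_EXECUTABLE;
   return true;
}

/* Models vkQueueSubmit(): Executable -> Pending. */
static bool toy_cb_submit(struct toy_cb *cb)
{
   if (cb->state != TOY_CB_EXECUTABLE)
      return false;
   cb->state = TOY_CB_PENDING;
   return true;
}

/* GPU is done: Pending -> Executable, or Invalid for one-time submits. */
static bool toy_cb_complete(struct toy_cb *cb)
{
   if (cb->state != TOY_CB_PENDING)
      return false;
   cb->state = cb->one_time_submit ? TOY_CB_INVALID : TOY_CB_EXECUTABLE;
   return true;
}

/* Models vkResetCommandBuffer(): any state except Pending -> Initial. */
static bool toy_cb_reset(struct toy_cb *cb)
{
   if (cb->state == TOY_CB_PENDING)
      return false;
   cb->state = TOY_CB_INITIAL;
   return true;
}
```

A command buffer that was begun with the one-time-submit flag ends up invalid after its execution completes and has to be reset before it can be recorded again, which is the main subtlety the simplified diagram hides.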

Calling vkQueueSubmit() on a list of executable command buffers leads to the driver submitting recorded commands as GPU jobs.

To sum up, Vulkan's command buffer based approach has the following advantages:

- The programmer can split command recording into multiple command buffers based on their update rate and extract maximum performance.

- Multiple command buffers can be recorded in different threads before they get collected and executed in the main thread, thus taking advantage of the parallelism of modern CPUs.

That's it for the concept of command buffer. Now, what is this secondary command buffer that we want to support in PanVk, and how does it differ from the primary command buffer we just described?

Secondary command buffers

The main difference between secondary and primary command buffers is the fact that secondary command buffers cannot be executed directly (you cannot pass those to vkQueueSubmit()). They must be referenced or copied (what is done depends on the GPU architecture and the driver) into primary command buffers with a vkCmdExecuteCommands() call. Think of vkCmdExecuteCommands() as a way to replay all of the commands that were recorded on a secondary command buffer into the primary command buffer, except it is expected to be much faster than manually calling the vkCmd*() functions on the primary command buffer.

There is also one particular mode that allows the secondary command buffers to reference objects that are part of the primary command buffer, like the buffers that will be rendered to when a draw is issued. Such secondary command buffers are created by passing the VK_COMMAND_BUFFER_USAGE_RENDER_PASS_CONTINUE_BIT flag when vkBeginCommandBuffer() is called. This trick allows one to reuse the same secondary command buffer in various primary command buffers that have different context, thus improving re-usability of secondary command buffers.

When we start dealing with a lot of primary command buffers to render our scenes, it is often observed that many commands remain common for multiple primary command buffers. Hence we can move the common commands into secondary command buffers. This also results in a performance boost because Vulkan API calls are converted to hardware representation only once, as mentioned previously.

The ability to record secondary command buffers and reference them from primary ones also helps parallelize the command buffer construction process: several threads can build secondary command buffers, which are then collected and aggregated into the primary command buffer using vkCmdExecuteCommands() and finally passed to the GPU with vkQueueSubmit().

By now, it should be clear that secondary command buffers are yet another way to get the best out of our GPUs while minimizing the CPU load. This makes it very important for Vulkan drivers to implement secondary buffers as efficiently as they can. In the following sections, we will explain how we added support for that feature to PanVk.

How do we add secondary command buffers to PanVk?

We have seen how secondary command buffers can be useful to optimize things, but this relies on the assumption that GPUs have the ability to reference, or at worst, copy a command buffer without too much pain. Unfortunately, Mali GPUs don't fall in that category. That being said, since secondary command buffers are part of the core features, we don't really have a choice: we have to support them. So, we came up with a two-step plan to emulate secondary command buffers. My goal was to implement both steps and compare their performance.

- High-level command recording

- Low-level command recording

High-level command recording

As we have seen earlier, vkCmdExecuteCommands() is supposed to replay the commands recorded in the secondary command buffer onto the primary one. Since we don't have a way to support secondary command buffers natively, a straightforward but inefficient approach would be to replay all the vkCmd*() function calls onto our primary command buffer.

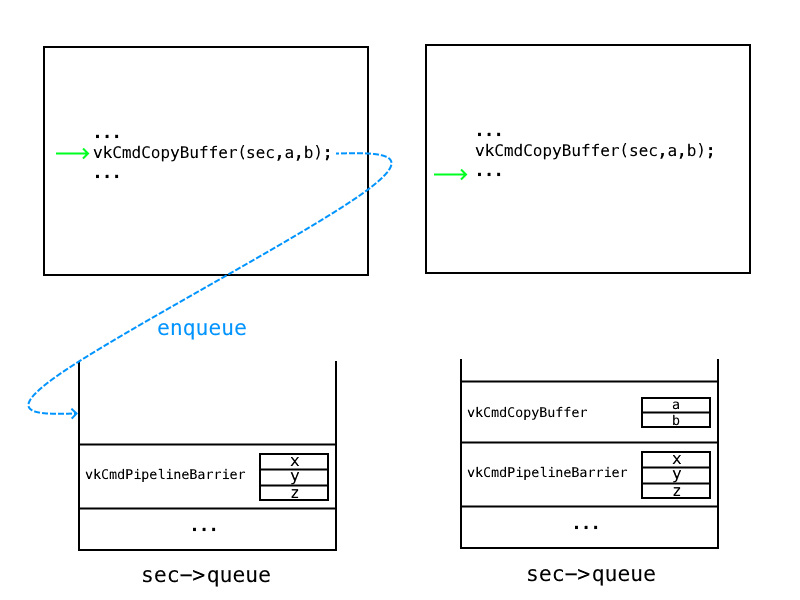

One way of doing this would be to maintain a list of commands to be replayed in their software form. When a command is recorded into a secondary command buffer, the command and its arguments are copied and added to a list that is part of the secondary command buffer.

Command queue

[Figure: Using copies to record Vulkan secondary command buffers.]

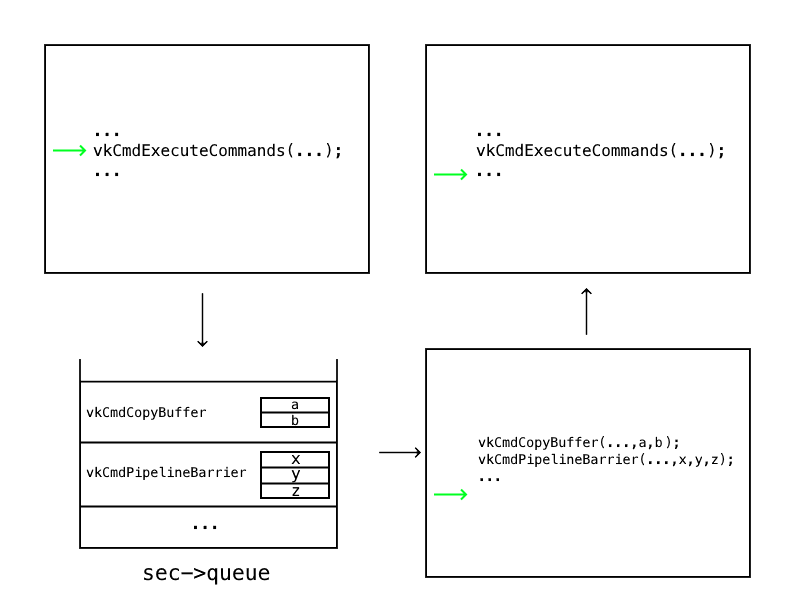

Whenever vkCmdExecuteCommands() is called on a primary command buffer, all the secondary command buffers will replay their recorded state changes onto the primary command buffer. This is done by calling vkCmd*() on the primary command buffer with the arguments we saved during the recording step.

[Figure: Executing copied Vulkan secondary command buffers.]
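The record-then-replay idea can be sketched in a few lines of plain C. This is a toy model, not the vk_cmd_queue API: all `toy_*` names, the two command types, and the fixed-size arrays are assumptions made for illustration. A secondary command buffer only stores commands in software form, and executing it on a primary simply calls the regular recording entry points again.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define TOY_MAX_CMDS 16
#define TOY_MAX_JOBS 16

enum toy_cmd_type { TOY_CMD_BIND_PIPELINE, TOY_CMD_DRAW };

/* One recorded command and its argument, kept in software form. */
struct toy_cmd {
   enum toy_cmd_type type;
   uint32_t arg;
};

struct toy_cmdbuf {
   bool secondary;
   /* Secondary only: the software command list. */
   struct toy_cmd queue[TOY_MAX_CMDS];
   uint32_t queue_len;
   /* Primary only: current state and the "jobs" emitted so far. */
   uint32_t bound_pipeline;
   uint32_t job_pipeline[TOY_MAX_JOBS];
   uint32_t job_vertex_count[TOY_MAX_JOBS];
   uint32_t job_count;
};

static void toy_enqueue(struct toy_cmdbuf *cb, enum toy_cmd_type type,
                        uint32_t arg)
{
   assert(cb->queue_len < TOY_MAX_CMDS);
   cb->queue[cb->queue_len++] = (struct toy_cmd){ type, arg };
}

static void toy_cmd_bind_pipeline(struct toy_cmdbuf *cb, uint32_t pipeline)
{
   if (cb->secondary)
      toy_enqueue(cb, TOY_CMD_BIND_PIPELINE, pipeline); /* just remember it */
   else
      cb->bound_pipeline = pipeline; /* update the command buffer state */
}

static void toy_cmd_draw(struct toy_cmdbuf *cb, uint32_t vertex_count)
{
   if (cb->secondary) {
      toy_enqueue(cb, TOY_CMD_DRAW, vertex_count);
      return;
   }
   /* On a primary, a draw snapshots the current state into a job. */
   assert(cb->job_count < TOY_MAX_JOBS);
   cb->job_pipeline[cb->job_count] = cb->bound_pipeline;
   cb->job_vertex_count[cb->job_count] = vertex_count;
   cb->job_count++;
}

/* Replay every command saved in the secondary onto the primary. */
static void toy_cmd_execute_commands(struct toy_cmdbuf *primary,
                                     const struct toy_cmdbuf *secondary)
{
   assert(!primary->secondary && secondary->secondary);
   for (uint32_t i = 0; i < secondary->queue_len; i++) {
      const struct toy_cmd *cmd = &secondary->queue[i];
      switch (cmd->type) {
      case TOY_CMD_BIND_PIPELINE:
         toy_cmd_bind_pipeline(primary, cmd->arg);
         break;
      case TOY_CMD_DRAW:
         toy_cmd_draw(primary, cmd->arg);
         break;
      }
   }
}
```

Note that the translation to hardware form happens again on every replay, which is why this approach is simple but not the most efficient one.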

Luckily, we already have a software-based implementation of Vulkan in Mesa: lavapipe, and this implementation already has a pretty generic infrastructure to record Vulkan commands: vk_cmd_queue. So all we had to do was re-use it and add the ability to replay commands on a primary command buffer, as opposed to lavapipe, which just references the software command list and executes all those commands when vkQueueSubmit() is called.

If you are interested in knowing more about this high-level secondary command buffer recording implementation, I suggest looking at this merge request.

Despite being a sub-optimal solution, this high-level implementation based on software command queues was merged to allow Dozen (the Vulkan-on-D3D12 layer) to support secondary command buffers, since the concept of bundle in D3D12 doesn't quite match the secondary command buffer one. We decided to hook up the high-level secondary command buffer implementation in PanVk since it was available for free, and would help us get better test coverage until we have a proper native implementation.

Following this, we continued our plan to develop a secondary command buffer emulation that deals with them at a lower level.

Low-level command recording

As a prerequisite, we'll have to see how PanVk deals with command buffers internally.

The command buffer internal state changes with every vkCmd* call except vkCmdDraw and vkCmdDispatch. When vkCmdDraw or vkCmdDispatch is called, it triggers the emission of Mali jobs, which instruct the GPU to execute a draw or compute operation. Those jobs get passed context descriptors that encode the internal command buffer state at the time the draw or dispatch happens.

What is a job?

A job is simply a hardware descriptor. If you're interested in knowing what a job descriptor looks like, you can have a look at these XML files, which contain an exhaustive definition of all sorts of hardware descriptors decoded by the GPU.

Jobs can be batched together to avoid unnecessary CPU -> GPU round-trips. Batching is achieved by forming a job chain whose first element is passed to the GPU.
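As a rough illustration of what forming a job chain means, the sketch below links made-up job descriptors through a "next" GPU address and keeps track of the first one. The `toy_job` layout is hypothetical and far simpler than the real descriptors defined in the XML files.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical, heavily simplified job descriptor. */
struct toy_job {
   uint64_t next; /* GPU address of the next job, 0 ends the chain */
   uint32_t type; /* made-up job type identifier */
};

struct toy_chain {
   uint64_t first_job;       /* GPU address handed to the GPU at submit time */
   struct toy_job *last_job; /* CPU pointer to the tail, used for linking */
};

/* Append a job that lives at CPU address `cpu` and GPU address `gpu`. */
static void toy_chain_add(struct toy_chain *chain, struct toy_job *cpu,
                          uint64_t gpu)
{
   cpu->next = 0;
   if (chain->last_job)
      chain->last_job->next = gpu; /* link the previous tail to this job */
   else
      chain->first_job = gpu;      /* first job of the chain */
   chain->last_job = cpu;
}
```

Only `first_job` needs to be handed over at submission time; the rest of the batch is discovered by following the `next` links.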

How do we pass Mali jobs to the GPU?

Mali jobs need to be read and written by both the CPU and the GPU. The GPU has to read and execute the job, and can then update it to reflect whether it succeeded or failed. The CPU also needs to have access to those jobs, because they are forged by the driver, whose code is executed on the CPU. The abstraction providing GPU-readable+writeable/CPU-writeable memory is commonly called a buffer object, which is often abbreviated to BO. Those BOs are created through a dedicated ioctl() provided by the kernel part of the GPU driver (in the case of panfrost, it's named DRM_IOCTL_PANFROST_CREATE_BO, with DRM_IOCTL_PANFROST_MMAP_BO being used to map the BO on the CPU side).

As memory allocation can be quite time-consuming, drivers tend to allocate big blocks of memory they pick from when they need smaller pieces. In this regard, PanVk is no different from other drivers. It allocates memory pools (mempools) and allocates the hardware descriptors to be passed to the GPU from such pools.
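The pooling idea boils down to a bump allocator on top of one big block. The sketch below is an assumption-laden toy (the `toy_pool` and `toy_ptr` names are invented, and it never grows), but it shows how each allocation yields a matching CPU pointer and GPU address at the same offset.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* A CPU pointer and the GPU address of the same piece of memory. */
struct toy_ptr {
   void *cpu;
   uint64_t gpu;
};

/* One big block that smaller allocations are carved from. */
struct toy_pool {
   uint8_t *cpu_base;
   uint64_t gpu_base;
   size_t size;
   size_t used;
};

/* Carve `size` bytes out of the pool; `align` must be a power of two. */
static struct toy_ptr toy_pool_alloc(struct toy_pool *pool, size_t size,
                                     size_t align)
{
   struct toy_ptr ptr = { NULL, 0 };
   size_t offset = (pool->used + align - 1) & ~(align - 1);

   /* A real pool would allocate a fresh BO here instead of failing. */
   if (offset > pool->size || size > pool->size - offset)
      return ptr;

   pool->used = offset + size;
   ptr.cpu = pool->cpu_base + offset;
   ptr.gpu = pool->gpu_base + offset;
   return ptr;
}
```

Because an allocation is just an offset bump, it is much cheaper than going through the kernel for every descriptor.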

Copying secondary command buffer jobs to primary command buffers

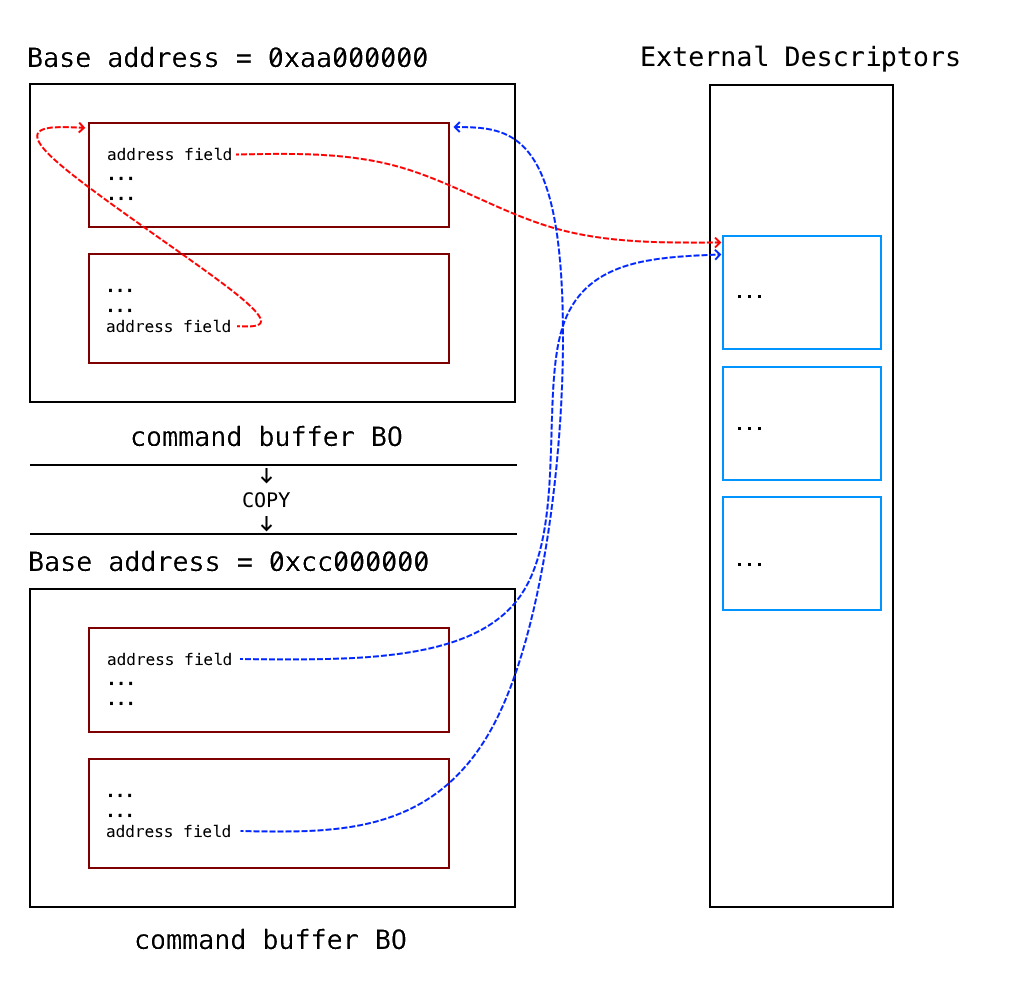

Now let's get back to our initial goal for a moment. We said that jobs are just hardware descriptors we put in a memory region that is shared with the GPU and pass those to the GPU when we want to execute something. So, how difficult would it be to copy jobs attached to a secondary command buffer to some memory pool that belongs to the primary command buffer? On most GPUs it is more or less how secondary command buffers are implemented. The command stream encodes state changes and actions, and can be copied around. But Mali GPUs are different. If you look at hardware descriptor definitions in those xml files, you might notice that jobs are not the only descriptors we have at hand. In fact, jobs reference other descriptors encoding the context attached to those jobs. For instance, jobs encoding a draw (yes, a draw requires more than one job :-)) will point to a framebuffer descriptor encoding the render target and depth/stencil buffers to update.

Now, simply copying these jobs won't work. Several issues arise if we do this:

- We need CPU addresses for reading, but the linked list is formed using GPU addresses. Also, the descriptor linked list may spread across multiple BOs, which makes the process of converting to a CPU address more complex. Hence, we'll have to track each job carefully while doing the memcpy.

- BOs have CPU mappings flagged uncached. Each read on such memory would hurt us pretty badly, which means the secondary -> primary BO copy would be super slow.

- The type=address fields hold absolute GPU addresses to other descriptors. When we memcpy these, it is essential to relocate them according to the destination base address.

[Figure: Hardware representation of nested Vulkan command buffers.]
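To make the first issue more concrete, here is a minimal sketch of what converting a GPU address back to a CPU pointer involves when descriptors are spread over several BOs. The `toy_bo` structure and the linear search are assumptions for illustration only, not how PanVk tracks its BOs.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical view of a buffer object: where it sits for the GPU and CPU. */
struct toy_bo {
   uint64_t gpu; /* GPU base address */
   uint8_t *cpu; /* CPU mapping of the same memory */
   size_t size;  /* size in bytes */
};

/* Find the BO containing `addr` and translate it to a CPU pointer.
 * Returns NULL when the address doesn't belong to any known BO. */
static void *toy_gpu_to_cpu(const struct toy_bo *bos, size_t bo_count,
                            uint64_t addr)
{
   for (size_t i = 0; i < bo_count; i++) {
      if (addr >= bos[i].gpu && addr - bos[i].gpu < bos[i].size)
         return bos[i].cpu + (addr - bos[i].gpu);
   }
   return NULL;
}
```

Every pointer followed while walking the descriptor list would need such a lookup, on top of the cost of reading from uncached mappings.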

We therefore implemented a solution that solves these issues with relative simplicity:

CPU-only memory blob for secondary command buffers

If we can force secondary command buffers to store all their jobs in a single memory blob, we can copy the entire buffer containing jobs of the secondary command buffer into a primary command buffer's memory buffer.

Moreover, a secondary command buffer does not need to be visible to the GPU since the GPU never accesses that memory. This would also speed up memcpy calls because CPU caching is enabled.

Let's defer tackling the third issue until after we understand the implementation of CPU-only BOs.

Hence, the first step was to add CPU-only BOs to panfrost. We keep a single CPU-only BO in a mempool, if it requests one.

```c
struct panfrost_ptr {
   /* CPU address */
   void *cpu;

   /* GPU address */
   mali_ptr gpu;
};

struct panfrost_bo {
   ...
   /* Mapping for the entire object (all levels) */
   struct panfrost_ptr ptr;
   ...
};

struct panvk_pool {
   ...
   /* CPU-visible pool, only used for secondary command buffers */
   bool cpu_only;
   struct panfrost_bo cpu_bo; // cpu -> mmapped, gpu -> set to a fake base address
};
```

After adding the CPU-only BO functionality, secondary command buffers were configured to hold three CPU-only pools: a descriptor pool, a TLS pool and a varyings pool, with fake GPU base addresses of 0xffffff00000000, 0xfffffe00000000 and 0xfffffd00000000, respectively. These addresses are unlikely to be reached since the kernel driver allocates GPU addresses sequentially from the bottom. This gimmick comes in handy for a small trick we use when dealing with relocations.

Now, job descriptors for a vkCmdDraw or vkCmdDispatch on a secondary command buffer will be emitted to a memory region with efficient CPU access.

The next course of action is to deal with CmdExecuteCommands.

When CmdExecuteCommands is called, we can allocate an extra BO in the memory pool of the primary command buffer and copy the BO of the secondary command buffer into it. The job descriptors it contains are effectively transferred. But here comes the third issue: the addresses they contain are no longer valid after the BO is copied.

To fix this, we need to patch all address fields, which we do using "relocations":

Pointer relocations

Since we have a single BO for all the jobs, the fixing (also known as relocation) can be of two kinds:

- An address which points into one of the three mempools whose lifetime is associated with the secondary command buffer. Remember, these are the ones we set a fake GPU base address for.

- An address which points to some external attachment, like a framebuffer.

The second kind has no option but to be handled on a case-by-case basis.

Now we can use a trick for the first case. A pointer needs to be relocated if the address it holds is in the range of a CPU-only BO. A pointer into a CPU-only BO holds an illegal address value. Since we know this illegal range, we can subtract its base address to get an offset into the BO. This offset can then be added to the base address of the destination BO. Writing the resulting address back to the pointer legalises it. Let's see how this was implemented in code.

struct panvk_reloc_ctx is used to keep track of the pools which we would need to access while relocating.

```c
struct panvk_reloc_ctx {
   struct {
      struct panvk_cmd_buffer *cmdbuf; // Track cmdbuf pool
      struct panfrost_ptr desc_base, varying_base; // In practice, we only needed to track the cmdbuf desc and varying bases
   } src, dst;
   uint32_t desc_size, varying_size;
   uint16_t job_idx_offset;
};
```

The main relocation logic can be factored out into a simple macro.

```c
#define PANVK_RELOC_COPY(ctx, pool, src_ptr, dst_ptr, type, field)          \
   do {                                                                     \
      mali_ptr *src_addr = (src_ptr) + pan_field_byte_offset(type, field);  \
      /* Check if the pointer is in range */                                \
      if (*src_addr < (ctx)->src.pool ## _base.gpu ||                       \
          *src_addr >= (ctx)->src.pool ## _base.gpu + (ctx)->pool ## _size) \
         break;                                                             \
      /* Calculate the byte offset of the required field */                 \
      mali_ptr *dst_addr = (dst_ptr) + pan_field_byte_offset(type, field);  \
      /* Pointer fixup */                                                   \
      *dst_addr = *src_addr - (ctx)->src.pool ## _base.gpu +                \
                  (ctx)->dst.pool ## _base.gpu;                             \
   } while (0)
```

The relocation logic is pretty simple: We subtract the illegal base address from it and add the base address of the BO we are copying to.

```c
// Sample call:
static void
panvk_reloc_draw(const struct panvk_reloc_ctx *ctx, void *src_ptr, void *dst_ptr)
{
   ...
   PANVK_RELOC_COPY(ctx, desc, src_ptr, dst_ptr, DRAW, position);
   ...
}
```

Some address fields always need to be set to external addresses that belong to the primary command buffer and are not populated when the secondary command buffer is recorded. So we created another macro for that purpose.

```c
#define PANVK_RELOC_SET(dst_ptr, type, field, value)                        \
   do {                                                                     \
      mali_ptr *dst_addr = (dst_ptr) + pan_field_byte_offset(type, field);  \
      /* Fix up unconditionally */                                          \
      *dst_addr = value;                                                    \
   } while (0)
```
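To see both kinds of fixup working end to end without any Mesa infrastructure, here is a self-contained toy version. Everything in it is an assumption made for illustration: the `toy_draw` descriptor has only two address fields, the blob contains nothing but such descriptors, and plain functions stand in for the two macros.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical descriptor with two address fields. */
struct toy_draw {
   uint64_t position;    /* may point into the secondary's own pool */
   uint64_t framebuffer; /* external: only known by the primary */
};

struct toy_reloc_ctx {
   uint64_t src_base; /* fake GPU base address of the CPU-only pool */
   uint64_t dst_base; /* GPU address of the copy in the primary's pool */
   uint64_t size;     /* size of the source pool in bytes */
};

/* Same idea as PANVK_RELOC_COPY: rebase the address only if it points
 * into the source pool, otherwise leave the copied value alone. */
static void toy_reloc_copy(const struct toy_reloc_ctx *ctx,
                           const uint64_t *src_field, uint64_t *dst_field)
{
   if (*src_field < ctx->src_base ||
       *src_field >= ctx->src_base + ctx->size)
      return;
   *dst_field = *src_field - ctx->src_base + ctx->dst_base;
}

/* Same idea as PANVK_RELOC_SET: overwrite the field unconditionally. */
static void toy_reloc_set(uint64_t *dst_field, uint64_t value)
{
   *dst_field = value;
}

/* Copy the secondary's descriptors in one go, then patch the addresses. */
static void toy_execute_secondary(const struct toy_reloc_ctx *ctx,
                                  const struct toy_draw *src,
                                  struct toy_draw *dst, size_t draw_count,
                                  uint64_t framebuffer)
{
   memcpy(dst, src, draw_count * sizeof(*src));
   for (size_t i = 0; i < draw_count; i++) {
      toy_reloc_copy(ctx, &src[i].position, &dst[i].position);
      toy_reloc_set(&dst[i].framebuffer, framebuffer);
   }
}
```

With a fake source base of 0xffffff00000000 and a destination base of 0x20000, a `position` of fake base + 0x40 becomes 0x20040 in the copy, while an address outside the fake range is carried over unchanged.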

With this and lots of debugging, we got all deqp secondary command buffer tests passing!

perf check

perf helps us profile our code by analyzing the rough percentage of total CPU cycles consumed by different functions in our binary. Secondary command buffers are generally recorded once and executed multiple times. Hence it is important to find out which implementation of vkCmdExecuteCommands performs better.

We have two independently developed implementations to compare. However, we can't really compare them when they're tested in different runs, because the recording code is completely different, which induces a bias on the percentage you get:

| | implem 1 | implem 2 |
|---|---|---|
| record in cycles | X CPU cycles | Y CPU cycles |
| replay in cycles | N CPU cycles | M CPU cycles |
| total in cycles | A CPU cycles | B CPU cycles |
| replay in % | N / A | M / B |

If implem1's record cycles are significantly higher than implem2's, the total number of cycles will grow accordingly, and when you calculate the replay in %, you might get a number that's lower in implem1 even though it's actually performing worse in reality (spending more CPU cycles in implem1's replay logic than in implem2's). That's exactly what happens in our case. The recording logic in the native case is heavier than in the sw-queue version, so comparing percentages from two different runs doesn't really work.
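A quick worked example shows the bias. The cycle counts below are invented purely for illustration; only the way the percentage is computed (replay divided by total) comes from the table above.

```c
#include <assert.h>

/* Made-up cycle counts for one profiling run of one implementation. */
struct toy_profile {
   unsigned record_cycles;
   unsigned replay_cycles;
};

/* Share of the total cycles spent in the replay logic, in percent. */
static double toy_replay_percent(struct toy_profile p)
{
   return 100.0 * p.replay_cycles / (p.record_cycles + p.replay_cycles);
}
```

With a hypothetical implem1 spending 900 cycles recording and 100 replaying, and an implem2 spending 100 recording and 50 replaying, implem1 reports 10% while implem2 reports about 33%, even though implem1's replay costs twice as many cycles. Measuring both in the same run gives them a common total, so the percentages become directly comparable.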

To get reliable results we need to get both implementations running in one execution, which can be achieved trivially.

The command queue logic of the high-level implementation was completely independent of the CPU-only BO logic of the low-level implementation, so the commits of one branch could easily be rebased onto the other. I named the two CmdExecuteCommands implementations CmdExecuteCommandsStep1 and CmdExecuteCommandsStep2, and called both of them inside the CmdExecuteCommands handler. This is logically incorrect, as we will get duplicate jobs, but it works fine for profiling!

Next we modify the record_many_draws_secondary_1 test in deqp. The test records commands into a secondary command buffer and then executes them onto a primary command buffer. The commands recorded are pretty heavy, consisting of 128 * 128 draw calls. To push things further, the test was modified to call CmdExecuteCommands twenty times instead of once. This should extensively exercise the two implementations and let us decide between the two:

```
- 22.28% 0.00% deqp-vk libvulkan_panfrost.so [.] panvk_v7_CmdExecuteCommands
   - panvk_v7_CmdExecuteCommands
      + 13.33% panvk_v7_CmdExecuteCommandsStep1
      + 5.92% panvk_v7_CmdExecuteCommandsStep2
      + 2.94% __memcpy_generic
```

What's __memcpy_generic doing there? For some reason, perf seems to think that the memcpy calls inside CmdExecuteCommandsStep2 aren't part of CmdExecuteCommandsStep2. Essentially, actual time spent in CmdExecuteCommandsStep2 should include time spent in __memcpy_generic.

We can see that the low-level relocation method (8.86%, that is 5.92% plus the 2.94% spent in __memcpy_generic) outperforms the high-level software queue method (13.33%). To confirm that our findings are consistent across different loads, let us patch the test with different numbers of CmdExecuteCommands calls and get perf numbers for them.

| No of CmdExecuteCommands calls | High-level Command Queues | Low-level Pointer Relocations |
|---|---|---|
| 20 | 13.33% | 8.86% |
| 15 | 15.04% | 9.91% |
| 10 | 15.98% | 10.38% |
| 5 | 12.00% | 7.73% |
| 1 | 3.37% | 2.09% |

The perf results show that the low-level implementation outperforms the high-level implementation by roughly 1.5x to 1.6x in these runs!
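Since both percentages in a row come from the same run, they share the same total, so their ratio is also the ratio of CPU cycles spent. The small sketch below just redoes that division for every row of the table; the `toy_*` names are made up and the numbers are copied from the table above.

```c
#include <assert.h>
#include <stddef.h>

/* One row of the perf table: percentages measured in a single run. */
struct toy_row {
   unsigned calls;
   double high_level_pct;
   double low_level_pct;
};

static const struct toy_row toy_rows[] = {
   { 20, 13.33, 8.86 },
   { 15, 15.04, 9.91 },
   { 10, 15.98, 10.38 },
   { 5, 12.00, 7.73 },
   { 1, 3.37, 2.09 },
};

/* How many times more CPU time the high-level replay takes. */
static double toy_ratio(const struct toy_row *row)
{
   return row->high_level_pct / row->low_level_pct;
}

/* Smallest and largest ratio over all rows of the table. */
static void toy_ratio_range(double *min, double *max)
{
   *min = *max = toy_ratio(&toy_rows[0]);
   for (size_t i = 1; i < sizeof(toy_rows) / sizeof(toy_rows[0]); i++) {
      double r = toy_ratio(&toy_rows[i]);
      if (r < *min)
         *min = r;
      if (r > *max)
         *max = r;
   }
}
```

The smallest ratio comes from the 20-call row and the largest from the single-call row.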

However, we missed one thing: memory usage. I am using time -v to compare memory usage between the two implementations. The entries show Maximum resident set size (kbytes) for them.

| No of CmdExecuteCommands calls | High-level Command Queues | Low-level Pointer Relocations |
|---|---|---|
| 20 | 574384 | 595908 |
| 10 | 387368 | 405436 |
| 1 | 220544 | 240612 |

The difference in memory usage is roughly 20 MB in all three cases. So we are incurring a memory overhead with the native implementation, but the performance boost is considerable. While making a clear decision between the two is tough, in most cases the native implementation should perform better for a more or less tolerable memory usage penalty. The overhead could be reduced by compressing buffers or similar solutions, but that is out of the scope of this project.

Wrapping up

My first experience with Collabora was as a GSoC student under xrdesktop, working on a 3D rendering project. This internship allowed me to delve into graphics driver programming with Mesa and understand how these wonderful little pieces of engineering work under the hood. The overall experience has been fantastic, with me getting to learn from experienced engineers, ready to assist me at a moment's notice.

For more information on Collabora's internships, keep an eye on our careers page!

The code

You can check out the code written during this internship by having a look at the merge requests below:
