Recommendation systems have been around for a long time. Also, a massive amount of literature has been written around Recommendation systems. So, in this edition of my newsletter, I thought I would cover some of the not-so-spoken areas of recommendation systems. I would not cover any algorithms but just some of the patterns that I have observed while working on the same or by talking with people who have worked on large scale recommendation systems.

-

Framing the recommendation problem - A lot of companies can be carried away by the hype around recommendation systems at various companies. Everyone wants to do their recommendation system like Netflix, Youtube, Spotify, Airbnb, Pinterest, Amazon etc. However, there is very little thought given to what the company is trying to accomplish using their recommendation system.

Is the company trying to solve for discovery, or for engagement, or for something else? If this is not thought through, the team which is trying to solve for the use case is left with very vague problem statements and hence can go around in circles trying to solve for an unknown problem. If Netflix, shows certain sections with recommended movies, while Spotify tries to create playlists which a person will like, your company might be trying to accomplish something entirely different. Framing the recommendation usecase can help the team come up with the right metrics and reward function.

-

Baselines - Heuristic Baselines are a great starting point in multiple ways. A heuristic baseline can be something as simple as showing the most popular items. Completely random baseline is too un-informed and might not give you great information about the learning capabilities of the algorithm. Running a logistic regression might be too informed a baseline and can become a long drawn process. Heuristic baselines can be status quo too - By status quo, I mean - If you want to show top 3 items in ranked order through your recommendations, what were you showing as top 3 items on you app before any recommendations kicked in. Recency/Frequency based baselines are commonly used too. Baselines help the team understand what the model is learning at the top of what is already there. They also help you check all the basic pipelines and contracts which are anyway needed for the recommendations to work. In addition to all this, informed baselines can be your soft landing spot in case you recommendation pipelines/systems are failing in the future. Keep a mechanism ready to send all the traffic to this informed baseline.

-

Metrics,Reward Functions - As discussed in the first point, framing your problem statement is very important . The next most important things is framing your metrics and reward functions. While, it might seem obvious, this is extremely hard. Offline metrics of ML algorithms don’t match up or correlate with you online metrics when the system is put in front of the consumer. Getting them in sync requires lots of thinking through. It also needs you to run a lot of experiments, simulations and log online/offline metrics together to understand the patterns. Online metrics are often times delayed in nature making them further hard to correlate with offline metrics. Relevance is not equal to Revenue or Retention. It can be a non-linear combination of multiple metrics. Lot of experimentation, simulation, analysis will be required to create reward functions which correlate with your metric of interest.

Evaluation of recommendation system is a much talked about area. Eugene Yan recently came out with an important blog post which talks about recommendation systems as an interventional system, which is very close to what most ML teams start thinking about as their recommendation systems become mature. As mentioned in my previous newsletters, logging all offline and online metrics in one place is a great way to go about metrics. This is an extremely involved area and the team needs to spend lots of time on this. Simulations and counterfactual evaluations are another involved area to look at. If you thought, getting to such metrics was the hard part, you are extremely wrong. Getting business stakeholders aligned on such metrics is 10X harder than actually creating such metrics.

-

Slow and Fast moving metrics - This is an extension to the previous point but deserves a section of its own. Some of the business metrics can be very slow. Finding fast moving proxies for such metrics is extremely important or else the rate at which you can innovate will be very slow. Additionally, there are metrics which are extremely sensitive and others which are not. Finding a sensitive metric which is somewhat causal of your slower moving metric of interest is a big win. Having an initial baseline of all the metrics can very useful in eyeballing the performance of your recommendation systems.

-

Lower number of items, simpler algorithms - Not every company has millions of SKU’s like Amazon, Spotify, Netflix, Google etc. In case you have a limited number of items, the thumb rule is that - Simpler algorithms will often beat complicated algorithms.

The informed baseline will be really hard to beat. Multi Armed Bandit based explore-exploit methods will almost take you the distance. In my own workflow, I would like to have a MAB as the first model after the informed baseline is in place, no matter what. In case, you have smaller number of items in the 100’s, Multi armed bandits will be extremely competitive.

-

Realtime Inferencing and On device compute - Do you need real-time inferencing or are you okay with batch inferencing? Also, how much additional upside does the real time inferencing give to you? In case, your best model is a lightweight model or a lightweight user level model or you have few items (in 100’s), you might want to push the computation of the model to the device. This will not only save you huge infrastructure costs, it will also help latencies. There are a few companies which are working on - On device compute or Federated Learning. These companies might help you get there fast. Almost, all companies work on near real time inferencing through async processes to make their pipeliness and inferencing a lot more resilient.

-

RecsysOps is real hard -

Source - https://ift.tt/r4FWkYf

While there has been a lot of talk about how MLOps is hard, RecsysOps is a different breed altogether. If you have 10-100’s of millions of users and 10’s of thousands or millions of items, recsysops is a nightmare. In an ideal recsysops system, you will need the best of all things good MLOps sytems provide an more. Some of the most important things I would look at while creating a RecsysOps system would be -

-

Feature Stores with lots of cache

-

Metric Beds with visualization layers

-

Prediction Service templates which can be quickly clones for any model - This is another underlooked point. The two major ways in which large scale prediction service is written are - 1. Write the predictions as a values with the user id as a key. 2. Write all the features (user,item etc) into the feature store. A dot product or some other cheap operation can be done on the top of such features as part of the prediction service.

Compute heavy models requires lots of hardwork to optimize as a prediction service. ONNX runtimes are used very often for prediction services as models grow in complexity. Whether you use the 1st option or the 2nd option is a tradeoff between how many items are there on the prediction (how much of cache will it take up) vs how much latency is the real time prediction taking up. Recommendation systems almost always work in async. The worst thing that can happen in case of an async system will be stale recommendations. Okay, that was an exaggeration - Worse can happen! Chip Huyen wrote a very good blog about real time machine learning at the start of the year which talks about the architechtures being used at various companies.

-

System health check metrics which can be visualized alongside the metric bed visualization

-

Distributed Training and distributed inferencing support. Distributed ML systems are hard enough. Add slow moving business metrics into the mix and you have the complete recipe for nightmare.

-

Keyed predictions is a design pattern you might want to look for.

-

DAG management systems such as airflow is a pre-requisite

-

Lots of other moving parts. The architecture which for a long time was considered two tower is very well illustrated here.

-

-

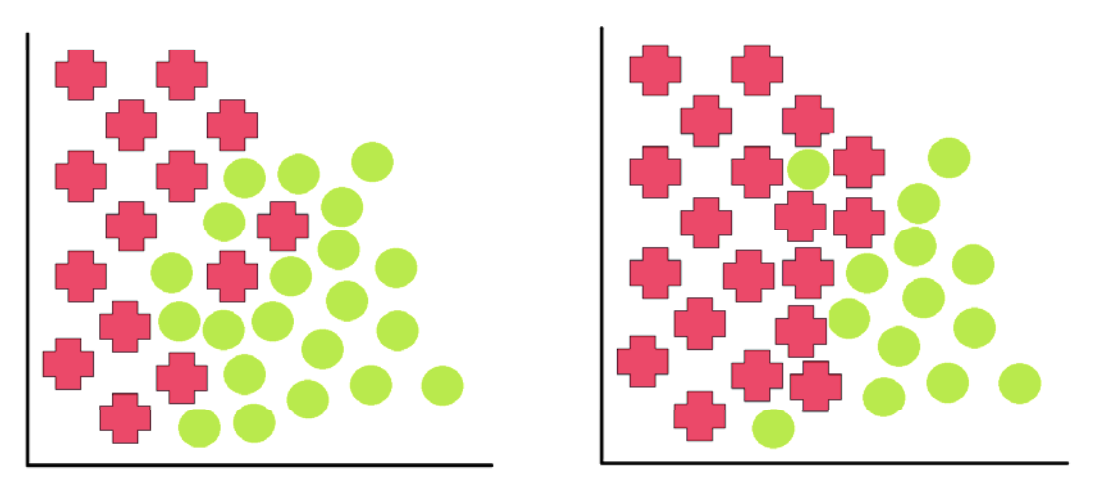

Data Distribution Shifts - In a fast moving company, data drifts happen. If you are a content company, new content and new kind of content is never more than a week away.

Source - https://ift.tt/2zHU8we

The best of models find it very hard to generalize on data which has shifted in distribution. There is almost no full proof tool for detecting data distribution drifts but basics such as checking the distributions of the feature space, checking the distribution of predictions etc must be automated. Triggering alerts on the basis of such drifts is a good idea

-

Residual effects and Novelty effects - Other than the mention about residual and novelty effects in experimentation books, not too many people are paying attention to the residual and novelty effects of recommendation systems. While Novelty effect can subside by doing an experiment over an extended period of time, residual effects are hard to detect and to deal with. Residual effects tend to play a very large role in the performance of recommendation systems. Rehashing your users in novel ways to overcome such effects has been an ongoing area of research at multiple companies.

-

Request Response Logs to create training data - Evaluating the performance of your current system and retraining the models at optimal frequency are important areas. While, building the contract between the backend sytems and the Recommendation systems, logging and parsing request response is good way to create training data for future retraining of your models. Even if you create a few new features, you will need to backfill only a limited number of features. This is a much understated area. Everyone is probably doing it but in case anyone is missing it, please make a note.

-

Matrix Factorization techniques, Word2vec kind of techniques - If you can apply Matrix factorization in a meaningful way, it will almost always work. In case of fresh items coming up, companies have been known to roll it out to a few consumers just to capture interaction data, so that they can roll it out to other consumers in a more personalized way. There is a tradeoff between freshness of items vs how personalized they are. Which are the few users who should get those fresh items and give us the most important signals is an active area of interest for me.

Additionally, I have talked with multiple companies which claim to create item vectors basis the sequence in which items are consumed by their consumers. For example, for an ecommerce company, if the sequence of consumption of items is A→B→C→D, they try to predict C basis inputs A,B,D. This is very similar to how wordvectors are trained. This way, they get embedding for every item. The two techniques mentioned here have been go to techniques which have worked for multiple companies and deserve a trial.

-

Explore and Exploit Dilemma - Explore and exploit is a well known dilemma. At the beginning of a user’s lifetime on your application, you might try to explore her interest more but as you get more signals about the user, you start exploiting their interests. Even when you have enough signals about a user,you keep some part of your recommendation as exploration content so that you can keep track of a user’s changing interest pattern. This is basic. However, there is a lot of formulation to be done on the exploration problem for cold and sparse users. How can you explore faster? Is UCB the fastest way to explore user interest?

Reward functions can be a combination of “actual reward” such as click or transaction and how much signal a user gave while doing an interaction. In such a reward formulation, all kinds of models can be tried to learn user signals faster.

-

Silent Failures can be extremely costly - Machine learning systems, and recommendation systems in general, can fail silently. Examples can be - The input data can have missing value or the input data can have a data drift, the outputs can be coming with more than expected latency, the recommendation systems might be going to fallback models, some kind of instrumentation change might not have been accounted for, etc. In general, multiple teams such as the Applied Scientists, ML Platform Engineers, Data Analysts, Backend and Frontend engineers are all involved in pulling off a large scale recommendation system and hence in the absence of a very good monitoring, debugging and alerting system, recommendation systems can fail for minutes, hours or days. If you consumer experience (revenue or churn) is related to the recommendation system in the right way, you might lose out on few percentage points of your revenue without you even knowing it.

I hope you find my newsletters useful. Please subscribe to my newsletters to keep me motivated about writing.

from Hacker News https://ift.tt/zmlRbc9

No comments:

Post a Comment

Note: Only a member of this blog may post a comment.