Compute Express Link Overview

written by ben on 2022-06-01

Intro

Compute Express Link (CXL) is the next spec of significance for connecting hardware devices. It will replace or supplement existing stalwarts like PCIe. Adoption is starting in the datacenter, and the specification definitely provides interesting possibilities for client and embedded devices. A few years ago, the picture wasn't so clear. The original release of the CXL specification wasn't earth-shattering. There were competing standards with intent hardware vendors behind them. The drive to, and release of, the Compute Express Link 2.0 specification changed much of that.

There are a bunch of really great materials hosted by the CXL consortium. I find that these are primarily geared toward use cases, hardware vendors, and sales & marketing. This blog series will dissect CXL with the scalpel of a software engineer. I intend to provide an in depth overview of the driver architecture, and go over how the development was done before there was hardware. This post will go over the important parts of the specification as I see them.

All spec references are relative to the 2.0 specification which can be obtained here.

What it is

Let's start with two practical examples.

- If one were creating a PCIe based memory expansion device today, and desired that the device expose coherent byte addressable memory, there would really be only two viable options. One could expose this memory as memory mapped input/output (MMIO) via a Base Address Register (BAR). Without horrendous hacks, and to have ubiquitous CPU support, the only sane way this can work is if you map the MMIO as uncached (UC), which has a distinct performance penalty. For more details on coherent memory access to the GPU, see my previous blog post. Access to the device memory should be fast (at least, not limited by the protocol's restrictions), and we haven't managed to accomplish that. In fact, the NVMe 1.4 specification introduces the Persistent Memory Region (PMR), which sort of does this but is still limited.

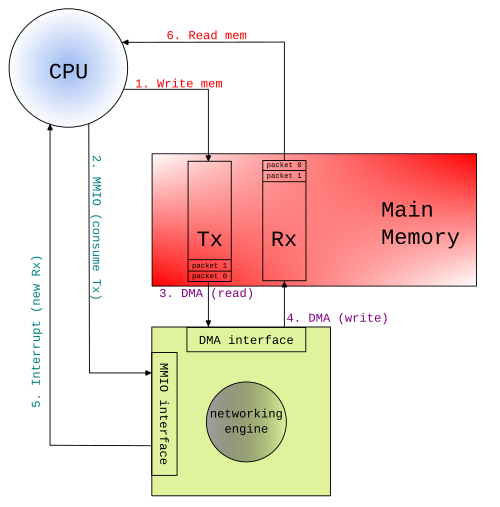

- If one were creating a PCIe based device whose main job was to do Network Address Translation (NAT) (or some other IP packet mutation) that was to be done by the CPU, critical memory bandwidth would be needed for this. This is because the CPU will have to read data in from the device, modify it, and write it back out, and the only way to do this via PCIe is to go through main memory.

CXL defines 3 protocols that work on top of PCIe (Chapter 3 of the CXL 2.0 specification) and that enable a general purpose way to implement the examples. Two of these protocols help address our 'coherent but fast' problem above. I'll call them the data protocols. The third, CXL.io, can be thought of as a stricter set of requirements over PCIe config cycles. I'll call that the enumeration/configuration protocol. We'll not discuss that in any depth as it's not particularly interesting.

There are plenty of great overviews such as this one. The point of this blog is to focus on the specific aspects driver writers and reviewers might care about.

CXL.cache

But first, a bit on PCIe coherency. Modern x86 architectures have cache coherent PCIe DMA. For DMA reads this simply means that the DMA engine obtains the most recent copy of the data. For writes, once the DMA is complete the DMA engine will send an invalidation request to the host CPU to invalidate the range that was DMA'd. Fundamentally however, using this is generally not optimal since keeping coherency would require the CPU to basically snoop the PCIe interconnect all the time. This would be bad for power and performance. As such, drivers generally manage coherency via software mechanisms. There are exceptions to this rule, but I'm not intimately familiar with them, so I won't add more detail.

CXL.cache is interesting because it allows the device to participate in the CPU cache coherency protocol as if it were another CPU rather than being a device. From a software perspective, it's the less interesting of the two data protocols. Chapter 3.2.x has a lot of words around what this protocol is for, and how it is designed to work. This protocol is targeted towards accelerators which do not have anything to provide to the system in terms of resources, but instead utilize host-attached memory and a local cache. The CXL.cache protocol, if successfully negotiated throughout the CXL topology, host to endpoint, should just work. It permits the device to have a coherent view of memory without software intervention. Similarly, the host is able to read from the device caches without using main memory as a stopping point. Main memory can be skipped, and data can instead be transferred directly over the CXL.cache protocol. The protocol describes snoop filtering and the necessary messages to keep coherency. At this level, consider it a more efficient version of PCIe coherency.

CXL.cache has a bidirectional request/response protocol where a request can be made from host to device (H2D) or vice-versa (D2H). The set of commands is what you'd expect for proper snooping. For example, the host issues H2D requests with one of the 3 Snp* opcodes defined in 3.2.4.3.X, which let it gain exclusive access to a line, gain shared access, or just get the current value. The device, in turn, uses one of several commands in Table 18 to read/write/invalidate/flush (similar uses).
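To make the three snoop flavors a bit more concrete, here is a toy model of how a device-side cache line might react to each of them. The opcode names `SnpData`, `SnpInv`, and `SnpCur` are my reading of the spec, and the state transitions are deliberately simplified (the spec gives the device more freedom than this, for example dropping straight to Invalid on a shared snoop). Treat it as a sketch, not as the protocol.

```python
from enum import Enum


class State(Enum):
    """MESI states of one cacheline in the device cache."""
    M = "Modified"
    E = "Exclusive"
    S = "Shared"
    I = "Invalid"


class Snoop(Enum):
    """The three H2D snoop flavors (names assumed from the spec)."""
    SNP_DATA = "SnpData"  # host wants shared access to the line
    SNP_INV = "SnpInv"    # host wants exclusive access to the line
    SNP_CUR = "SnpCur"    # host only wants the current value


def device_state_after(snoop: Snoop, state: State) -> State:
    """Simplified device-side state transition for a single snoop."""
    if state is State.I:
        return State.I          # nothing cached, nothing to give up
    if snoop is Snoop.SNP_INV:
        return State.I          # host takes the line exclusively
    if snoop is Snoop.SNP_DATA:
        return State.S          # host and device now share the line
    return state                # SnpCur: value is read, state is kept


def must_forward_data(state: State) -> bool:
    """Only a modified line holds data the host cannot get elsewhere."""
    return state is State.M
```

The point of the model is only that the host decides how much ownership it takes away from the device, which is the same decision one CPU makes about another CPU's cache.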

One might also notice that the peer to peer case isn't covered. The CXL model however makes every device/CPU a peer in the CXL.cache domain. While the current CXL specification doesn't address skipping CPU caches in this manner entirely, it'd be a safe bet to assume a specification so comprehensive would be getting there soon. CXL would allow this more generically than NVMe.

To summarize, CXL.cache essentially lets CPU and device caches remain coherent without needing to use main memory as the synchronization barrier.

CXL.mem

If CXL.cache is for devices that don't provide resources, CXL.mem is exactly the opposite. CXL.mem allows the CPU to have coherent byte addressable access to device-attached memory while maintaining its own internal cache. In CXL.cache every entity is a peer, and either the device or the host can send requests and responses. CXL.mem is different: the CPU, known as the "master" in the CXL spec, is responsible for sending requests, and the CXL subordinate (device) sends the response. Introduced in CXL 1.1, CXL.mem was added for Type 2 devices. Requests from the master to the subordinate are "M2S" and responses are "S2M".

When CXL.cache isn't also present, CXL.mem is very straightforward. All requests boil down to a read, or a write. When CXL.cache is present, the situation is more tricky. For performance improvements, the host will tell the subordinate about certain ranges of memory which may not need to handle coherency between the device cache, device-attached memory, and the host cache. There is also metadata passed along to inform the device about the current cacheline state. Both the master and subordinate need to keep their cache state in harmony.

The requests are known as

- Req - Request without data. These are generally reads and invalidates, where the response will put the data on the S2M's data channel.

- RwD - Request with data. These are generally writes, where the data channel has the data to write.

The responses:

- NDR - No Data Response. These are generally completions on state changes on the device side, such as, writeback complete.

- DRS - Data Response. This is used for returning data, i.e., on a read request from the master.
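The four message classes pair up naturally, which a toy subordinate makes easy to see. The sketch below is a hypothetical model, not anything from the spec or the driver: `Subordinate` and `handle` are names I made up, every Req is treated as a read (invalidates are ignored), and a 64 byte cacheline is assumed.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

LINE = 64  # assumed cacheline size in bytes


@dataclass
class Subordinate:
    """Toy CXL.mem device: a sparse map of cacheline address -> contents."""
    lines: Dict[int, bytes] = field(default_factory=dict)

    def handle(self, channel: str, addr: int,
               data: Optional[bytes] = None) -> Tuple[str, Optional[bytes]]:
        """Take one M2S message and return the (S2M class, payload) reply."""
        if addr % LINE:
            raise ValueError("address must be cacheline aligned")
        if channel == "Req":
            # Request without data: modeled as a read, answered on DRS.
            return ("DRS", self.lines.get(addr, bytes(LINE)))
        if channel == "RwD":
            # Request with data: a write, answered with a bare completion.
            if data is None or len(data) != LINE:
                raise ValueError("RwD needs exactly one cacheline of data")
            self.lines[addr] = data
            return ("NDR", None)
        raise ValueError(f"unknown M2S channel: {channel}")
```

A write followed by a read of the same line would go `RwD` then `NDR`, and `Req` then `DRS` carrying the data just written. The subordinate never initiates anything on its own, which is the master/subordinate split in a nutshell.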

Bias controls

Unsurprisingly, strict coherency often negatively impacts bandwidth, or latency, or both. While it's generally ideal from a software model to be coherent, it likely won't be ideal for performance. The CXL specification has a solution for this. Chapter 2.2.1 describes a knob which provides a hint about which entity should pay for that coherency (CPU vs. device). For many HPC workloads, such as weather modeling, large sets of data are uploaded to an accelerator's device-attached memory via the CPU, then the accelerator crunches numbers on the data, and finally the CPU downloads the results. In CXL, at all times, the model data is coherent for both the device and the CPU. Depending on the bias however, one or the other will take a performance hit.

Host vs. device bias

Using the weather modeling example, there are 4 interesting flows.

1. CPU writes data to device-attached memory.

2. GPU reads data from device-attached memory.

3. *GPU writes data to device-attached memory.

4. CPU reads data from device-attached memory.

*#3 above poses an interesting situation that was possible only with bespoke hardware. The GPU could in theory write that data out via CXL.cache and short-circuit another bias change. In practice though, many such usages would blow out the cache.

The CPU coherency engine has been a thing for a long time. One might ask, why not just use that and be done with it. Well, easy one first, a Device Coherency Engine (DCOH) was already required for CXL.cache protocol support. More practically however, the hit to latency and bandwidth is significant if every cacheline access requires a check-in with the CPU's coherency engine. What this means is that when the device wishes to access data from a line, it must first determine the cacheline state (DCOH can track this). If that line isn't exclusive to the accelerator, the accelerator needs to use the CXL.cache protocol to request that the CPU make things coherent, and only once that completes can it access its device-attached memory. Why is that? If you recall, CXL.cache is essentially where the device is the initiator of the request, and CXL.mem is where the CPU is the initiator.

So suppose we continue on this CPU owns coherency adventure. #1 looks great, the CPU can quickly upload the dataset. However, #2 will immediately hit the bottleneck just mentioned. Similarly for #3, even though a flush won't have to occur, the accelerator will still need to send a request to the CPU to make sure the line gets invalidated. To sum up, we have coherency, but half of our operations are slower than they need to be.

To address this, a [fairly vague] description of bias controls is defined. When in host bias mode, the CPU coherency engine effectively owns the cacheline state (the contents are shared of course) by requiring the device to use CXL.cache for coherency. In device bias mode however, the host will use CXL.mem commands to ensure coherency. This is why Type 2 devices need both CXL.cache and CXL.mem.
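The argument above boils down to a very small rule: whoever the bias does not favor pays for coherency. Here is a sketch of the four weather modeling flows under that rule. It is my own simplification (the names `FLOWS`, `pays_for_coherency`, and `slow_flows` are made up), and it ignores that flipping the bias has a cost of its own.

```python
# The four flows from the weather modeling example, with their initiator.
FLOWS = [
    ("CPU writes data to device-attached memory", "host"),
    ("GPU reads data from device-attached memory", "device"),
    ("GPU writes data to device-attached memory", "device"),
    ("CPU reads data from device-attached memory", "host"),
]


def pays_for_coherency(initiator: str, bias: str) -> bool:
    """Assumed rule: the side the bias does not favor takes the hit."""
    return initiator != bias


def slow_flows(bias_per_flow):
    """Return the 1-based numbers of the flows that pay the penalty."""
    return [
        number
        for number, ((_, initiator), bias)
        in enumerate(zip(FLOWS, bias_per_flow), start=1)
        if pays_for_coherency(initiator, bias)
    ]


# Staying in host bias the whole time penalizes both GPU flows.
always_host = slow_flows(["host"] * 4)

# Flipping to device bias while the GPU works avoids the penalty.
flipped = slow_flows(["host", "device", "device", "host"])
```

With the bias left on the host, `always_host` comes out as flows 2 and 3, which is the "half of our operations are slower" case. With the bias flipped around the compute phase, `flipped` is empty.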

Device types

I'd like to know why they didn't start numbering at 0. I've already talked quite a bit about device types. I believe it made sense to define the protocols first though so that device types would make more sense. CXL 1.1 introduced two device types, and CXL 2.0 added a third. All types implement CXL.io, the less than exciting protocol we ignore.

| Type | CXL.cache | CXL.mem |
|---|---|---|
| 1 | y | n |
| 2 | y | y |
| 3 | n | y |
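Read as code, the table is just a lookup from the pair of data protocols a device implements to its type. A minimal sketch, with `Protocols` and `device_type` being names I invented for illustration:

```python
from typing import NamedTuple


class Protocols(NamedTuple):
    """Which of the two data protocols a device implements."""
    cache: bool
    mem: bool


# Direct transcription of the table; CXL.io is implied for every type.
DEVICE_TYPES = {
    Protocols(cache=True, mem=False): 1,
    Protocols(cache=True, mem=True): 2,
    Protocols(cache=False, mem=True): 3,
}


def device_type(cache: bool, mem: bool) -> int:
    """Map protocol support to the CXL device type from the table."""
    try:
        return DEVICE_TYPES[Protocols(cache, mem)]
    except KeyError:
        # CXL.io alone is not one of the types listed in the table.
        raise ValueError("no device type without a data protocol") from None
```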

Just from looking at the table it'd be wise to ask, if Type 2 does both protocols, why do Type 1 and Type 3 devices exist. In short, gate savings can be had with Type 1 devices not needing CXL.mem, and Type 3 devices offer gate savings and increased performance because they don't have to manage internal cache coherency. More on this next...

CXL Type 1 Devices

These are your accelerators without local memory.

The quintessential Type 1 device is the NIC. A NIC pushes data from memory out onto the wire, or pulls from the wire and into memory. It might perform many steps, such as repackaging a packet, or encryption, or reordering packets (I dunno, not a networking person). Our NAT example above is one such case.

How you might envision that working is the PCIe device would write the incoming packet into the Rx buffer. The CPU would copy that packet out of the Rx buffer, update the IP and port, then write it into the Tx buffer. This set of steps would use memory write bandwidth when the device wrote into the Rx buffer, memory read bandwidth when the CPU copied the packet, and memory write bandwidth when the CPU writes into the Tx buffer. Again, NVMe has a concept to support a subset of this case for peer to peer DMA called Controller Memory Buffers (CMB), but this is limited to NVMe based devices, and doesn't help with coherency on the CPU. Summarizing, (D is device cache, M is memory, H is Host/CPU cache)

- (D2M) Device writes into Rx Queue

- (M2H) Host copies out buffer

- (H2M) Host writes into Tx Queue

- (M2D) Device reads from Tx Queue

Post-CXL this becomes a matter of managing cache ownership throughout the pipeline. The NIC would write the incoming packet into the Rx buffer. The CPU would likely copy it out so as to prevent blocking future packets from coming in. Once done, the CPU has the buffer in its cache. The packet information could be mutated all in the cache, and then delivered to the Tx queue for sending out. Since the NIC may decide to mutate the packet further before going out, it'd issue the RdOwn opcode (3.2.4.1.7), from which point it would effectively own that cacheline.

- (D2M) Device writes into Rx Queue

- (M2H) Host copies out buffer

- (H2D) Host transfers ownership into Tx Queue

With accelerators that don't have the possibility of causing backpressure like the Rx queue does, step 2 could be removed.
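The two step lists can be compared by counting how many hops touch main memory, since that is where the memory bandwidth goes. This is only bookkeeping over the labels used above (D is device cache, M is memory, H is host cache), and `memory_transfers` is a hypothetical helper.

```python
# Step labels copied from the two lists above.
PRE_CXL = ["D2M", "M2H", "H2M", "M2D"]
POST_CXL = ["D2M", "M2H", "H2D"]


def memory_transfers(steps):
    """Count the steps that have main memory (M) as source or target."""
    return sum(1 for step in steps if "M" in step.split("2"))


pre = memory_transfers(PRE_CXL)    # every hop goes through main memory
post = memory_transfers(POST_CXL)  # the Tx side stays cache to cache
```

In this accounting the pre-CXL pipeline crosses main memory four times and the post-CXL one twice. It says nothing about latency or about how large the caches would need to be.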

CXL Type 2 Devices

These are your accelerators with local memory.

Type 2 devices are mandated to support both data protocols and as such, must implement their own DCOH engine (this will vary in complexity based on the underlying device's cache hierarchy complexity). One can think of this problem the same way as multiple CPUs where each has their own L1/L2, but a shared L3 (like Intel CPUs have, where L3 is LLC). Each CPU would need to track transitions between the local L1 and L2, and the L2 to the global L3. TL;DR on this is, for Type 2 devices, there's a relatively complex flow to manage local cache state on the device in relation to the host-attached memory they are using.

In a pre-CXL world, if a device wants to access its own memory, caches or no, it would have the logic to do so. For example, in GPUs, the sampler generally has a cache. If you try to access texture data via the sampler that is already in the sampler cache, everything remains internal to the device. Similarly, if the CPU wishes to modify the texture, an explicit command to invalidate the GPU's sampler cache must be issued before it can be reliably used by the GPU (or flushed if your GPU was modifying the texture).

Continuing with this example in the post-CXL world, the texture lives in graphics memory on the card, and that graphics memory is participating in CXL.mem protocol. That would imply that should the CPU want to inspect, or worse, modify the texture it can do so in a coherent fashion. Later in Type 3 devices, we'll figure out how none of this needs to be that complex for memory expanders.

CXL Type 3 Devices

These are your memory modules. They provide memory capacity that's persistent, volatile, or a combination.

Even though a Type 2 device could technically behave as a memory expander, it's not ideal to do so. The nature of a Type 2 device is that it has a cache which also needs to be maintained. Even with meticulous use of bias controls, extra invalidations and flushes will need to occur, and of course, extra gates are needed to handle this logic. The host CPU does not know a Type 2 device has no cache. To address this, the CXL 2.0 specification introduces a new type, Type 3, which is a "dumb" memory expander device. Since this device has no visible caches (because there is no accelerator), a reduced set of the CXL.mem protocol can be used. The CPU will never need to snoop the device, which means the CPU's cache is the cache of truth. What this also implies is that a CXL Type 3 device simply provides device-attached memory to the system for any use. Hotplug is permitted. Type 3 peer to peer is absent from the 2.0 spec, and unlike CXL.cache, it's not as clear to see the path forward because CXL.mem is a Master/Subordinate protocol.

In a pre-CXL world the closest things you'll find to this are a combination of PCIe based NVMe devices (already discussed above), NVDIMM devices, and of course, attached DRAM. All three of these have their attributes conveyed via BIOS in one way or another.

In a post-CXL world the story is changed in the sense that the OS is responsible for much of the configuration and this is why Type 3 devices are the most interesting from a software perspective.

Host physical address space management

This would apply to Type 3 devices, but technically could also apply to Type 2 devices.

Even though the protocols and use-cases should be understood, the devil is in the details with software enabling. Type 1 and Type 2 devices will largely gain benefit just from hardware; perhaps some flows might need driver changes, i.e., reducing flushes and/or copies which wouldn't be needed. Type 3 devices on the other hand are a whole new ball of wax.

Type 3 devices will need host physical address space allocated dynamically (it's not entirely unlike memory hot plug, but it is trickier in some ways). The devices will need to be programmed to accept those addresses. And last but not least, those devices will need to be maintained using a spec defined mailbox interface.
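To give a feel for the first two of those jobs, here is a toy allocator that hands out host physical address (HPA) windows and translates an HPA back to a device physical address (DPA). Everything in it is an assumption for illustration: the names are invented, the 256 MiB granularity is what I believe the 2.0 decoders work with, there is no interleaving, and each window is taken to map to DPA 0. The real driver is considerably more involved.

```python
from dataclasses import dataclass

ALIGN = 256 << 20  # assumed window granularity: 256 MiB


def align_up(value: int, alignment: int = ALIGN) -> int:
    """Round value up to the next multiple of alignment."""
    return (value + alignment - 1) // alignment * alignment


@dataclass(frozen=True)
class Window:
    """A range of host physical addresses routed to one device."""
    hpa_base: int
    size: int

    def to_dpa(self, hpa: int) -> int:
        """Translate an HPA to a DPA, assuming the window starts at DPA 0."""
        if not self.hpa_base <= hpa < self.hpa_base + self.size:
            raise ValueError("HPA is outside this window")
        return hpa - self.hpa_base


class HpaAllocator:
    """Bump allocator over a free range of host physical address space."""

    def __init__(self, base: int, limit: int):
        self.next_free = align_up(base)
        self.limit = limit

    def allocate(self, size: int) -> Window:
        """Carve the next aligned window out of the free range."""
        size = align_up(size)
        if self.next_free + size > self.limit:
            raise MemoryError("no host physical address space left")
        window = Window(self.next_free, size)
        self.next_free += size
        return window
```

The window returned by `allocate` is what the device would then have to be programmed with, so that accesses landing in that HPA range are accepted and mapped onto its own memory.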

The next chapter will start in the same way the driver did, the mailbox interface used for device information and configuration.

Summary

Important takeaways are as follows:

- CXL.cache allows CPUs and devices to operate on host caches uniformly.

- CXL.mem allows devices to export their memory coherently.

- Bias controls mitigate performance penalties of CXL.mem coherency.

- Type 3 devices provide a subset of CXL.mem, for memory expanders.
