A few months ago, I posted a video titled Enterprise SAS RAID on the Raspberry Pi... but I never actually showed a SAS drive in it. And soon after, I posted another video, The Fastest SATA RAID on a Raspberry Pi.

Well now I have actual enterprise SAS drives running on a hardware RAID controller on a Raspberry Pi, and it's faster than the 'fastest' SATA RAID array I set up in that other video.

A Broadcom engineer named Josh watched my earlier videos and realized the ancient LSI card I was testing likely would not work with the ARM processor in the Pi, so he sent two pieces of kit my way.

After a long and arduous journey involving multiple driver revisions and UART debugging on the card, I was able to bring up multiple hardware RAID arrays on the Pi.

This blog post is also available in video form on my YouTube channel.

SAS RAID

But what is SAS RAID, and what makes hardware RAID any better than the software RAID I used in my SATA video?

The drives you might use in a NAS or a server today usually fall into three categories:

- SATA

- SAS

- PCI Express NVMe

All three types can use solid state storage (SSD) for high IOPS and fast transfer speeds.

SATA and SAS drives might also use rotational storage, which offers higher capacity at lower prices, though there's a severe latency tradeoff with that kind of drive.

RAID, which stands for Redundant Array of Independent Disks, is a method of taking two or more drives and putting them together into a volume that your operating system can see as if it were just one drive.

RAID can help with:

- Redundancy: A hard drive (or multiple drives, depending on RAID type) can fail and you won't lose access to your data immediately.

- Performance: multiple drives can be 'striped' to increase read or write throughput (again, depending on RAID type).

Extra caching configuration (to separate RAM or even to dedicated faster 'cache' drives) can speed up things even more than is possible on the main drives themselves, and hardware RAID can provide even better data protection with separate flash storage that caches write data if power is gone.

Caveat: If you can fit all your data on one hard drive, already have a good backup system in place, and don't need maximum availability, you probably don't need RAID.

I go into a lot more detail on RAID itself in my Raspberry Pi SATA RAID NAS video, so check that out to learn more.

Now, back to SATA, SAS, and NVMe.

All three of these describe interfaces used for storage devices. And the best thing about a modern storage controller like the 9460-16i is that you can connect to all three through one single HBA (Host-Bus Adapter).

If you can spend a couple thousand bucks on a fast PC with lots of RAM, software RAID solutions like ZFS or BTRFS offer a lot of great features, and are pretty reliable.

But on a system like my Raspberry Pi, software-based RAID takes up much of the Pi's CPU and RAM, and doesn't perform as well. (See this excellent post about /u/rigg77's ZFS experiment).

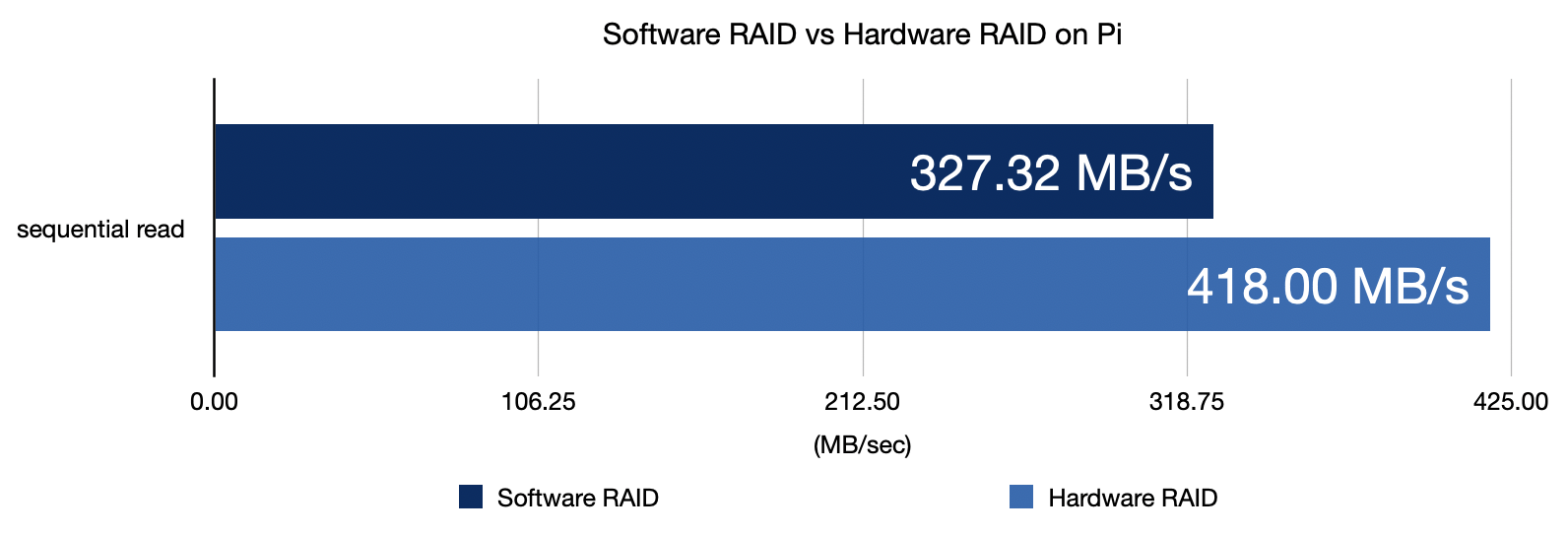

The fastest disk speed I could get with software mdadm-based RAID was about 325 MB/sec, and that was with RAID 10. Parity calculations may make that maximum speed even lower!

Using hardware RAID allowed me to get over 400 MB/sec. That's a 20% performance increase, AND it leaves the Pi's CPU free to do other things.

We'll get more into performance later, but first, I have to address the elephant in the room.

Why enterprise RAID on a Pi?

People are always asking why I test all these cards—in this case, a storage card that costs $600 used—on low-powered Raspberry Pis. I even have a 10th-gen Intel desktop in my office, so, why not use that?

Well, first of all, it's fun, I enjoy the challenge, and I get to learn a lot since failure teaches me a lot more than easy success.

But with this card, there are two other good reasons:

First: it's great for a little storage lab.

In a tiny corner of my desk, I can put 8 drives, an enterprise RAID controller, my Pi, and some other gear for testing. When I do the same thing with my desktop, I quickly run out of space. The burden of having to go over to my other "desktop computer" desk to test means I am less likely to set things up on a whim to try out some new idea.

Second: using hardware RAID takes the IO burden off the Pi's already slow processor.

Having a fast dedicated RAID chip and an extra 4 GB of DDR4 cache for storage gives the Pi reliable, fast disk IO.

If you don't need to pump through gigabytes per second, the Pi and MegaRAID together are more energy efficient than running software RAID on a faster CPU. That setup used 10-20W of power.

My Intel i3 desktop running by itself with no RAID card or storage attached idles at 25W but usually hovers around 40W—twice the power consumption, without a storage card installed.

A Pi-based RAID solution isn't going to take over Amazon's data centers, though. The Pi just isn't built for that. But it is a compelling storage setup that was never possible until the Compute Module 4 came along.

Broadcom MegaRAID 9460-16i

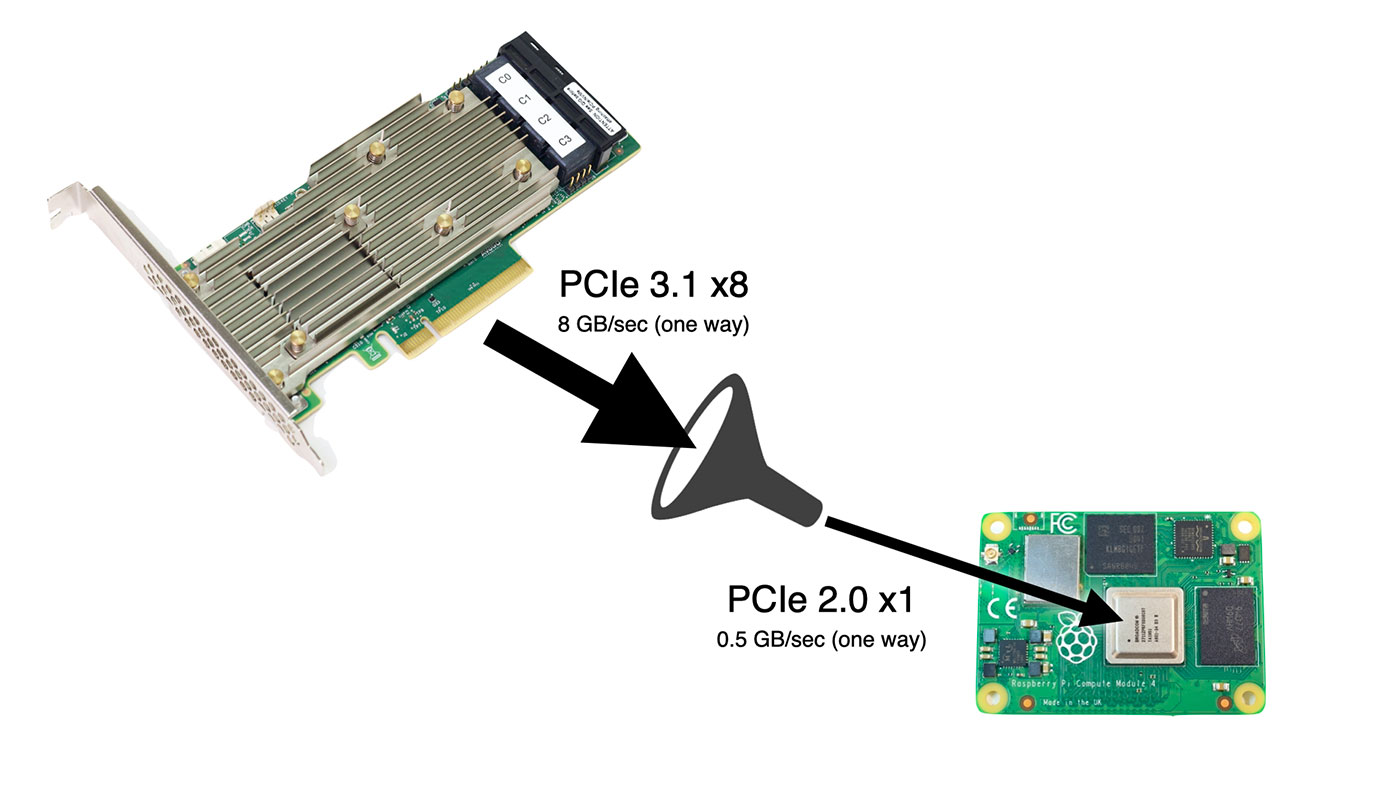

The MegaRAID card Josh sent is PCIe Gen 3.1 and supports x8 lanes of PCIe bandwidth.

Unfortunately, the Pi can only handle x1 lane at Gen 2 speeds, meaning you can't get the maximum 6+ GB/sec of storage throughput—only 1/12 that. But the Pi can use four fancy tricks this card has that the SATA cards I tested earlier don't:

- SAS RAID-on-Chip, a computer in its own regard, taking care of all the RAID storage operations. The Pi's slow CPU is saved from having to manage RAID operations.

- 4 GB DDR4 SDRAM cache, speeding up IO on slower drives, and saving the Pi's precious 1/2/4/8 GB of RAM.

- Optional CacheVault flash backup: plug in a super capacitor, and if there's a power outage, it dumps all the memory in the card's write cache to a built-in flash storage chip.

- Tri-mode ports allow you to plug any kind of drive into the card (SATA, SAS, or NVMe), and it will work with it.

It also does everything internal to the RAID-on-Chip, so even if you plug in multiple NVMe drives, you won't bottleneck the Pi's poor little CPU (with its severely limited IO bandwidth). Even faster processors can run into bandwidth issues when using multiple NVMe drives.
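The gap between the card's x8 Gen 3 link and the Pi's x1 Gen 2 link can be checked with some quick arithmetic. This is a rough sketch that only accounts for line encoding (8b/10b for Gen 2, 128b/130b for Gen 3), so real-world throughput will be lower; the function name is made up for illustration.

```shell
#!/bin/sh
# Approximate raw PCIe bandwidth in MB/s for a given generation and lane count.
# Only line-encoding overhead is modeled; protocol overhead is ignored.
pcie_mb_per_sec() {
    gen=$1; lanes=$2
    awk -v gen="$gen" -v lanes="$lanes" 'BEGIN {
        if (gen == 2)      { gts = 5; eff = 8 / 10 }     # 5 GT/s, 8b/10b encoding
        else if (gen == 3) { gts = 8; eff = 128 / 130 }  # 8 GT/s, 128b/130b encoding
        else               { exit 1 }                    # other generations not handled
        # GT/s * efficiency = Gbit/s per lane; / 8 = GB/s; * 1000 = MB/s
        printf "%.0f\n", gts * eff / 8 * 1000 * lanes
    }'
}

pcie_mb_per_sec 2 1   # the Pi: Gen 2, x1 (prints 500)
pcie_mb_per_sec 3 8   # the MegaRAID card: Gen 3, x8 (prints 7877)
```

The 500 MB/sec ceiling on the Pi side lines up with the "1/12 of 6 GB/sec" figure above.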

Oh, and did I mention it can connect up to 24 NVMe drives or a whopping 240 SAS or SATA drives to the Pi?

I, for one, would love to see a 240 drive petabyte Pi NAS...

Getting the card to work

Josh sent over a driver, some helpful compilation suggestions, and some utilities with the card.

I had some trouble compiling the driver at first, and ran into a couple problems immediately:

- The raspberrypi-kernel-headers package didn't exist for the 64-bit Pi OS beta (it has since been created), so I had to compile my own headers for the 64-bit kernel.

- Raspberry Pi OS didn't support MSI-X, but luckily Phil Elwell committed a kernel tweak to Pi OS that enabled it (at least at a basic level) in response to a forum topic on getting the Google Coral TPU working over PCIe.

With those two issues resolved, I cross-compiled the Pi OS kernel again, and ran into another issue: the driver assumed it would have CONFIG_IRQ_POLL=y for IRQ polling functionality, but that wasn't set by default for the Pi kernel, so I had to recompile again to get that option working.
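A check like the following could catch a missing option before spending an hour on a build. It is a minimal sketch: the helper name is hypothetical, and it assumes you have a plain-text kernel config file to inspect (for example the .config in the kernel source tree).

```shell
#!/bin/sh
# Return success if a kernel option is built in (=y) in the given config file.
kconfig_enabled() {
    option=$1; config_file=$2
    grep -q "^${option}=y$" "$config_file"
}

# Hypothetical usage on a running Pi. /proc/config.gz only exists when the
# kernel exposes its config, which may first require: sudo modprobe configs
# zcat /proc/config.gz > /tmp/running.config
# kconfig_enabled CONFIG_IRQ_POLL /tmp/running.config && echo "IRQ polling is enabled"
```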

Finally, thinking I was in the clear, I found that the kernel headers and source from my cross-compiled kernel weren't built for ARM64 like the kernel itself, and rather than continue debugging my cross-compile environment, I bit the bullet and did the full 1-hour compile on the Raspberry Pi itself.

An hour later, the driver compiled without errors for the first time.

I excitedly ran sudo insmod megaraid_sas.ko, and then... it hung, and after five minutes, printed the following in dmesg:

[ 372.867846] megaraid_sas 0000:01:00.0: Init cmd return status FAILED for SCSI host 0

[ 373.054122] megaraid_sas 0000:01:00.0: Failed from megasas_init_fw 6747
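When the kernel log is busy, a small filter makes driver failures like these easier to spot. This is just a sketch with a made-up function name; it matches on the driver name and the word "fail" in any case, which fits the two lines above but may miss other error wording.

```shell
#!/bin/sh
# Keep only megaraid_sas kernel log lines that mention a failure.
megaraid_failures() {
    grep 'megaraid_sas' | grep -i 'fail'
}

# On the Pi, after the insmod attempt (reading dmesg may need sudo):
# dmesg | megaraid_failures
```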

Things were getting serious, because two other Broadcom engineers joined a conference call with Josh and me, and they had me pull off the serial UART output from the card to debug PCIe memory addressing issues!

We found the driver would work on 32-bit Pi OS, but not the 64-bit beta. Which was strange, since in my experience drivers have often worked better under the 64-bit OS.

A few days later, a Broadcom driver engineer sent over a patch that fixed the problem. It was related to the use of the writeq function, which is apparently not well supported on the Pi. Josh filed a bug report to the Pi kernel issue queue about it: writeq() on 64 bit doesn't issue PCIe cycle, switching to two writel() works.

Anyways, the driver finally worked, and I could see the attached storage enclosure being identified in the dmesg output!

Setting up RAID with StorCLI

StorCLI is a utility for managing RAID volumes on the MegaRAID card, and Broadcom has a comprehensive StorCLI reference on their website.

The command I used to set up a 4-drive SAS RAID 5 array went like this:

sudo ./storcli64 /c0 add vd r5 name=SASR5 drives=97:4-7 pdcache=default AWB ra direct Strip=256

- I named the r5 array SASR5

- The array uses drives 4-7 in storage enclosure ID 97

- There are a few caching options.

- I set the strip size to 256 KB (which is typical for HDDs—64 KB would be more common for SSDs).
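To see how the pieces of that command fit together, here is a small sketch that assembles the same command line from named parameters. It only prints the command rather than running it, uses only the options that appear above, and the function name is hypothetical. As far as I can tell from the StorCLI reference, AWB means always write back, ra means read ahead, and direct means direct IO; check the reference before relying on that.

```shell
#!/bin/sh
# Build (but do not run) the storcli64 command for a new virtual drive.
# Arguments: controller index, RAID level, volume name, enclosure ID,
# slot range, strip size in KB. Cache options are fixed to those used above.
build_add_vd() {
    controller=$1; level=$2; name=$3; enclosure=$4; slots=$5; strip_kb=$6
    echo "./storcli64 /c${controller} add vd r${level} name=${name}" \
         "drives=${enclosure}:${slots} pdcache=default AWB ra direct Strip=${strip_kb}"
}

# Reproduces the SAS RAID 5 command from above (minus sudo):
build_add_vd 0 5 SASR5 97 4-7 256
```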

I created two RAID 5 volumes: one with four Kingston SA400 SATA SSDs, and another with four HP ProLiant 10K SAS drives.

I used lsblk -S to make sure the new devices, sda and sdb, were visible on my system. Then I partitioned and formatted them with:

sudo fdisk /dev/sda

sudo mkfs.ext4 /dev/sda

At this point, I had a 333 GB SSD array, and an 836 GB SAS array. I mounted them and made sure I could read and write to them.
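A quick read/write check on a mounted array could look something like this. It is a minimal sketch: the function name and the /mnt/ssd mount point are assumptions, and mounting itself needs root and the real device.

```shell
#!/bin/sh
# Write a small file under a directory, read it back, and compare the contents.
verify_rw() {
    dir=$1
    probe="$dir/.rw_probe.$$"
    expected="raid-rw-check"
    echo "$expected" > "$probe" || return 1
    actual=$(cat "$probe") || return 1
    rm -f "$probe"
    [ "$actual" = "$expected" ]
}

# Hypothetical usage on the Pi:
# sudo mkdir -p /mnt/ssd && sudo mount /dev/sda /mnt/ssd
# sudo sh -c '. ./verify_rw.sh; verify_rw /mnt/ssd && echo "read/write OK"'
```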

Storage on boot

I also wanted to make sure the storage arrays were available at system boot, so I could share them automatically via NFS, so I installed the compiled driver module into my kernel:

- I copied the module into the kernel drivers directory for my compiled kernel: sudo cp megaraid_sas.ko /lib/modules/$(uname -r)/kernel/drivers/

- I added the module name, 'megaraid_sas', to the end of the /etc/modules file: echo 'megaraid_sas' | sudo tee -a /etc/modules

- I ran sudo depmod and rebooted, and after boot, everything came up perfectly.
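One thing to watch with the tee -a approach is that running it twice adds the module name twice. A variant that only appends when the name is missing might look like this; the helper name is hypothetical, and on a real system it would need to run as root to write to /etc/modules.

```shell
#!/bin/sh
# Append a module name to a modules file only if it is not already listed
# as a whole line, so repeated runs leave a single entry.
add_module_once() {
    module=$1; modules_file=$2
    grep -qxF "$module" "$modules_file" 2>/dev/null || echo "$module" >> "$modules_file"
}

# Hypothetical usage as root on the Pi:
# add_module_once megaraid_sas /etc/modules
# depmod
```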

One thing to note is that a RAID card like this can take a minute or two to initialize, since it has its own initialization process. So boot times for the Pi will be a little longer if you want to wait for the storage card to come online first.
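If something at boot depends on the arrays (an NFS export, for example), one option is to poll for the block device before continuing. This is a sketch under assumptions: the function name is made up, the 180-second timeout is a guess based on the "minute or two" above, and /dev/sda is whatever device name the array gets on your system.

```shell
#!/bin/sh
# Poll once per second until a path exists or the timeout (in seconds) runs out.
# Returns 0 if the path appeared, 1 if the timeout was reached first.
wait_for_path() {
    path=$1; timeout=$2
    waited=0
    while [ ! -e "$path" ]; do
        if [ "$waited" -ge "$timeout" ]; then
            return 1
        fi
        sleep 1
        waited=$((waited + 1))
    done
    return 0
}

# Hypothetical usage in a boot script run as root:
# wait_for_path /dev/sda 180 && mount /dev/sda /mnt/ssd
```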

A note on power supplies: I used a couple different power supplies when testing the HBA. I found that if I used a lower-powered 12V 2A power supply, the card seemed to not get enough power, and would endlessly reboot itself. I switched to this 12V 5A power supply, and the card ran great. (I did not attempt using an external powered 'GPU riser', since I have had mixed experiences with them.)

Performance

With this thing fully online, I tested performance with fio.

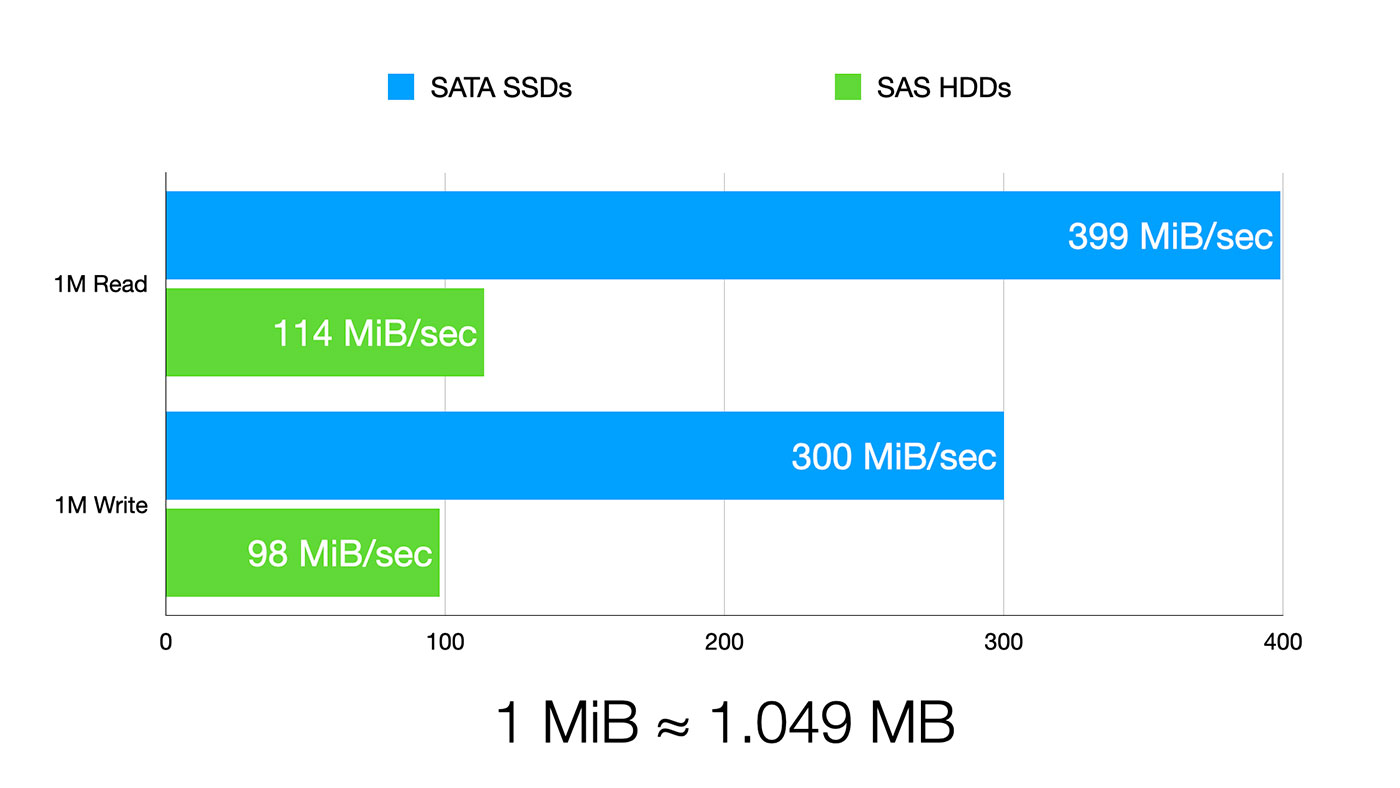

For 1 MB random reads, I got:

- 399 MiB/sec on the SSD array

- 114 MiB/sec on the SAS array

For 1 MB random writes, I got:

- 300 MiB/sec on the SSD array

- 98 MiB/sec on the SAS drives
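For anyone wanting to run a similar test, a fio invocation for 1 MB random reads or writes might look like the following. Only the 1 MB block size and the random access pattern come from this post; the file size, queue depth, runtime, IO engine, and file paths are all assumptions, and the helper only prints the command instead of running it.

```shell
#!/bin/sh
# Build (but do not run) a fio command line for a 1 MB random IO test.
# $1: randread or randwrite; $2: test file on the mounted array (hypothetical path).
# Size, iodepth, runtime, and ioengine are illustrative guesses, not the
# settings used for the results above.
build_fio_cmd() {
    rw=$1; target=$2
    echo "fio --name=${rw}-1m --filename=${target} --rw=${rw} --bs=1M" \
         "--size=1G --direct=1 --ioengine=libaio --iodepth=16" \
         "--runtime=60 --time_based --group_reporting"
}

build_fio_cmd randread /mnt/ssd/fio.test
build_fio_cmd randwrite /mnt/sas/fio.test
```

Using --direct=1 bypasses the Pi's page cache, so the numbers reflect the array and the PCIe link rather than RAM.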

Note: 1 MiB = 1.048576 MB, so 399 MiB/sec is about 418 MB/sec.

These results show two things:

First, even cheap SSDs are still faster than spinning SAS drives. No real surprise there.

Second, the limit to the SSD speed is the Pi's PCIe bus. I'm able to get 3.35 Gbps of bandwidth, and that's actually better than the bandwidth I could pump through an ASUS 10G network adapter, which could only get up to 3.27 gigabits.
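The 3.35 Gbps figure follows from the fio result with a little unit conversion, since 1 MiB is 1,048,576 bytes. A quick sketch (function names are made up for illustration):

```shell
#!/bin/sh
# Convert a throughput in MiB/s to MB/s (decimal megabytes).
mib_to_mb() {
    awk -v v="$1" 'BEGIN { printf "%.1f\n", v * 1048576 / 1000000 }'
}

# Convert a throughput in MiB/s to Gbps (decimal gigabits per second).
mib_to_gbps() {
    awk -v v="$1" 'BEGIN { printf "%.2f\n", v * 1048576 * 8 / 1000000000 }'
}

mib_to_mb 399     # SSD array random reads in MB/s (prints 418.4)
mib_to_gbps 399   # the same figure in Gbps (prints 3.35)
```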

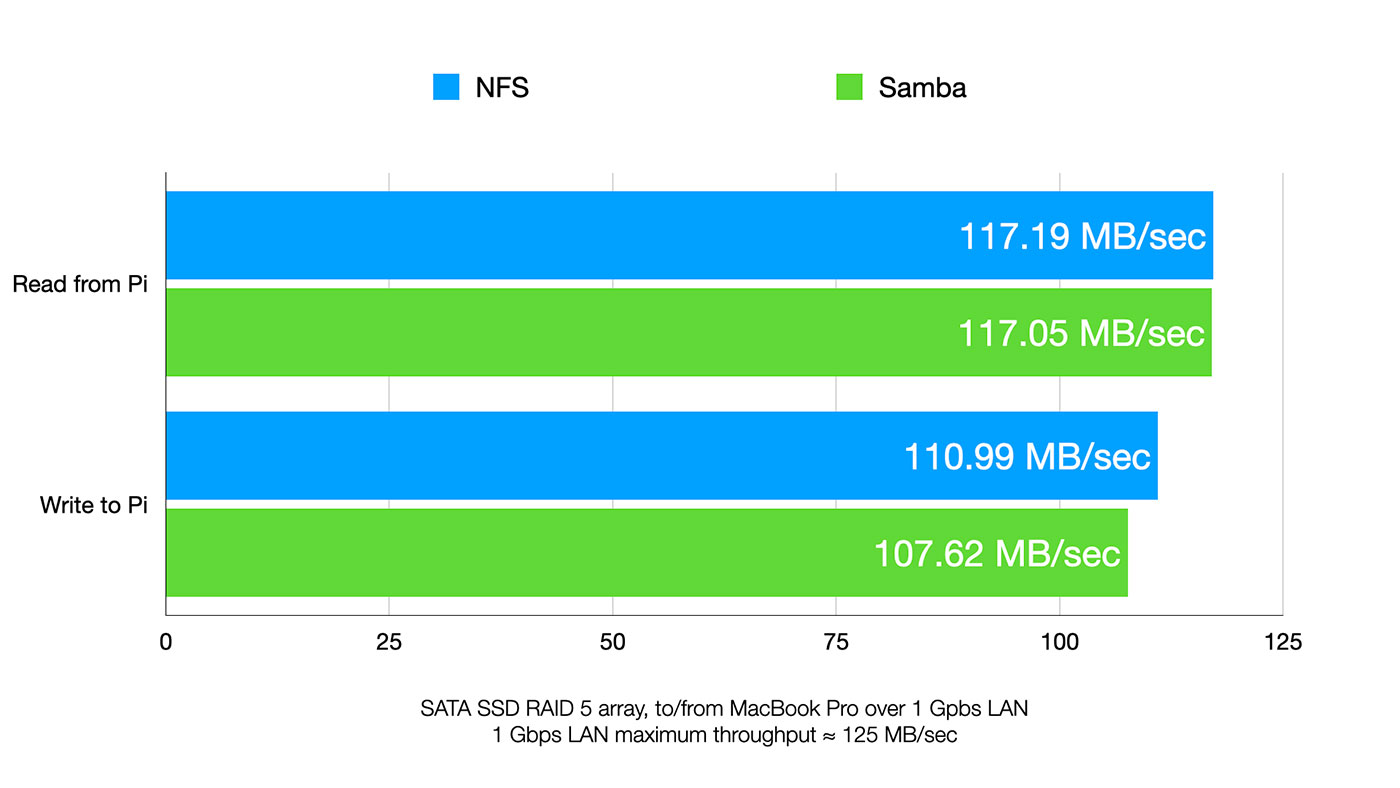

There are tons of other tests I could do, but I wanted to see how the drives performed for network storage, so I installed Samba and NFS and ran more benchmarks.

I was amazed both Samba and NFS got almost wire speed for reads, meaning the Pi was able to feed data to my Mac as fast as the gigabit interface could pump it through.

If you remember from my SATA RAID video, the fastest I could get with NFS was 106 MB/sec, and that speed fluctuated when packets were queued up while the Pi was busy sorting out software RAID.

With the storage controller handling the RAID, the Pi held a solid 117 MB/sec continuously, during 10+ GB file copies.
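To put 117 MB/sec in context, here is a rough estimate of the best-case TCP payload rate on gigabit Ethernet. It assumes a standard 1500-byte MTU, IPv4, and no TCP options, none of which are stated in this post, and it ignores NFS and Samba protocol overhead entirely.

```shell
#!/bin/sh
# Estimate maximum TCP payload throughput in MB/s for a link speed (Mbps) and MTU.
tcp_goodput_mb() {
    awk -v mbps="$1" -v mtu="$2" 'BEGIN {
        payload = mtu - 20 - 20            # minus IPv4 and TCP headers (no options)
        on_wire = mtu + 14 + 4 + 8 + 12    # Ethernet header, FCS, preamble, inter-frame gap
        printf "%.1f\n", mbps / 8 * payload / on_wire
    }'
}

tcp_goodput_mb 1000 1500   # gigabit Ethernet, 1500-byte MTU (prints 118.7)
```

Under those assumptions, 117 MB/sec is within a couple of percent of what the link can carry.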

PCIe Switches and 2.5 Gbps Networking

What about 2.5 GbE networking? I actually have two PCIe switches, and a few different 2.5 Gbps NICs I've already successfully used with the Pi. So I tried using both switches and a few NICs, and unfortunately I couldn't get enough power through the switch for both the NIC and the power-hungry storage controller.

I even pulled out my 600W PC PSU, but accidentally fried it when I tried shorting the PS_ON pin to power it up. Oops.

Watch me zap the PSU (and luckily, not myself) here.

So 2.5 Gbps performance will, unfortunately, have to wait until I get a new power supply.

Conclusion

Finally, I have true hardware SAS RAID running on the Raspberry Pi. Josh was actually first to do it, though, on 32-bit Pi OS.

The driver we were testing is still pre-release, though. If you run out and buy a MegaRAID controller today, you'll have some trouble getting it working on the Pi until the changes make it into the public driver.

Am I going to recommend you buy this $1000 HBA for a homemade Pi-based NAS? Maybe not. But there is a lower-end version you can get, the 9440-8i. Still has all the high-end features, and it's less than $200 used on eBay (Dell server pulls, mostly).

Even that might be overkill, though, if you just want to build a cheap NAS and only use SATA drives. I'll be covering more inexpensive NAS options soon, so subscribe to this blog's RSS feed, or subscribe to my YouTube channel to follow along!

