The adoption of NVM Express® SSDs in the data center is unleashing performance that was unprecedented just a few years ago. Since the inception of the SPDK project, the community has demonstrated that it’s possible to build systems capable of tens of millions of IOPS (I/O operations per second) using commodity hardware. Over the last couple of years, we have demonstrated systems capable of over 10 million IOPS (using a single CPU core!), but now we’re ready to significantly advance the state of the art. Yes, 80 Million IOPS.

We built a 2U Intel® Xeon® server system capable of 80 MILLION 512B random read I/O operations by combining the latest 3rd Generation Intel® Xeon® Scalable Processor (code-named Ice Lake) with Intel® Optane™ SSDs. The system has 2 CPUs with 64 PCIe Gen 4 lanes each, giving us plenty of PCIe lanes to connect NVMe SSDs and other I/O devices such as network cards.

| Configuration | |
| --- | --- |
| CPU | Intel® Xeon® Platinum 8380 Processor @ 2.30GHz |
| Memory | 16x 64GB 3200MHz DDR4 |
| Storage | 16x Intel® Optane™ SSD P5800X 800GB |
| Platform | Intel® Server Board M50CYP2SB2U |
| BIOS | SE5C6200.86B.2021.D40.2103100308 (ucode:0x261) |
| OS | Linux Fedora30 (Kernel 5.7.12) |
| Hyperthreading | ON |
| Turbo | ON |
| Turbo Frequency | 3.4GHz |

BIG NUMBERS

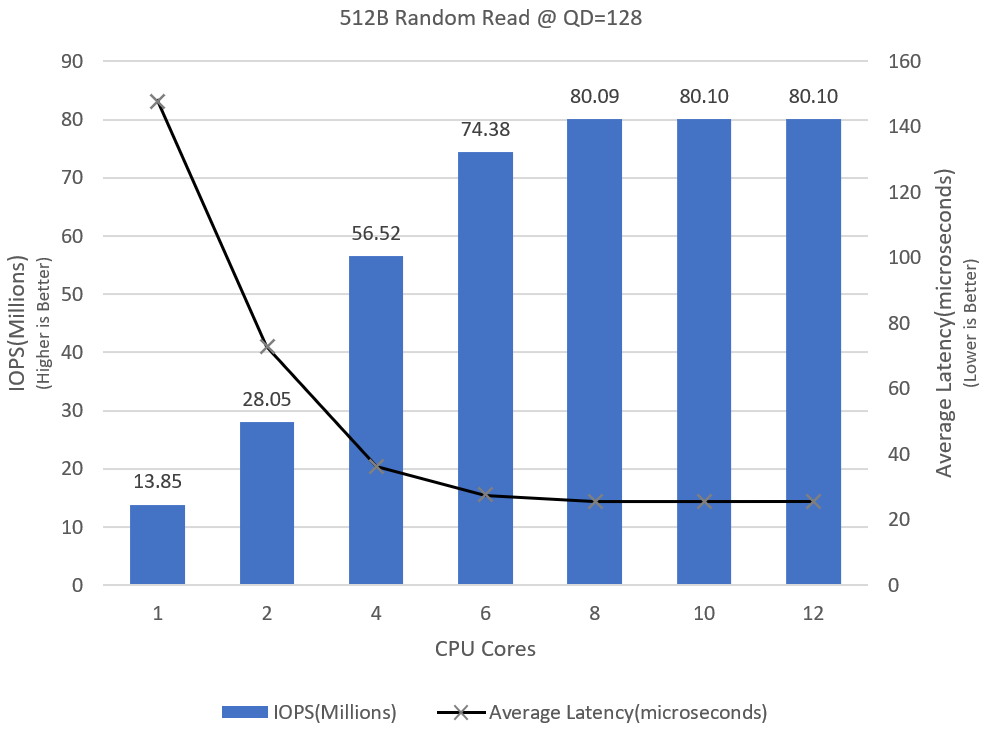

The graph above demonstrates the I/O throughput scalability of the SPDK NVMe driver as more CPU cores are added to perform I/O. We measured a stunning 13.85 MILLION 512B I/O per second on 1 CPU core. IOPS scaled linearly up to 4 CPU cores. Above 4 CPU cores, the scaling is non-linear as the IOPS approach the limits of the SSDs. At just 8 I/O processing cores, we measured over 80 MILLION IOPS at an amazing average latency of just 25.54 microseconds.

512B random reads at queue depth 128 to each device.
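As a quick sanity check, the reported latency and IOPS can be related through Little's law (outstanding I/Os = throughput × average latency). The sketch below assumes every one of the 16 SSDs is kept at its full queue depth of 128 for the whole run; that is an assumption about how the workload behaves, not something measured here.

```python
# Little's law: outstanding I/Os = throughput (IOPS) * average latency (seconds).
NUM_SSDS = 16            # from the configuration table
QUEUE_DEPTH = 128        # per device, from the workload description
AVG_LATENCY_US = 25.54   # reported average latency at 8 I/O cores

# Assumption: all queues stay full, so this many I/Os are always in flight.
outstanding = NUM_SSDS * QUEUE_DEPTH

# Throughput implied by the in-flight count and the average latency.
implied_iops = outstanding / (AVG_LATENCY_US * 1e-6)

print(f"outstanding I/Os: {outstanding}")
print(f"implied throughput: {implied_iops / 1e6:.2f} million IOPS")
```

Under that assumption, the implied throughput lands just above 80 million IOPS, which is consistent with the measured result.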

Is Your Storage Software ready for 80M IOPS?

One of the major challenges that organizations integrating NVMe SSDs face is that the performance and efficiency of the storage software are increasingly critical to overall storage system performance. Combining the latest Xeon Scalable processor platform with Intel® Optane™ SSDs may strain even flash-optimized architectures. If you are new to SPDK, you might be asking “where do I find a storage stack capable of 80 million IOPS?” The Storage Performance Development Kit (SPDK) provides a set of open-source tools and libraries for writing high-performance, scalable, user-mode storage applications. SPDK was designed from the beginning to support what we call “next generation” storage media, such as Intel® Optane™ devices. Designing for low latency and high throughput is what this project is all about. There is a lot of reading material over at SPDK’s main documentation page. In particular, check out the Introduction and Concepts sections.

The bedrock of SPDK is a user space, polled-mode, asynchronous, lockless NVMe driver that provides zero-copy, highly parallel access directly to an SSD from a user space application. Two years ago, the SPDK community highly optimized the SPDK NVMe driver to achieve over 10 million IOPS from one thread. We published a blog that explains the techniques and strategies used to achieve great performance. Additionally, the SPDK NVMe driver achieves linear IOPS scalability because there are no locks in the I/O path, and pinning I/O threads to CPU cores keeps data in the caches of the CPU cores accessing it.
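To make "polled-mode, asynchronous, lockless" a little more concrete, here is a toy model in Python. It is not the SPDK API: `ToyQueuePair`, `submit`, `poll`, and `run` are hypothetical names invented for this illustration, and the "device" simply completes requests in order when polled. The point is the shape of the loop: one thread owns its queue, keeps it filled up to the queue depth, and polls for completions instead of waiting on interrupts or taking locks.

```python
from collections import deque


class ToyQueuePair:
    """Toy per-thread I/O queue (hypothetical, not the SPDK API)."""

    def __init__(self, queue_depth):
        self.queue_depth = queue_depth
        self.in_flight = deque()  # submitted requests awaiting completion

    def submit(self, lba, callback):
        """Queue a request; return False if the queue is already full."""
        if len(self.in_flight) >= self.queue_depth:
            return False
        self.in_flight.append((lba, callback))
        return True

    def poll(self, max_completions):
        """Complete up to max_completions requests and run their callbacks."""
        done = 0
        while self.in_flight and done < max_completions:
            lba, callback = self.in_flight.popleft()
            callback(lba)
            done += 1
        return done


def run(total_ios, queue_depth):
    # The queue is owned by exactly one thread, so no lock is required.
    qpair = ToyQueuePair(queue_depth)
    completed = []
    next_lba = 0
    while len(completed) < total_ios:
        # Asynchronous submission: keep the queue as full as possible.
        while next_lba < total_ios and qpair.submit(next_lba, completed.append):
            next_lba += 1
        # Polled mode: check for completions rather than sleeping.
        qpair.poll(max_completions=32)
    return completed


if __name__ == "__main__":
    print(len(run(total_ios=1000, queue_depth=128)), "I/Os completed")
```

In a real deployment each I/O thread would be pinned to its own core with its own queue, which is why adding cores does not introduce contention in this model.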

You can find out more information about the design of the SPDK NVMe driver at the following documentation page, which includes the APIs you need to start integrating the driver into your storage application.

How About 4KB I/Os?

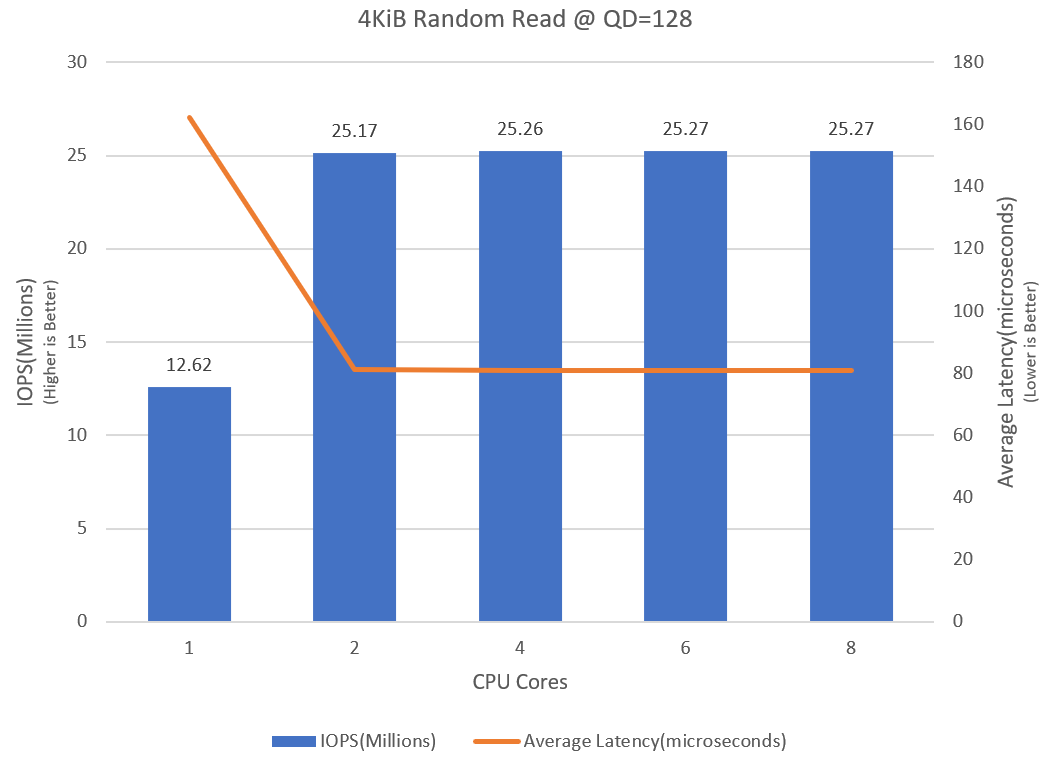

The graph above shows the scaling for 4KiB random read I/O. We measured 12.62 million 4KiB random read I/O operations per second on a single thread. When we added a second core, IOPS scaled linearly to 25.17 million.

4KiB random reads at queue depth 128 to each device.
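It can help to translate these IOPS figures into payload bandwidth. The short calculation below uses only the numbers reported in this post; the helper name `bandwidth_gb_per_s` is ours, and because the 512B result is stated as "over 80 million", that figure is a lower bound.

```python
KIB = 1024


def bandwidth_gb_per_s(iops, io_size_bytes):
    """Payload bandwidth in decimal GB/s for an IOPS rate and I/O size."""
    return iops * io_size_bytes / 1e9


bw_512b = bandwidth_gb_per_s(80e6, 512)               # 8 cores, 512B reads
bw_4k_1core = bandwidth_gb_per_s(12.62e6, 4 * KIB)    # 1 core, 4KiB reads
bw_4k_2core = bandwidth_gb_per_s(25.17e6, 4 * KIB)    # 2 cores, 4KiB reads

# How close the 2-core 4KiB result is to exactly twice the 1-core result.
scaling_efficiency = 25.17 / (2 * 12.62)

print(f"512B @ 80M IOPS:     {bw_512b:.2f} GB/s")
print(f"4KiB @ 12.62M IOPS:  {bw_4k_1core:.2f} GB/s")
print(f"4KiB @ 25.17M IOPS:  {bw_4k_2core:.2f} GB/s")
print(f"2-core scaling efficiency: {scaling_efficiency:.1%}")
```

The 4KiB workload moves far more data per I/O, so two cores are already pushing roughly 100 GB/s of payload, while the 512B workload stresses the I/O rate rather than the bandwidth.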

Test Methodology

- The data was collected using the SPDK NVMe perf tool in the examples/nvme/perf directory of the SPDK repository. The perf tool was created because off-the-shelf performance tools were not designed to get to this IOPS rate. SPDK’s NVMe perf tool has a comparatively reduced feature set, but is designed specifically to get to this level of performance.

- The 1 I/O core test was performed using a CPU core on NUMA node 0. However, as we scaled the number of I/O cores to 2, 4, 6, 8, 10 and 12, we evenly split the CPU cores between the 2 NUMA nodes and configured the perf jobs to use SSDs on the same NUMA node as the CPU cores running perf jobs.

- Each workload was run for 5 minutes and repeated three times at each CPU count.
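The core placement described above can be sketched as follows. The core numbering (cores 0-39 on NUMA node 0, 40-79 on node 1) is a hypothetical layout chosen for illustration, and `split_cores` and `core_mask` are helper names we made up; check the real topology on your system before reusing anything like this.

```python
def split_cores(total_cores, node_cores):
    """Pick I/O cores, split evenly across NUMA nodes.

    node_cores maps NUMA node id -> list of core ids on that node.
    A single-core run uses node 0 only, as in the methodology above.
    """
    if total_cores == 1:
        return {0: node_cores[0][:1]}
    nodes = sorted(node_cores)
    if total_cores % len(nodes) != 0:
        raise ValueError("core count must divide evenly across NUMA nodes")
    per_node = total_cores // len(nodes)
    if any(per_node > len(node_cores[n]) for n in nodes):
        raise ValueError("not enough cores on a NUMA node")
    return {n: node_cores[n][:per_node] for n in nodes}


def core_mask(cores):
    """Hexadecimal bitmask with one bit set per selected core id."""
    mask = 0
    for core in cores:
        mask |= 1 << core
    return hex(mask)


# Hypothetical topology: 40 cores per socket, numbered contiguously.
NODE_CORES = {0: list(range(0, 40)), 1: list(range(40, 80))}

if __name__ == "__main__":
    for n in (1, 2, 4, 6, 8, 10, 12):
        plan = split_cores(n, NODE_CORES)
        selected = [c for cores in plan.values() for c in cores]
        print(f"{n:2d} cores -> {plan} mask={core_mask(selected)}")
```

Each job would then be pointed only at SSDs attached to the same NUMA node as its cores, so no I/O crosses the inter-socket link.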

Wrapping Up

SPDK is enabling best-in-class performance via a growing list of open source libraries and applications covering storage, storage networking, and storage virtualization. SPDK follows a fully open development model with all code BSD licensed, allowing community members to integrate any or all of the components under the most permissive licensing terms. An increasing number of companies and projects are contributing to and adopting SPDK to take advantage of the advancements in NVMe solid state media in hyperconverged and disaggregated storage usage models. Join the SPDK open source community’s pursuit to build storage software for systems capable of 100 million IOPS!

Notices & Disclaimer

Performance varies by use, configuration and other factors. Learn more at https://ift.tt/31TO3mc. Performance results are based on testing as of dates shown in configurations and may not reflect all publicly available updates. See configuration details. No product or component can be absolutely secure. Your costs and results may vary. Intel technologies may require enabled hardware, software or service activation. © Intel Corporation. Intel, the Intel logo, and other Intel marks are trademarks of Intel Corporation or its subsidiaries. Other names and brands may be claimed as the property of others.

Configuration Details

Tested by Intel as of 3/17/2021. System: 1-node, 2x Intel® Xeon® Platinum 8380 Processor (40 cores, HT=On, Turbo=ON), Total Memory 1024 GB (16 slots/ 64GB/ 3200 MHz), BIOS: SE5C6200.86B.2021.D40.2103100308 (ucode:0x261), Storage: 16x Intel® Optane™ SSD 800GB P5800X. Software: Fedora 30, Linux Kernel 5.7.12, gcc 9.3.1 compiler, SPDK 20.10. Workload: 512B/4096B Random Read @ QD=128
