First newsletter of the year; quite a bit has happened in the world since I wrote the last one. I wrote a few thousand words on those subject(s) and decided to park them, since the last thing we need on this planet is another hot take. Anyway this is a newsletter so I will try to focus on providing you with information you probably do not already have.

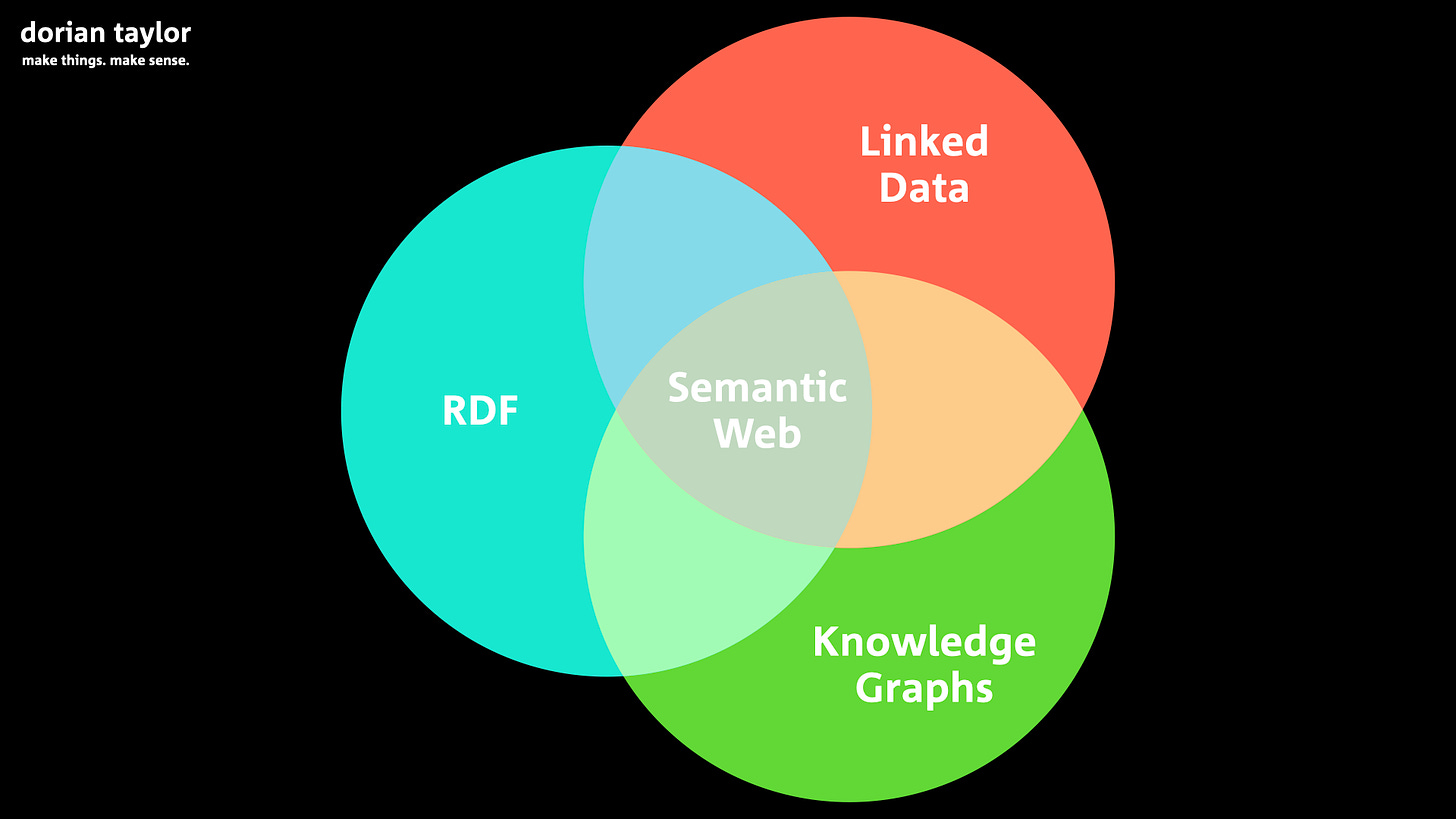

I have been thinking about data again recently, as I had been helping a client with some “personal training” sessions on gaining proficiency with linked data, a set of technical standards I have been using to represent computable information for over a decade and a half. After our first session he asked me what the main challenges were, which inadvertently sent me on a several-week-long✱ inquest into why I’ve stuck so long with what has been derided by the mainstream tech industry as a flop. The result was a notion I hadn’t previously paid that much attention to.

✱ During this time I also incurred an exceptionally painful and time-consuming Omicron-lockdown-induced knee injury, and its attendant recovery. I was genuinely surprised by how disruptive it was to my routine.

The big thing linked data does well is something the big tech firms don’t actually want: independence from them. Gaining proficiency in techniques for ultimately giving all your users’ data away, in that scene at least, is unlikely to be rewarded. Radical portability and interoperability of data is an issue of values, and ultimately a political agenda. Espousing linked data is a statement about the kind of world you want to live in.

To restate my own principles, or at least a couple of them:

- A business relationship is likely to be more equitable if there are viable alternatives to it,
- Computers could be doing way more work than they currently do.

OK So I Made a Video

My weeks-long inquest culminated in something akin to a video essay. It is just shy of 27 minutes long, and I consider it a rough draft of language I intend to successively refine (and considerably shorten) over a much longer interval. The audience I was aiming for was on the order of “educated white-collar professional who regularly uses a computer for work, and is potentially, but not necessarily, a tech industry insider”, so a big chunk of the video is exposition à la “what is data, anyway?”, though I’m trying to take it from a quasi-philosophical angle, rather than a straight-up didactic one.

A revelatory moment came while I was trying to answer my client’s question about the frustrations of linked data. Aside from the generally poor (albeit steadily improving) available tooling, perhaps the most frustrating thing about it is its neglect by the one constituency you’d think would have been all over it: mainstream Web development.

My epiphany, to spoil the video a little, is that mainstream Web development’s nigh-universal disdain for linked data is actually a case of mismatched values. Arguments against linked data are fairly consistently couched in terms of it being unnecessarily complicated, but “necessary” is a value judgment. Linked data makes it possible to completely decouple computable information from the system that ordinarily houses it. Longitudinal evidence suggests typical Web-based product companies don’t actually want that.

I have decided that “machine-readable” information is insufficiently expressive, because anything you put into a computer is to some degree being “read” by a machine. Not all machine-readable information, however, is directly computable, to the extent that it has enough formal structure and semantics to be fed straight into a (deterministic) computational operation. So the distinction, I believe, is important.

It makes a heck of a lot of sense, really: if you’re rewarded by your data-siloing, surveillance-arbitraging employer for cranking out features that silo more data, are you going to get promoted for getting good at tech that’s going to make things slower to ship✱, in order to give all the data away? Of course not.

✱ Initially, while you set it up, but then it gets faster to work with it than not to.

And that really is the issue: organizations that are in the business of hoarding data are not interested in getting any good at sharing it. After all, gatekeeping your customers’ data is a great way to ensure their loyalty. I, however, anticipate that as people get more sophisticated, there will at least be a niche market who will be loyal because they can access—and remove—100% of their data. And that’s saying nothing about all these other domains:

- governments
- universities
- GLAMR (galleries, libraries, archives, museums, records)
- journalistic outlets (you know, news media)
- think tanks and other NGOs
- unions and trade/professional associations
- …et cetera.

These kinds of entities, either abandoning the tendency to jealously hoard information or never exhibiting the trait in the first place (ha ha), could treat the radical interoperability of linked data as a strength rather than a weakness, and enjoy all the mundane everyday benefits of a much more organized and accessible information ecosystem.

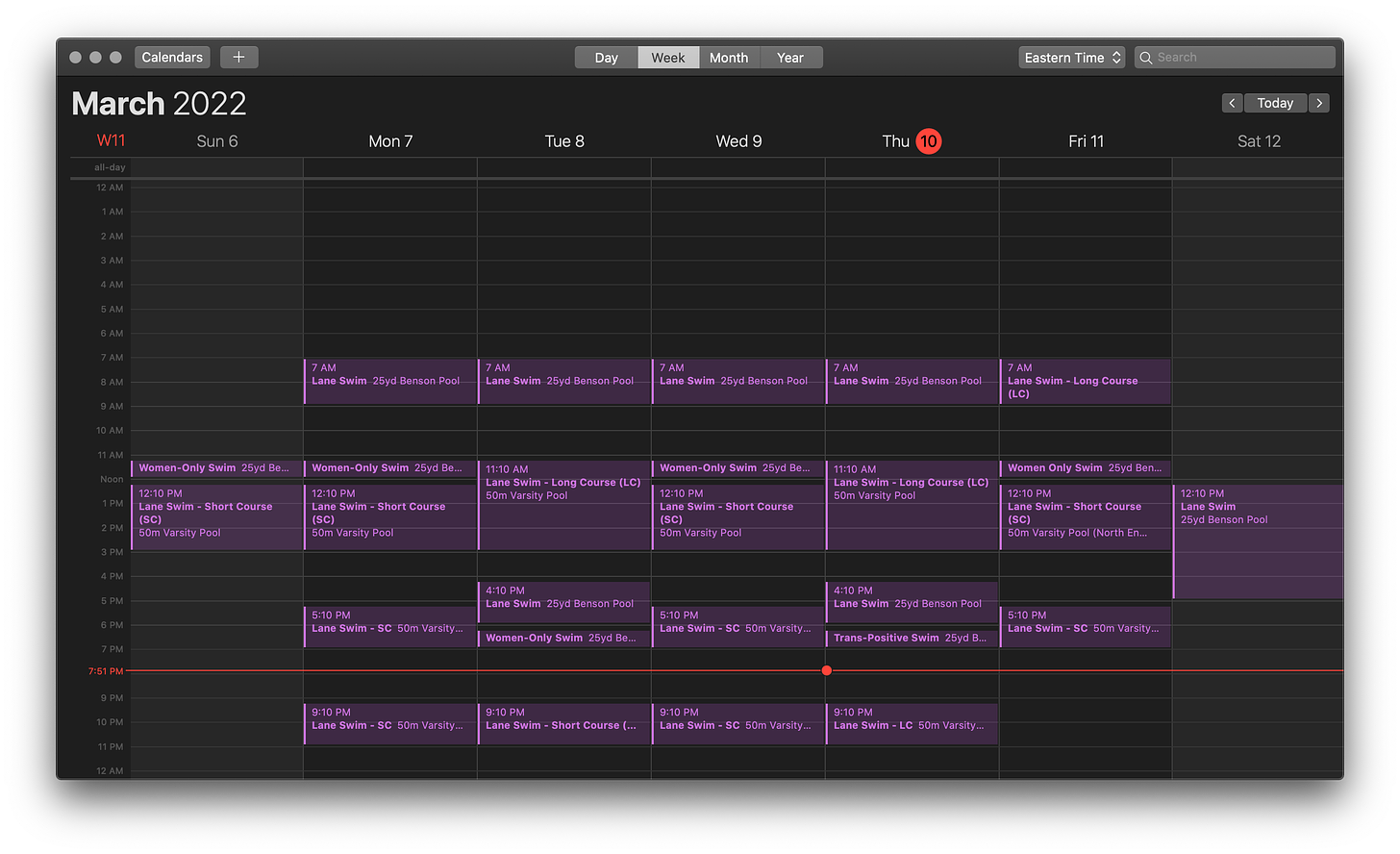

Scraping the Pool

The University of Toronto Athletic Centre houses the only long-course pool in a 15-mile radius. Memberships are scarce and expensive, and the various teams take precedence, leaving ordinary passholders with the dregs. The pool in its full 50-metre configuration is likewise only available for a few hours a couple days a week. On Thursday I got boned because there was a swim meet going on that you wouldn’t have known about if you hadn’t dug into a page buried in one of the half-dozen U of T athletics department websites to see an ad-hoc oh-by-the-way message tacked to the top of the regular schedule, the latter hidden by an “accordion”-style click-to-reveal webpage feature.

My remark here is that an event like a swim meet is planned months in advance; the department knew it was happening and almost certainly has a master calendar somewhere that features months’ worth of programming for every facility under its control. So a couple questions:

- Why so stingy with that data?
- Why not publish it in a format that people will actually use?

This information is not very useful on some random, deep-linked webpage, but it is useful in my calendar, you know, where I schedule all of my other activities. Since this isn’t the first time I’ve been surprised by scheduling changes at this facility, I decided to do just this.

The result was a one-off scraper script that consumed said accordionized schedule page and turned it into an iCal file. It took me a little over two hours to write.

I even streamed most of the process, but had to bail for a meeting before I finished.
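For anyone who has not seen this kind of thing, here is a minimal sketch of its general shape in Python. It is not the script described above: the sample markup, the `slot` class, the `data-start` and `data-end` attributes, the `PRODID`, the UID scheme and the dates are all invented for illustration, and a real schedule page will be considerably messier. It only shows the two halves of the job, pulling structured rows out of HTML and writing them back out as iCalendar text.

```python
from datetime import datetime, timezone
from html.parser import HTMLParser

# Hypothetical markup; the real schedule page will not look like this.
SAMPLE_HTML = """
<ul>
  <li class="slot" data-start="2022-03-07T07:00" data-end="2022-03-07T08:30">Lane swim (50 m)</li>
  <li class="slot" data-start="2022-03-08T12:00" data-end="2022-03-08T13:00">Lane swim (25 m)</li>
</ul>
"""


class SlotParser(HTMLParser):
    """Collect (start, end, title) tuples from <li class="slot"> elements."""

    def __init__(self):
        super().__init__()
        self.slots = []
        self._current = None  # [start, end, text parts] while inside a slot

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "li" and attrs.get("class") == "slot":
            self._current = [attrs["data-start"], attrs["data-end"], []]

    def handle_data(self, data):
        if self._current is not None:
            self._current[2].append(data)

    def handle_endtag(self, tag):
        if tag == "li" and self._current is not None:
            start, end, parts = self._current
            self.slots.append((start, end, "".join(parts).strip()))
            self._current = None


def escape_text(value):
    """Escape the characters that iCalendar TEXT values require escaping."""
    return (value.replace("\\", "\\\\").replace(";", "\\;")
                 .replace(",", "\\,").replace("\n", "\\n"))


def to_ical(slots):
    """Render slots as a bare-bones iCalendar document with floating local times."""
    stamp = datetime.now(timezone.utc).strftime("%Y%m%dT%H%M%SZ")
    lines = ["BEGIN:VCALENDAR", "VERSION:2.0", "PRODID:-//example//pool-scraper//EN"]
    for start, end, title in slots:
        dtstart = datetime.fromisoformat(start).strftime("%Y%m%dT%H%M%S")
        dtend = datetime.fromisoformat(end).strftime("%Y%m%dT%H%M%S")
        lines += [
            "BEGIN:VEVENT",
            f"UID:{dtstart}-pool@example.invalid",  # made-up UID scheme
            f"DTSTAMP:{stamp}",
            f"DTSTART:{dtstart}",  # no time zone: interpreted as local time
            f"DTEND:{dtend}",
            f"SUMMARY:{escape_text(title)}",
            "END:VEVENT",
        ]
    lines.append("END:VCALENDAR")
    return "\r\n".join(lines) + "\r\n"  # iCalendar lines end in CRLF


parser = SlotParser()
parser.feed(SAMPLE_HTML)
print(to_ical(parser.slots))
```

The parsing half is the part that breaks whenever the page layout changes; the iCalendar half stays the same for as long as you keep using it.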

Now, I may be proximately picking on the U of T athletics department (I have contacted them separately), but I’m really trying to make a statement about open data. They almost certainly possess this very useful situational information in a structured form, which can be manipulated into other structured forms suitable for, say, anybody who is interested in that information to click once and have an up-to-date copy in their possession, on their phone or laptop, in perpetuity. Or, alternatively, for those who don’t want to commit, a format that will take advantage of Google’s enhanced search results. This, instead of every user of the facility hunting down some ephemeral✱ webpage every day to make sure the space they want to use is available before putting in the effort to commute there.

✱ I say “ephemeral” here because you know bookmarking that page is going to be pointless because somebody is going to move or rename it, and probably soon.
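As an illustration of the search-result format, events are commonly described to search engines with schema.org markup embedded in the page as JSON-LD. The sketch below builds such a description from one calendar entry. The function name `event_jsonld` and every value passed to it are hypothetical, and whether a given search engine actually renders the result depends on its own requirements, which typically ask for more properties than the handful shown here.

```python
import json


def event_jsonld(name, start, end, place):
    """Build a schema.org Event description as a JSON-LD string."""
    doc = {
        "@context": "https://schema.org",
        "@type": "Event",
        "name": name,
        "startDate": start,  # ISO 8601 date-time
        "endDate": end,
        "location": {"@type": "Place", "name": place},
    }
    return json.dumps(doc, indent=2)


# Hypothetical values, for illustration only.
markup = event_jsonld(
    "Swim meet (pool closed to passholders)",
    "2022-03-10T08:00-05:00",
    "2022-03-10T18:00-05:00",
    "Athletic Centre 50 m pool",
)

# The JSON-LD is embedded in the page inside a script element.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The same master-calendar row that feeds the iCal file can feed this, which is the point: one structured source, several representations.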

The median person I encounter often isn’t even aware that this is a thing you can do, let alone has an informed opinion about whether it’s worth the effort. They tend to have the right instinct though: it probably isn’t.

The way to determine if a software intervention is worth doing is to estimate a value per run✱. This will tell you how many times the software will have to be executed in order to offset what it cost to create. From there you can determine the likelihood that scenario will be met or exceeded.

✱ Or maybe not value per run per se: we can imagine scenarios—like this one—where a program is run once but produces an output that is consumed multiple times, so maybe value per use (of the program’s output) is more accurate.
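The break-even arithmetic can be sketched in a few lines of Python. The function name `break_even_uses` and the idea of subtracting a per-use upkeep cost are assumptions made for the example, and the two minutes saved per use is a made-up figure; only the two hours of build time comes from the story above.

```python
import math


def break_even_uses(build_minutes, minutes_saved_per_use, upkeep_minutes_per_use=0.0):
    """Number of uses needed before the time saved covers the time spent building.

    Returns None when each use saves no more than it costs to maintain,
    in which case the software never pays for itself.
    """
    net = minutes_saved_per_use - upkeep_minutes_per_use
    if net <= 0:
        return None
    return math.ceil(build_minutes / net)


# Two hours to build; assume (hypothetically) each use of the output saves 2 minutes.
print(break_even_uses(120, 2))  # 60 uses
```

Sixty uses is a lot for one person and one semester, and not many at all if the output is shared among everyone who uses the facility.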

It took me two hours—including rummaging for materials and refreshing myself on the relevant specs—to write a program intended to be run once. Its objective was to produce a file I can import into iCal that will be valid for probably (since I wrote it late in the semester) another month. I will be its only user. Indeed, it almost certainly took longer to write this code than it would have taken to just transcribe the information by hand directly into iCal.

That said, I should be able to run the same code when they release the new schedule for the following semester. Repeated runs are really where programming makes its gains. Since the schedule was likely laid out by hand in an ad-hoc manner, I don’t trust that it will have the same layout or likely even be the same page, so my code will probably need to be modified, albeit likely only a few minutes’ worth. Unless they mangle the actual source content too badly (like put it in an image or PDF or something), I could conceivably keep up this little cat-and-mouse game indefinitely.

Still, though, my little intervention can’t interpret changes to the schedule (which the comms people decided to express as freeform text), so I still have to personally monitor this webpage and adjust the file, although instead of every day, probably only around once a week. I could of course take this on as a service to others and republish the iCal file on one of my own Web properties, but they would have to find it for it to be of any use to them, and this is something I only have so much energy to advertise.

This mundane example gives us a hint toward valuating the development of this particular information resource. In order to avoid being disappointed by sporadic changes to the schedule, every user of the pool (hundreds? thousands?) has to check every day they intend to swim (up to 7 days a week but realistically less), which means they need to find (or bookmark) the page, and probably set up some kind of reminder to do so. That’s each individual person setting up their own personal routine and infrastructure to compensate for this information not being delivered to them.

My intervention was a one-off expenditure of my own time to write the code, plus what will likely end up as an ongoing refresh✱, say, once a week, to keep abreast of changes, plus a full refresh once a semester, plus whatever effort it costs me to promulgate† the existence of this service.

✱ I could probably create another little program to monitor the page for changes to the schedule so I don’t have to go and check every time, but rather have the changes sent to me. That, though, is a much bigger job, at least to do it right. Since it is also in support of a suboptimal strategy, it amounts to throwing good effort after bad.

† Note I am probably not going to actually do this part, because it’s really the U of T’s job. They can even have my code if they want.
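The crude version of the page monitor mentioned in the first footnote might look something like the sketch below, which assumes the page text has already been fetched and that the previous fingerprint is stored somewhere. Both function names are hypothetical, and fetching, storage and notification are left out entirely.

```python
import hashlib
import re


def fingerprint(page_text):
    """Hash the page text after collapsing whitespace, so reflowed markup is not a change."""
    normalized = re.sub(r"\s+", " ", page_text).strip()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def has_changed(page_text, last_fingerprint):
    """Return (changed, new_fingerprint) for freshly fetched page text."""
    current = fingerprint(page_text)
    return current != last_fingerprint, current


# Made-up notices, for illustration only.
seen = fingerprint("Pool closed Thursday for a swim meet")
print(has_changed("Pool closed Friday for a swim meet", seen)[0])  # True
```

Note what this does not do: it says that something on the page changed, not what changed or whether it affects the schedule. Interpreting the freeform text is the part that makes doing it right a much bigger job.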

The benefit of an information resource is almost always to save a certain number of people a certain amount of time, over a certain amount of time. Setting that information resource up, and then maintaining it afterward, costs whatever it costs. The question is: is it worth it?

We can shrink the cost from everybody every time to just me once in a while with a modicum of effort (assuming burdening me in perpetuity with this volunteer work counts as a “modicum”). That’s a time savings of several orders of magnitude, but what would it take to also relieve me (or whoever) of this burden? Probably not much more than the initial effort, if it was done in the right place.

This is because where you intervene in an information system is significant: information tends to get lost the farther you get from its source, like a game of Telephone. Assuming the master schedule is digital (it would be bonkers if it weren’t), it must, in order to be at all useful, possess the appropriate structure to map to different representations like an iCal file, or search engine metadata. The only question is whether or not you can access it, and do so sufficiently cleanly. If the originating data source doesn’t expose the full structure, you’re stuck with scraping.

Scraping, which is what I did, is almost always possible, with enough effort, enough of an appetite for intermittent failure, and enough of a willingness to leave certain features or capabilities on the table. It’s also a fundamentally degenerate strategy, because the underlying resource can simply be changed out from under you. In this way scraping can be viewed either as plucky resistance, or an admission of defeat, since obtaining the genuine article is not a technical issue, but a political one. Namely, there’s an entity that has the real deal in their possession, and for one reason or another, they aren’t giving it to you. In an institutional setting, this is an issue of being a stakeholder to a procurement process. But you can’t advocate for outcomes in that scenario if you don’t know what to advocate for.

When I say “computers could be doing way more work than they currently do”, I’m actually referring to certain patterns of design decisions in computer systems that inhibit—or prohibit—certain outcomes. You can’t anticipate every use case for an information system over an arbitrarily long time horizon, but you can choose systems that expose their data semantics as a matter of course. That way, you can commission small interventions like the one I did, except do so much closer to the source.

Software—especially enterprise software—is still sold in terms of features and functionality: whatever the system “lets” you do. The irony is that so much software, enterprise and otherwise, boils down to CRUD (create, read, update, delete) operations, and the user interfaces to perform those operations. There tend to be very few verbs besides. So the menu of things the software “lets” you do can really be framed as the complement of what it actively prevents you from doing. Software that fully exposes its data “lets” you do anything, because it’s the data that matters.

