This week, at the ACM CCS 2020 conference, researchers from UC Riverside and Tsinghua University announced a new attack against the Domain Name System (DNS) called SAD DNS (Side channel AttackeD DNS). This attack leverages recent features of the networking stack in modern operating systems (like Linux) to allow attackers to revive a classic attack category: DNS cache poisoning. As part of a coordinated disclosure effort earlier this year, the researchers contacted Cloudflare and other major DNS providers and we are happy to announce that 1.1.1.1 Public Resolver is no longer vulnerable to this attack.

In this post, we’ll explain what the vulnerability was, how it relates to previous attacks of this sort, what mitigation measures we have taken to protect our users, and what directions the industry should consider to prevent this class of attacks going forward.

DNS Basics

The Domain Name System (DNS) is what allows users of the Internet to get around without memorizing long sequences of numbers. What’s often called the “phonebook of the Internet” is more like a helpful system of translators that take natural language domain names (like blog.cloudflare.com or gov.uk) and translate them into the native language of the Internet: IP addresses (like 192.0.2.254 or [2001:db8::cf]). This translation happens behind the scenes so that users only need to remember hostnames and don’t have to get bogged down with remembering IP addresses.

DNS is both a system and a protocol. It refers to the hierarchical system of computers that manage the data related to naming on a network and it refers to the language these computers use to speak to each other to communicate answers about naming. The DNS protocol consists of pairs of messages that correspond to questions and responses. Each DNS question (query) and answer (reply) follows a standard format and contains a set of parameters that contain relevant information such as the name of interest (such as blog.cloudflare.com) and the type of response record desired (such as A for IPv4 or AAAA for IPv6).

The DNS Protocol and Spoofing

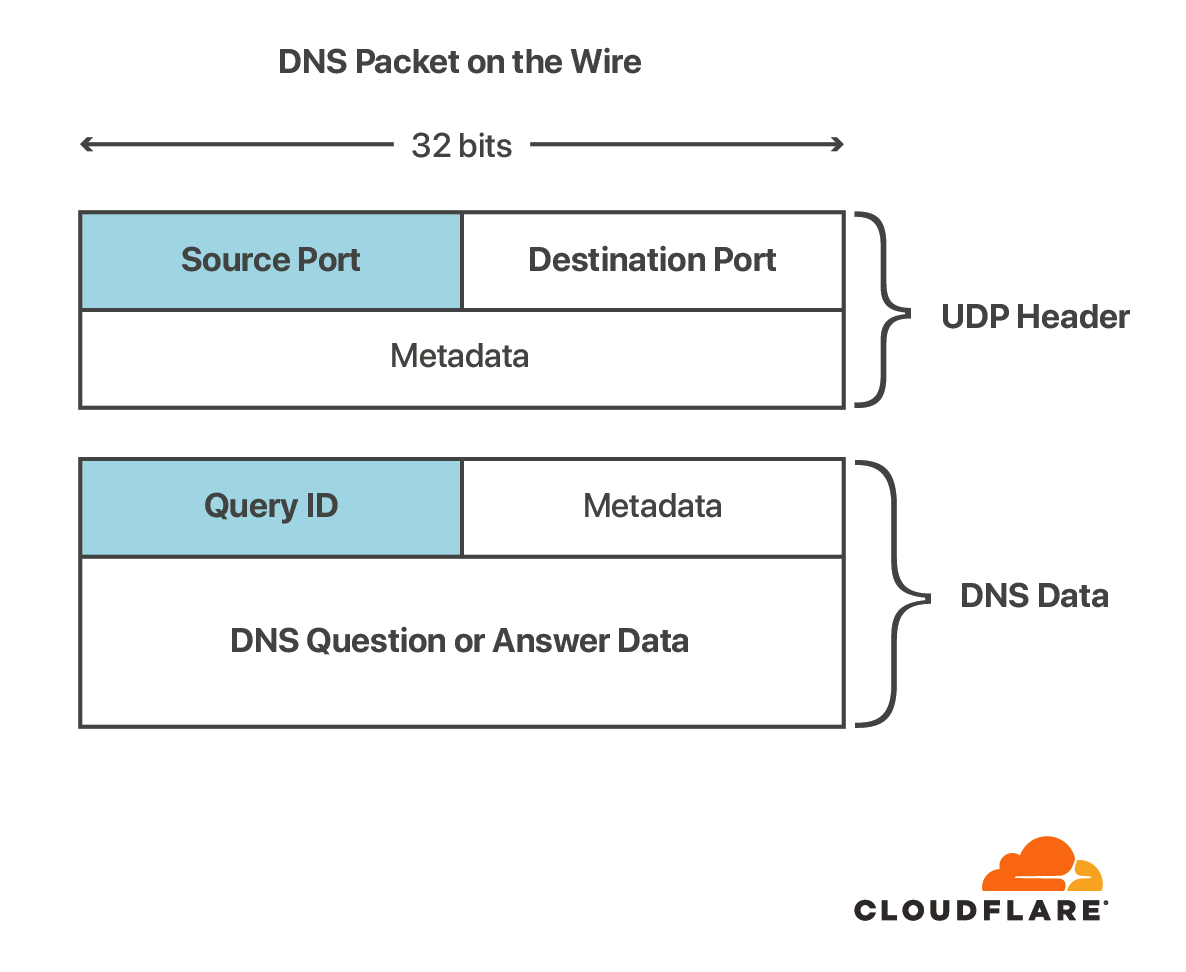

These DNS messages are exchanged over a network between machines using a transport protocol. Originally, DNS used UDP, a simple stateless protocol in which messages are endowed with a set of metadata indicating a source port and a destination port. More recently, DNS has adapted to use more complex transport protocols such as TCP and even advanced protocols like TLS or HTTPS, which incorporate encryption and strong authentication into the mix (see Peter Wu’s blog post about DNS protocol encryption).

Still, the most common transport protocol for message exchange is UDP, which has the advantages of being fast, ubiquitous and requiring no setup. Because UDP is stateless, the pairing of a response to an outstanding query is based on two main factors: the source address and port pair, and information in the DNS message. Given that UDP is both stateless and unauthenticated, anyone, and not just the recipient, can send a response with a forged source address and port, which opens up a range of potential problems.

Since the transport layer is inherently unreliable and untrusted, the DNS protocol was designed with additional mechanisms to protect against forged responses. The first two bytes in the message form a message or transaction ID that must be the same in the query and response. When a DNS client sends a query, it will set the ID to a random value and expect the value in the response to match. This unpredictability introduces entropy into the protocol, which makes it less likely that a malicious party will be able to construct a valid DNS reply without first seeing the query. Other fields, such as the DNS query name and query type, are also used to pair query and response, but these are trivial to guess and don’t introduce any additional entropy.
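To make the pairing rule concrete, here is a minimal sketch of how a client might build a query with a random 16-bit ID and decide whether a response belongs to it. The helper names (`encode_name`, `build_query`, `response_matches`) are illustrative and not taken from any particular resolver. The matching is deliberately simplified: it compares raw bytes and ignores details such as case-insensitive name comparison.

```python
import secrets
import struct

def encode_name(name: str) -> bytes:
    """Encode a hostname as DNS labels: a length byte before each label, then a zero byte."""
    out = b""
    for label in name.rstrip(".").split("."):
        raw = label.encode("ascii")
        out += bytes([len(raw)]) + raw
    return out + b"\x00"

def build_query(name: str, qtype: int = 1):
    """Return (transaction_id, wire_bytes) for a query; qtype 1 is an A record."""
    txid = secrets.randbelow(1 << 16)  # the only unpredictable field: 16 bits
    # Header fields: ID, flags (recursion desired), QDCOUNT=1, ANCOUNT, NSCOUNT, ARCOUNT
    header = struct.pack("!HHHHHH", txid, 0x0100, 1, 0, 0, 0)
    question = encode_name(name) + struct.pack("!HH", qtype, 1)  # class IN
    return txid, header + question

def response_matches(query: bytes, response: bytes) -> bool:
    """Simplified check: same ID, QR bit set, and the question section echoed back."""
    if len(response) < len(query):
        return False
    same_id = response[:2] == query[:2]
    is_response = bool(response[2] & 0x80)
    same_question = response[12:len(query)] == query[12:]
    return same_id and is_response and same_question
```

An off-path attacker can guess the name and type easily, so in this sketch the two ID bytes are the only thing standing between a forged packet and acceptance.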

Those paying close attention to the diagram may notice that the amount of entropy introduced by this measure is only 16 bits, which means that there are just 65,536 possibilities to go through to find the matching reply to a given query. More on this later.

The DNS Ecosystem

DNS servers fall into one of a few main categories: recursive resolvers (like 1.1.1.1 or 8.8.8.8) and nameservers (like the DNS root servers or Cloudflare Authoritative DNS). There are also elements of the ecosystem that act as “forwarders” such as dnsmasq. In a typical DNS lookup, these DNS servers work together to complete the task of delivering the IP address for a specified domain to the client (the client is usually a stub resolver - a simple resolver built into an operating system). For more detailed information about the DNS ecosystem, take a look at our learning site. The SAD DNS attack targets the communication between recursive resolvers and nameservers.

Each of the participants in DNS (client, resolver, nameserver) uses the DNS protocol to communicate with the others. Most of the latest innovations in DNS revolve around upgrading the transport between users and recursive resolvers to use encryption. Upgrading the transport protocol between resolvers and authoritative servers is a bit more complicated as it requires a new discovery mechanism to instruct the resolver when to use (and when not to use) a more secure channel. Aside from a few examples like our work with Facebook to encrypt recursive-to-authoritative traffic with DNS-over-TLS, most of these exchanges still happen over UDP. This is the core issue that enables this new attack on DNS, and one that we’ve seen before.

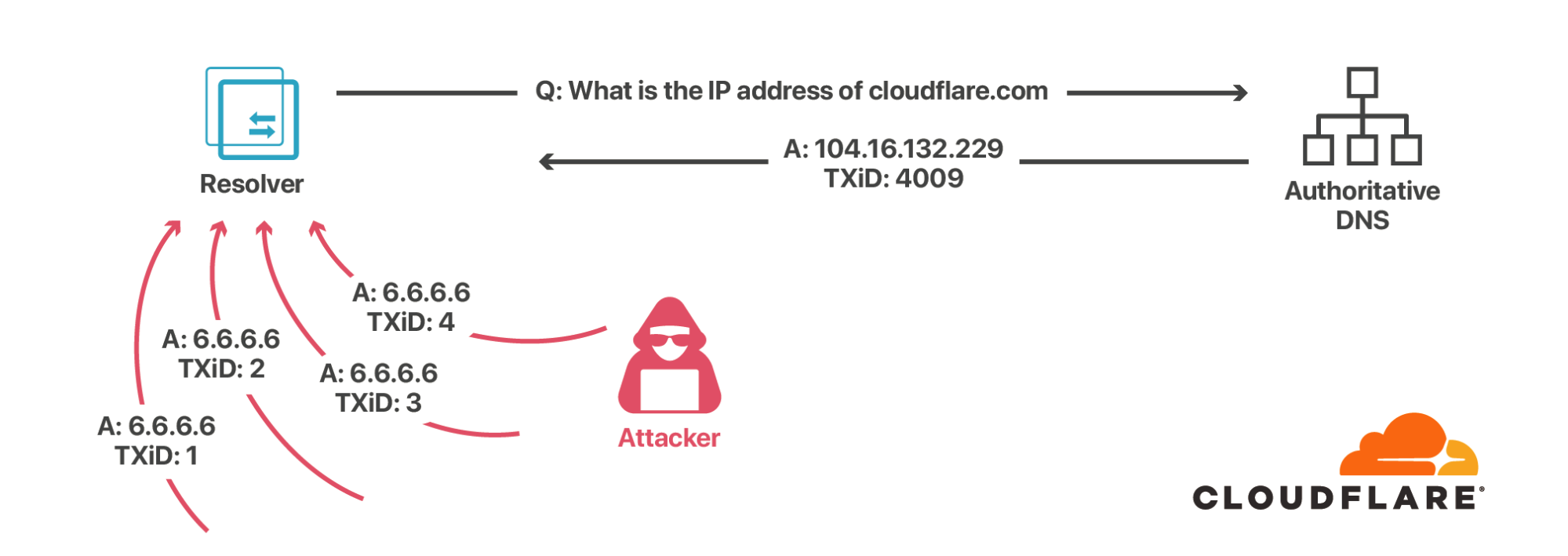

Kaminsky’s Attack

Prior to 2008, recursive resolvers typically used a single open port (usually port 53) to send and receive messages to authoritative nameservers. This made guessing the source port trivial, so the only variable an attacker needed to guess to forge a response to a query was the 16-bit message ID. The attack Kaminsky described was relatively simple: whenever a recursive resolver queried the authoritative name server for a given domain, an attacker would flood the resolver with DNS responses for some or all of the 65 thousand or so possible message IDs. If the malicious answer with the right message ID arrived before the response from the authoritative server, then the DNS cache would be effectively poisoned, returning the attacker’s chosen answer instead of the real one for as long as the DNS response was valid (called the TTL, or time-to-live).

For popular domains, resolvers contact authoritative servers once per TTL (which can be as short as 5 minutes), so there are plenty of opportunities to mount this attack. Forwarders that cache DNS responses are also vulnerable to this type of attack.

In response to this attack, DNS resolvers started doing source port randomization and careful checking of the security ranking of cached data. To poison these updated resolvers, forged responses would not only need to guess the message ID, but they would also have to guess the source port, bringing the number of guesses from the tens of thousands to over a billion. This made the attack effectively infeasible. Furthermore, the IETF published RFC 5452 on how to harden DNS from guessing attacks.
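The effect of source port randomization on the attacker's odds can be worked out with simple arithmetic. The sketch below assumes the attacker sends distinct guesses within a single race window and that every value of each field is equally likely. It also treats the full 16-bit port range as usable, which overstates what real systems offer. The function name is illustrative.

```python
def spoof_success_probability(guesses: int, id_bits: int = 16, port_bits: int = 0) -> float:
    """Chance that one of `guesses` distinct forged replies matches the pending query."""
    search_space = 2 ** (id_bits + port_bits)
    return min(1.0, guesses / search_space)

# Fixed source port: only the 16-bit message ID is unknown.
fixed_port = spoof_success_probability(65536)

# Randomized source port: message ID and port must both be guessed (idealized 2**32 space).
random_port = spoof_success_probability(65536, port_bits=16)
```

With the same 65,536 forged packets, the first case always succeeds in this idealized model, while the second succeeds about once in 65,536 attempts.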

It should be noted that this attack did not work for DNSSEC-signed domains since their answers are digitally signed. However, even now in 2020, DNSSEC is far from universal.

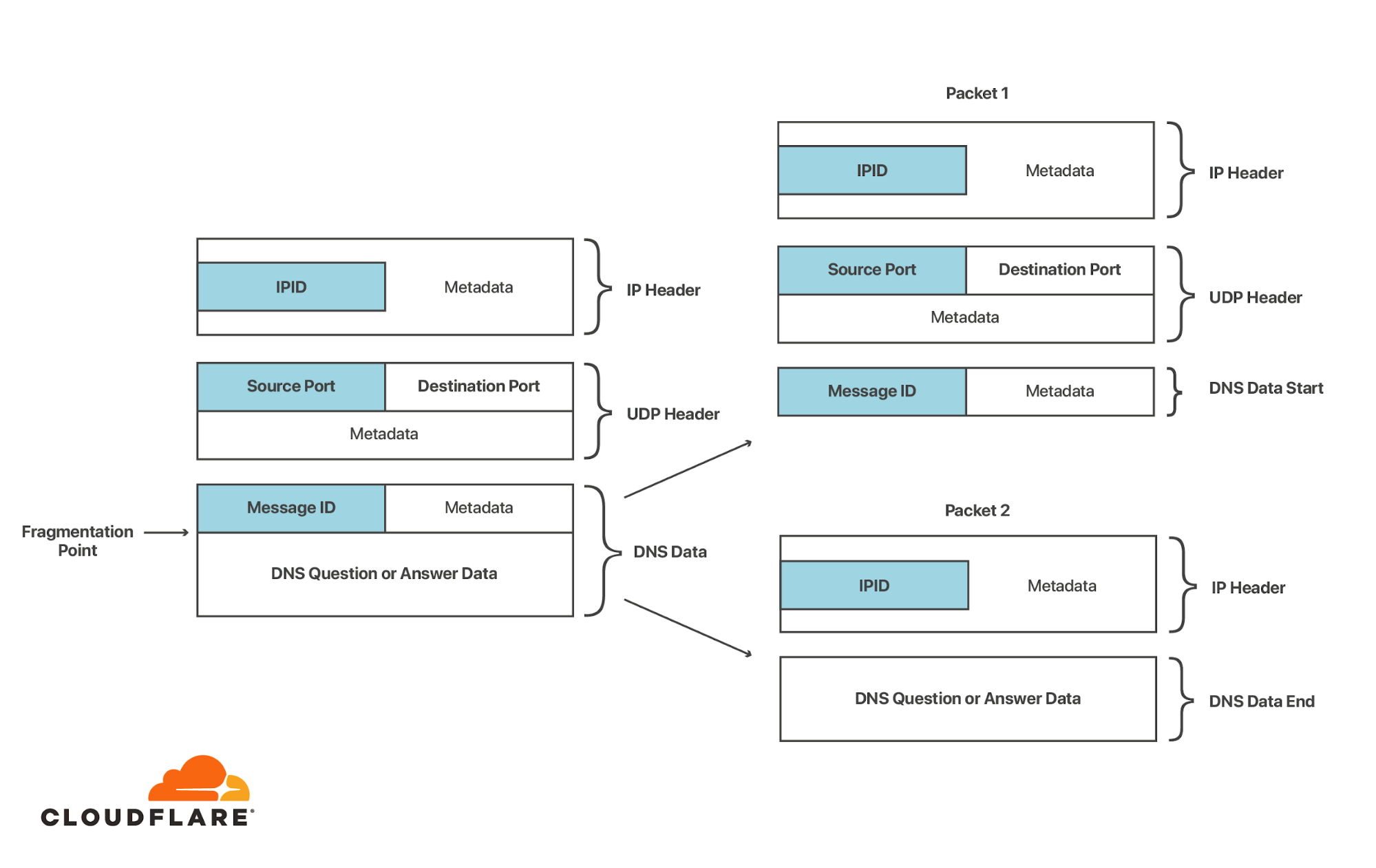

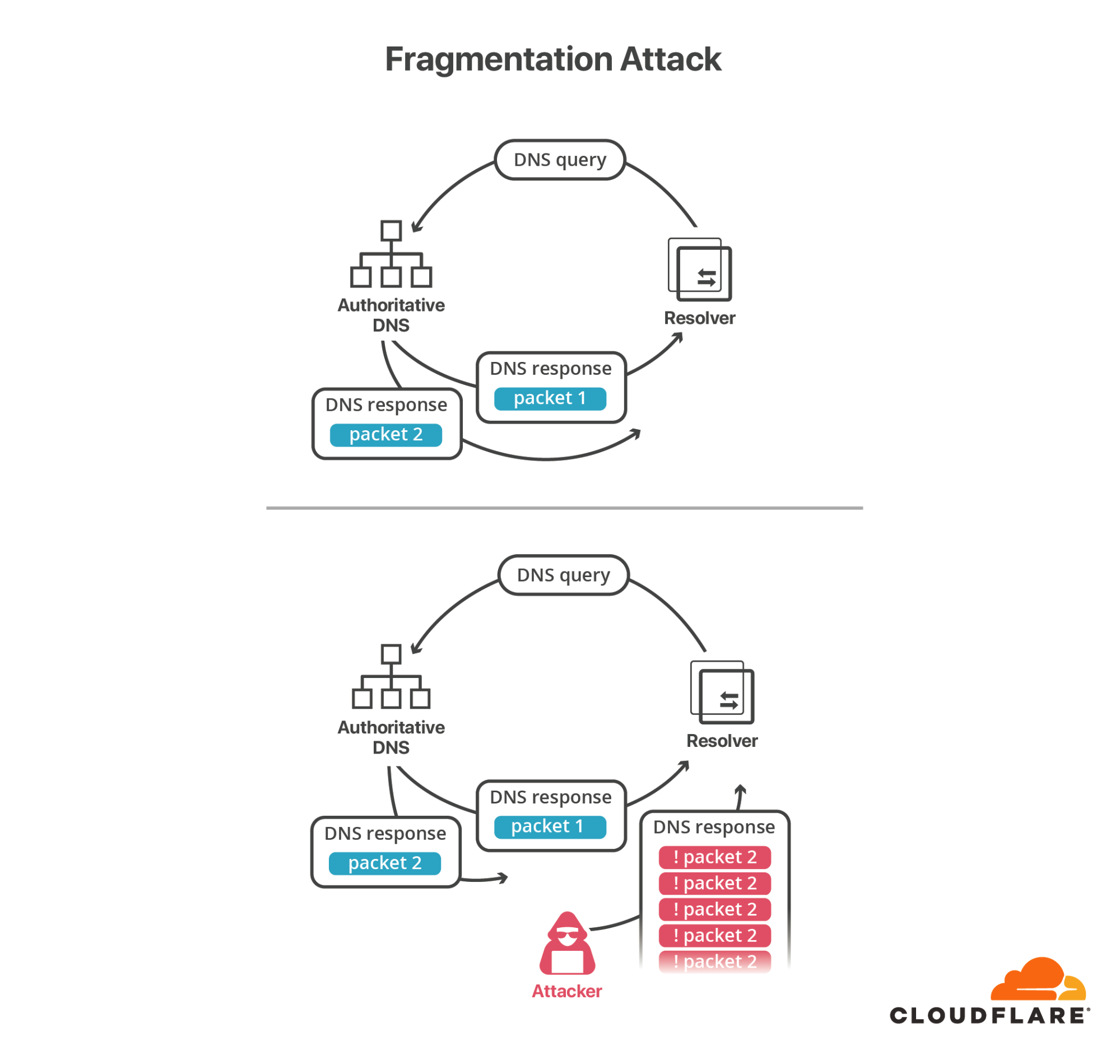

Defeating Source Port Randomization with Fragmentation

Another way to avoid having to guess the source port number and message ID is to split the DNS response in two. As is often the case in computer security, old attacks become new again when attackers discover new capabilities. In 2012, researchers Amir Herzberg and Haya Schulman from Bar Ilan University discovered that it was possible for a remote attacker to defeat the protections provided by source port randomization. This new attack leveraged another feature of UDP: fragmentation. For a primer on the topic of UDP fragmentation, check out our previous blog post on the subject by Marek Majkowski.

The key to this attack is the fact that all the randomness that needs to be guessed in a DNS poisoning attack is concentrated at the beginning of the DNS message (UDP header and DNS header). If the UDP response packet (sometimes called a datagram) is split into two fragments, the first containing the message ID and source port and the second containing part of the DNS response, then all an attacker needs to do is forge the second fragment and make sure that the fake second fragment arrives at the resolver before the true second fragment does. When a datagram is fragmented, each fragment is assigned a 16-bit ID (called the IP-ID), which is used to reassemble it at the other end of the connection. Since the second fragment only has the IP-ID as entropy (again, this is a familiar refrain in this area), this attack is feasible with a relatively small number of forged packets. The downside of this attack is the precondition that the response must be fragmented in the first place, and the fragment must be carefully altered to pass the original section counts and UDP checksum.
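To see why the guarded fields always land in the first fragment, it helps to compute where the fragment boundaries fall. The following sketch assumes IPv4 with a 20-byte header and no IP options; the function names are illustrative. Offsets are measured from the start of the UDP header.

```python
IPV4_HEADER = 20  # bytes, assuming no IP options
UDP_HEADER = 8    # source port, destination port, length, checksum
DNS_HEADER = 12   # message ID, flags, and four section counts

def fragment_spans(datagram_len: int, mtu: int) -> list:
    """Return (offset, length) spans of the UDP datagram carried by each IPv4 fragment."""
    # Every fragment except the last must carry a multiple of 8 payload bytes.
    per_fragment = (mtu - IPV4_HEADER) // 8 * 8
    if per_fragment <= 0:
        raise ValueError("MTU too small to carry any payload")
    spans = []
    offset = 0
    while offset < datagram_len:
        length = min(per_fragment, datagram_len - offset)
        spans.append((offset, length))
        offset += length
    return spans

def guarded_fields_in_first_fragment(datagram_len: int, mtu: int) -> bool:
    """True if the UDP ports and DNS header all fit inside the first fragment."""
    _, first_len = fragment_spans(datagram_len, mtu)[0]
    return first_len >= UDP_HEADER + DNS_HEADER
```

For example, `fragment_spans(2000, 1500)` yields `[(0, 1480), (1480, 520)]`: the ports and the message ID sit in the first 20 bytes, so the second fragment carries none of the values the attacker would otherwise have to guess.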

Also discussed in the original and follow-up papers is a method of forcing two remote servers to send packets between each other which are fragmented at an attacker-controlled point, making this attack much more feasible. The details are in the paper, but it boils down to the fact that the control mechanism for describing the maximum transmissible unit (MTU) between two servers -- which determines at which point packets are fragmented -- can be set via a forged UDP packet.

We explored this risk last year in a previous blog post, when we introduced our multi-path DCV service, which mitigates it in the context of certificate issuance by making DNS queries from multiple vantage points. Nevertheless, fragmentation-based attacks are proving less and less effective as DNS providers move to eliminate support for fragmented DNS packets (one of the major goals of DNS Flag Day 2020).

Defeating Source Port Randomization via ICMP error messages

Another way to defeat the source port randomization is to use some measurable property of the server that makes the source port easier to guess. If the attacker could ask the server which port number is being used for a pending query, that would make the construction of a spoofed packet much easier. No such thing exists, but it turns out there is something close enough - the attacker can discover which ports are surely closed (and thus avoid having to send traffic). One such mechanism is the ICMP “port unreachable” message.

Let’s say the target receives a UDP datagram destined for its IP address and some port. The datagram ends up either being accepted and silently discarded by the application, or rejected because the port is closed. If the port is closed, or more importantly, closed to the IP address that the UDP datagram was sent from, the target will send back an ICMP message notifying the attacker that the port is closed. This is handy to know since the attacker now doesn’t have to bother trying to guess the pending message ID on this port and can move on to other ports. A single scan of the server effectively reduces the search space of valid UDP responses from 2^32 (over a billion) to 2^17 (around a hundred thousand), at least in theory.

This trick doesn’t always work. Many resolvers use “connected” UDP sockets instead of “open” UDP sockets to exchange messages between the resolver and nameserver. Connected sockets are tied to the peer address and port on the OS layer, which makes it impossible for an attacker to guess which “connected” UDP sockets are established between the target and the victim, and since the attacker isn’t the victim, it can’t directly observe the outcome of the probe.
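The behavior of connected UDP sockets can be observed directly on the loopback interface. The sketch below is an illustration only, using three local sockets standing in for the resolver, the nameserver and an outside sender. The role names and the 0.5 second timeout are arbitrary choices, and it assumes a host where loopback UDP is available.

```python
import socket

def connected_socket_demo():
    """Return (outsider_delivered, payload_from_peer) for a connected UDP socket."""
    resolver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    nameserver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    outsider = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        for sock in (resolver, nameserver, outsider):
            sock.bind(("127.0.0.1", 0))  # let the OS pick a free port

        # connect() on a UDP socket sends nothing; it only pins the peer address and port.
        resolver.connect(nameserver.getsockname())
        resolver.settimeout(0.5)
        resolver_addr = resolver.getsockname()

        # A datagram from any other source is not delivered to the connected socket.
        outsider.sendto(b"from outsider", resolver_addr)
        try:
            resolver.recv(512)
            outsider_delivered = True
        except socket.timeout:
            outsider_delivered = False

        # A datagram from the pinned peer is delivered as usual.
        nameserver.sendto(b"from nameserver", resolver_addr)
        payload = resolver.recv(512)
        return outsider_delivered, payload
    finally:
        for sock in (resolver, nameserver, outsider):
            sock.close()
```

From the outsider's point of view the resolver's port looks closed, even though a query is pending on it. That is why a plain port scan from the attacker's own address reveals nothing useful.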

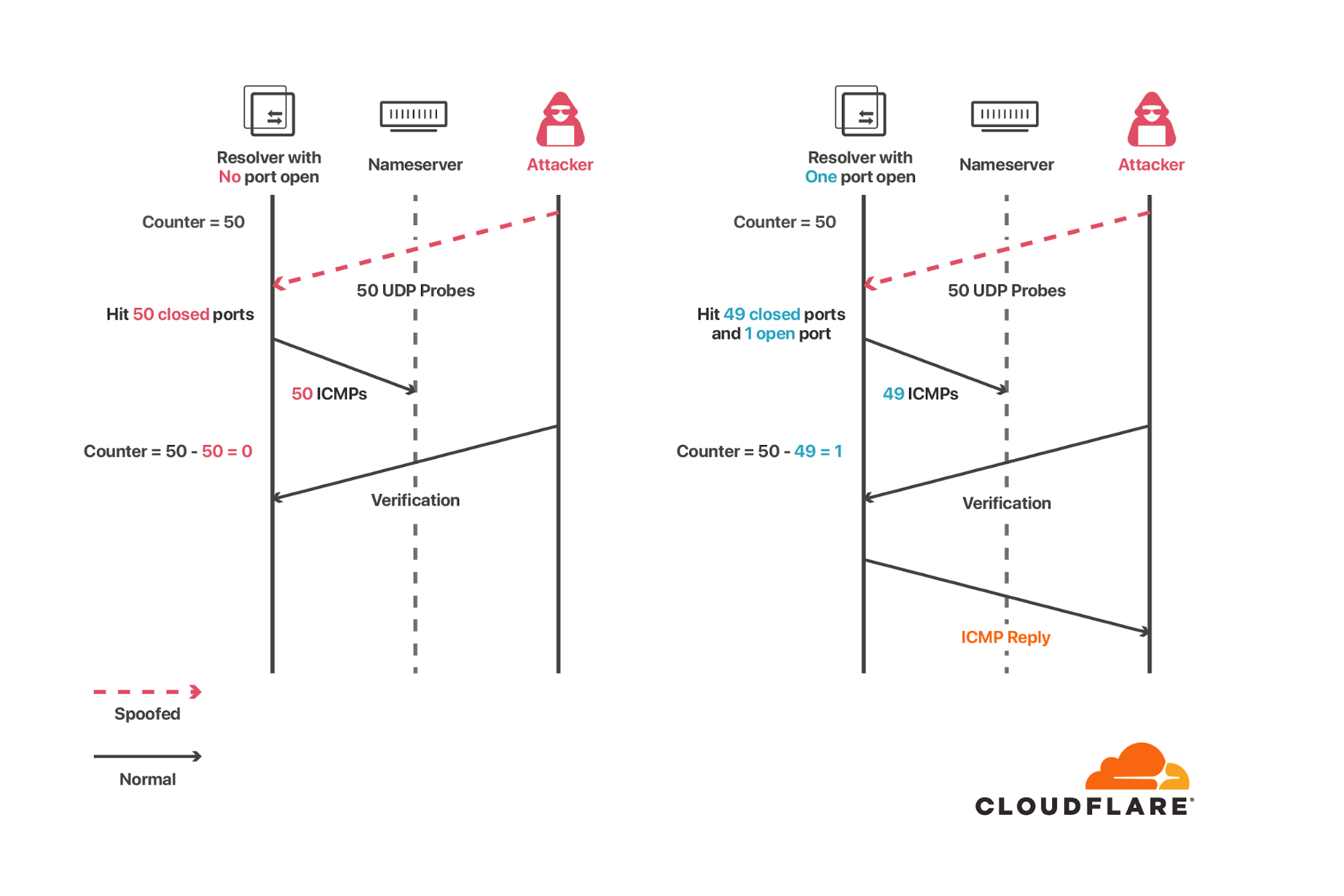

To overcome this, the researchers found a very clever trick: they leverage ICMP rate limits as a side channel to reveal whether a given port is open or not. ICMP rate limiting was introduced (somewhat ironically, given this attack) as a security feature to prevent a server from being used as an unwitting participant in a reflection attack. In broad terms, it is used to limit how many ICMP responses a server will send out in a given time period. Say an attacker wanted to scan 10,000 ports and sent a burst of 10,000 UDP packets to a server configured with an ICMP rate limit of 50 per second, then only the first 50 would get an ICMP “port unreachable” message in reply.

Rate limiting seems innocuous until you remember one of the core rules of data security: don’t let private information influence publicly measurable metrics. ICMP rate limiting violates this rule because the rate limiter’s behavior can be influenced by an attacker making guesses as to whether a “secret” port number is open or not.

An attacker wants to know whether the target has an open port, so it sends a spoofed UDP message from the authoritative server to that port. If the port is open, no ICMP reply is sent and the rate counter remains unchanged. If the port is inaccessible, then an ICMP reply is sent (back to the authoritative server, not to the attacker) and the rate is increased by one. Although the attacker doesn’t see the ICMP response, it has influenced the counter. The counter itself isn’t known outside the server, but whether it has hit the rate limit or not can be measured by any outside observer by sending a UDP packet and waiting for a reply. If an ICMP “port unreachable” reply comes back, the rate limit hasn’t been reached. No reply means the rate limit has been met. This leaks one bit of information about the counter to the outside observer, which in the end is enough to reveal the supposedly secret information (whether the spoofed request got through or not).

Concretely, the attack works as follows: the attacker sends a batch of probe messages (large enough to trigger the rate limiting) to the target, but with a forged source address of the victim. In the case where there are no open ports in the probed set, the target will send out the same number of ICMP “port unreachable” responses back to the victim and trigger the rate limit on outgoing ICMP messages. The attacker can now send an additional verification message from its own address and observe whether an ICMP response comes back or not. If it does, then there was at least one port open in the set and the attacker can divide the set and try again, or do a linear scan by inserting the suspected port number into a set of known closed ports. Using this approach, the attacker can narrow down to the open ports and try to guess the message ID until it is successful or gives up, similarly to the original Kaminsky attack.
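The probing loop described above can be modeled without touching a network. The following is a toy simulation, not the researchers' code: `SimulatedTarget` is a hypothetical stand-in for a host with a global ICMP limit of 50 replies per time window, and `new_window()` stands in for waiting until the limit resets. Real kernels use more elaborate limiters, and real scans have to cope with timing and noise.

```python
class SimulatedTarget:
    """Toy host: open ports stay silent, closed ports answer until the ICMP budget is spent."""

    def __init__(self, open_ports, icmp_limit=50):
        self.open_ports = set(open_ports)
        self.icmp_limit = icmp_limit
        self.icmp_sent = 0

    def new_window(self):
        self.icmp_sent = 0  # models the rate limiter resetting over time

    def receive_udp(self, port) -> bool:
        """Return True if an ICMP "port unreachable" reply is emitted."""
        if port in self.open_ports or self.icmp_sent >= self.icmp_limit:
            return False
        self.icmp_sent += 1
        return True

def batch_has_open_port(target, ports, closed_port) -> bool:
    """Spoofed probes fill the budget; one probe from our own address reads the result."""
    target.new_window()
    for port in ports:
        target.receive_udp(port)  # replies go to the spoofed source, unseen by the attacker
    return target.receive_udp(closed_port)  # a reply means the budget was not exhausted

def pad(ports, size, closed_port):
    """Top a batch up to exactly `size` probes using a port known to be closed."""
    return list(ports) + [closed_port] * (size - len(ports))

def find_open_port(target, candidates, closed_port, limit=50):
    """Scan in batches of `limit`, then bisect the first batch that contains an open port."""
    for start in range(0, len(candidates), limit):
        batch = candidates[start:start + limit]
        if not batch_has_open_port(target, pad(batch, limit, closed_port), closed_port):
            continue
        while len(batch) > 1:
            half = batch[:len(batch) // 2]
            if batch_has_open_port(target, pad(half, limit, closed_port), closed_port):
                batch = half
            else:
                batch = batch[len(batch) // 2:]
        return batch[0]
    return None
```

Calling `find_open_port(SimulatedTarget({40123}), list(range(40000, 40200)), closed_port=1)` returns `40123`. Each call to `batch_has_open_port` leaks a single bit, and padding with a known closed port keeps every batch at exactly the size needed to exhaust the budget when nothing in it is open.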

In practice there are some hurdles to successfully mounting this attack.

- First, the target IP, or a set of target IPs must be discovered. This might be trivial in some cases - a single forwarder, or a fixed set of IPs that can be discovered by probing and observing attacker controlled zones, but more difficult if the target IPs are partitioned across zones as the attacker can’t see the resolver egress IP unless she can monitor the traffic for the victim domain.

- The attack also requires a large enough ICMP outgoing rate limit in order to be able to scan with a reasonable speed. The scan speed is critical, as it must be completed while the query to the victim nameserver is still pending. As the scan speed is effectively fixed, the paper instead describes a method to potentially extend the window of opportunity by triggering the victim's response rate limiting (RRL), a technique to protect against floods of forged DNS queries. This may work if the victim implements RRL and the target resolver doesn’t implement a retry over TCP (A Quantitative Study of the Deployment of DNS Rate Limiting shows about 16% of nameservers implement some sort of RRL).

- Generally, busy resolvers will have ephemeral ports opening and closing, which introduces false positive open ports for the attacker, and ports open for different pending queries than the one being attacked.

We’ve implemented an additional mitigation in 1.1.1.1 to prevent message ID guessing: if the resolver detects an ID enumeration attempt, it will stop accepting any more guesses and switch over to TCP. This reduces the number of attempts available to the attacker even if it guesses the IP address and port correctly, similar to how the number of password login attempts is limited.
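The idea of capping guesses can be illustrated with a small state machine. This is a hypothetical sketch of the general technique, not the 1.1.1.1 implementation: the class name, the threshold of three mismatches and the returned status strings are all assumptions made for illustration.

```python
class PendingQuery:
    """Tracks one outstanding UDP query and gives up on UDP after too many wrong IDs."""

    def __init__(self, txid: int, max_mismatches: int = 3):
        self.txid = txid
        self.max_mismatches = max_mismatches  # assumed threshold, chosen for the example
        self.mismatches = 0
        self.use_tcp = False

    def on_udp_response(self, response_id: int) -> str:
        if self.use_tcp:
            return "ignored"  # UDP answers no longer count, even with the right ID
        if response_id == self.txid:
            return "accepted"
        self.mismatches += 1
        if self.mismatches >= self.max_mismatches:
            self.use_tcp = True  # the caller would now retry the query over TCP
            return "fallback_to_tcp"
        return "rejected"
```

With a threshold of three, an attacker who has found the right address and port gets at most three guesses out of 65,536 per query before forged UDP replies stop being considered at all.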

Outlook

Ultimately these are just mitigations, and the attacker might be willing to play the long game. As long as the transport layer is insecure and DNSSEC is not widely deployed, there will be different methods of chipping away at these mitigations.

It should be noted that trying to hide source IPs or open port numbers is a form of security through obscurity. Without strong cryptographic authentication, it will always be possible to use spoofing to poison DNS resolvers. The silver lining here is that DNSSEC exists, and is designed to protect against this type of attack, and DNS servers are moving to explore cryptographically strong transports such as TLS for communicating between resolvers and authoritative servers.

At Cloudflare, we’ve been helping to reduce the friction of DNSSEC deployment, while also helping to improve transport security in the long run. There is also an effort to increase entropy in DNS messages with RFC 7873 - Domain Name System (DNS) Cookies, and to make DNS over TCP support mandatory with RFC 7766 - DNS Transport over TCP - Implementation Requirements, with even more documentation around ways to mitigate this type of issue available in different places. All of these efforts are complementary, which is a good thing. The DNS ecosystem consists of many different parties and software with different requirements and opinions. As long as the operators support at least one of the preventive measures, these types of attacks will become more and more difficult.

If you are an operator of an authoritative DNS server, you should consider taking the following steps to protect yourself from this attack:

We’d like to thank the researchers for responsibly disclosing this attack and look forward to working with them in the future on efforts to strengthen the DNS.
