The 8008 was Intel's first 8-bit microprocessor, introduced in 1972. While primitive by today's standards, the 8008 is historically important because it essentially started the microprocessor revolution and is the ancestor of the modern x86 processor family. I've been studying the 8008's silicon die under the microscope and reverse-engineering its circuitry.

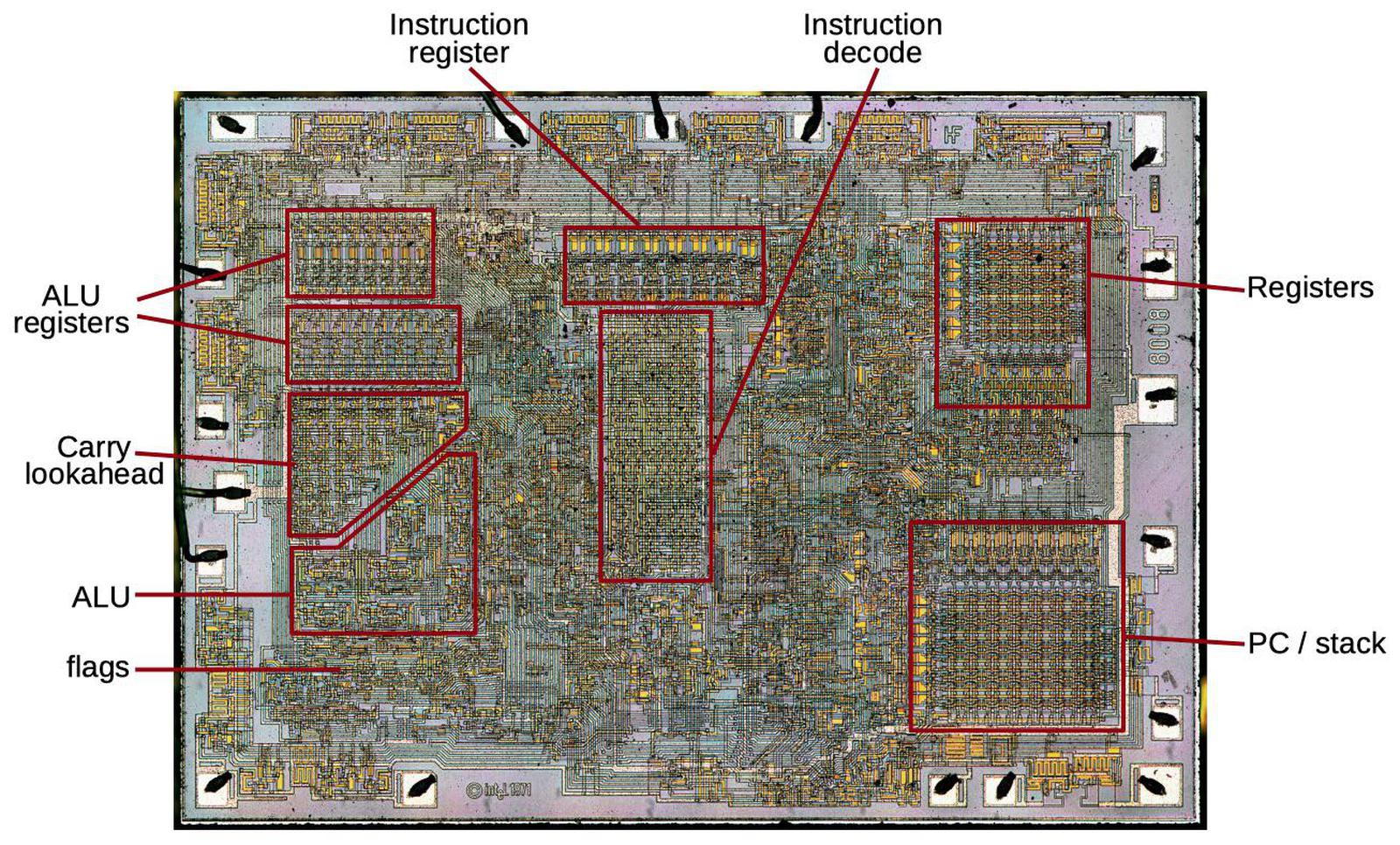

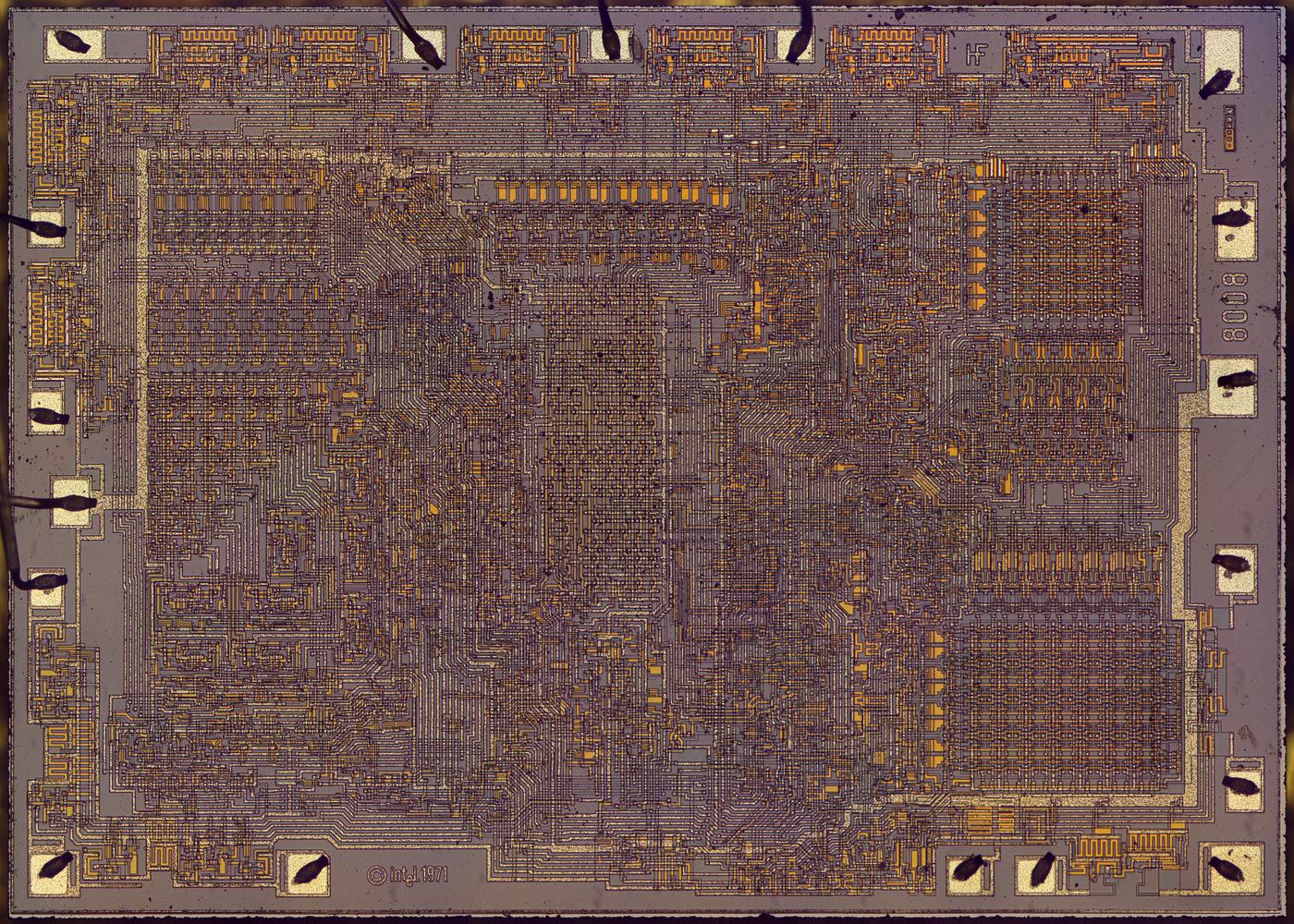

The die photo below shows the main functional blocks1 including the registers, instruction decoder, and on-chip stack storage. The 8-bit arithmetic logic unit (ALU) is on the left. Above the ALU is the carry-lookahead generator, which improves performance by computing the carries for addition before the addition takes place. It's a bit surprising to see carry lookahead implemented in such an early microprocessor. This blog post explains how the carry circuit is implemented.

The Intel 8008 die with key functional blocks labeled.

Most of what you see in the die photo is the greenish-white wiring of the metal layer on top. Underneath the metal is polysilicon wiring, providing more connections as well as implementing transistors. The chip contains about 3500 tiny transistors, which appear as brighter yellow. The underlying silicon substrate is mostly obscured; it is purplish-gray. Around the edges of the die are 18 rectangular pads; these are connected by tiny bond wires to the external pins of the integrated circuit package (below).

An 8008 integrated circuit in an 18-pin DIP (dual inline package). The package is very scratched, but I didn't see the point in paying for mint condition for a chip I was immediately going to decap.

The 8008 was sold as a small 18-pin DIP (dual inline package) integrated circuit. 18 pins is an inconveniently small number of pins for a microprocessor, but Intel was committed to small packages at the time.2 In comparison, other early microprocessors typically used 40 pins, making it much easier to connect the data bus, address bus, control signals, and power to the processor.

Addition

The heart of a processor is the arithmetic-logic unit (ALU), the functional block that performs arithmetic operations (such as addition or subtraction) and logical operations (such as AND, OR, and XOR). Addition was the most challenging operation to implement efficiently because of the need for carries.3

Consider how you add two decimal numbers such as 8888 and 1114, with long addition. Starting at the right, you add each pair of digits (8 and 4), write down the sum (2), and pass any carry (1) along to the left. In the next column, you add the pair of digits (8 and 1) along with the carry (1), writing down the sum (0) and passing the carry (1) to the next column. You repeat the process right-to-left, ending up with the result 10002. Note that you have to add each position before you can compute the next position.

Binary numbers can be added in a similar way with a circuit called a ripple-carry adder that was used in many early microprocessors. Each bit is computed by a full adder, which takes two input bits and a carry and produces the sum bit and a carry-out. For instance, adding binary 1 + 1 with no carry-in yields 10, for a sum bit of 0 and a carry-out of 1. Each carry-out is added to the bit position to the left, just like decimal long addition.
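The ripple-carry scheme described above can be sketched in a few lines of Python. This is only a software illustration, not a model of any particular chip; the function names `full_adder` and `ripple_carry_add` and the `width` parameter are made up for this example.

```python
def full_adder(x, y, carry_in):
    """Add two bits plus a carry-in; return (sum_bit, carry_out)."""
    total = x + y + carry_in
    return total & 1, total >> 1


def ripple_carry_add(a, b, carry_in=0, width=8):
    """Add two width-bit integers one bit position at a time, right to left."""
    result = 0
    carry = carry_in
    for i in range(width):
        x = (a >> i) & 1
        y = (b >> i) & 1
        # Each position must wait for the carry from the position to its right.
        sum_bit, carry = full_adder(x, y, carry)
        result |= sum_bit << i
    return result, carry


print(full_adder(1, 1, 0))                # binary 1 + 1 with no carry-in
print(ripple_carry_add(0b11111111, 1))    # the carry passes through every bit
```

The loop makes the serial dependency explicit: the carry variable is updated once per bit position, so nothing can be computed for a position until everything to its right is finished.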

The problem with ripple carry is that if you add, say, 11111111 + 1, you need to wait as the carry "ripples" through the sum from right to left. This makes addition a slow serial operation instead of a parallel operation. Even though the 8008 only performs addition on 8-bit numbers, this delay would slow the processor too much. The solution was a carry lookahead circuit that rapidly computes the carries for all eight bit positions. Then the sum can be calculated in parallel without waiting for carries to ripple through the bits. According to 8008 designer Hal Feeney, "We built the carry look-ahead logic because we needed the speed as far as the processor is concerned. So carry look ahead seemed like something we could integrate and have fairly low real estate overhead and, as you see, the whole carry look ahead is just a very small portion of the chip."

Implementing carry lookahead

The idea of carry lookahead is that if you can compute all the carry values in advance, then you can rapidly add all the bit positions in parallel. But how can you compute the carries without performing the addition? The solution in the 8008 was to build a separate circuit for each bit position to compute the carry based on the inputs.

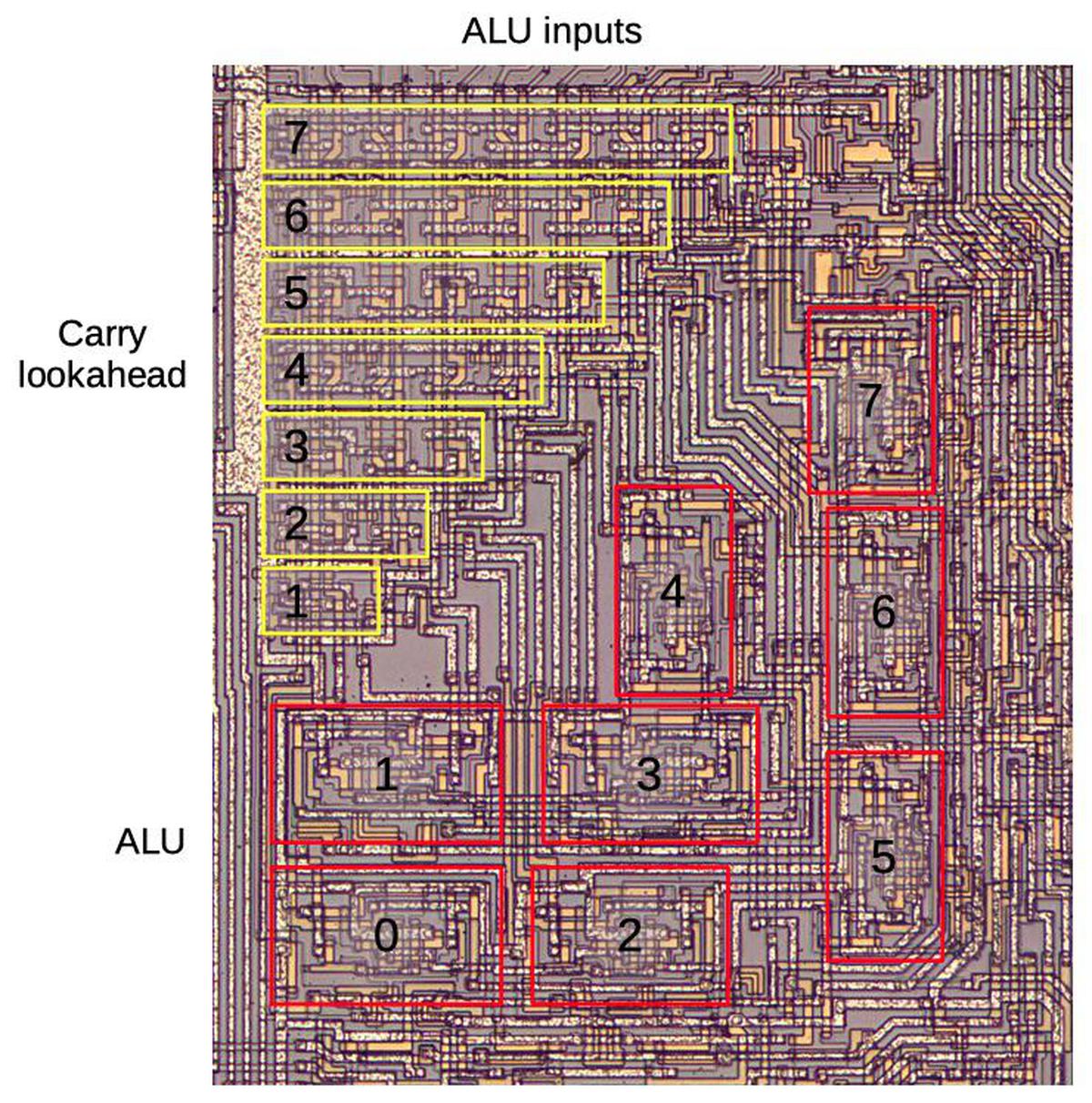

The diagram below zooms in on the carry lookahead circuitry and the arithmetic-logic unit (ALU). The two 8-bit arguments and a carry-in arrive at the top. These values flow vertically through the carry lookahead circuit, generating carry values for each bit along the way. Each ALU block receives two input bits and a carry bit and produces one output bit. The carry lookahead has a triangular layout because successive carry bits require more circuitry, as will be explained. The 8-bit ALU has an unusual layout in order to make the most of the triangular space. Almost all microprocessors arrange the ALU in a rectangular block; an 8-bit ALU would have 8 similar slices. But in the 8008, the slices of the ALU are scattered irregularly; some slices are even rotated sideways. I've written about the 8008's ALU before if you want more details.

Closeup of the 8008 die showing the carry lookahead circuitry and the ALU.

To understand how carry lookahead works, consider three addition cases. First, adding 0+0 cannot generate a carry, even if there is a carry in; the sum is 0 (if there is no carry in) or 1 (with carry in). The second case is 0+1 or 1+0. In this case, there will be a carry out only if there is a carry in. (With no carry-in the result is 1, while with carry-in the result is 10.) This is the "propagate" case, since the carry-in is propagated to carry-out. The final case is 1+1. In this case, there will be a carry-out, regardless of the carry-in. This is the "generate" case, since a new carry is generated.
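The three cases can be checked with a small Python sketch. The function name `classify` and the label "no carry" for the 0+0 case are my own choices for illustration; the text above only names the "propagate" and "generate" cases.

```python
def classify(x, y):
    """Name the carry behavior of one bit position from its two input bits."""
    if x == 1 and y == 1:
        return "generate"    # carry-out is 1 regardless of carry-in
    if x == 1 or y == 1:
        return "propagate"   # carry-out equals carry-in
    return "no carry"        # 0+0: carry-out is 0 regardless of carry-in


# For each input pair, list the carry-out with carry-in = 0 and carry-in = 1.
for x in (0, 1):
    for y in (0, 1):
        carry_outs = [(x + y + c) >> 1 for c in (0, 1)]
        print(x, y, classify(x, y), carry_outs)
```

The printed carry-outs are computed by ordinary addition, so the table serves as a check that the three labels cover every combination of inputs.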

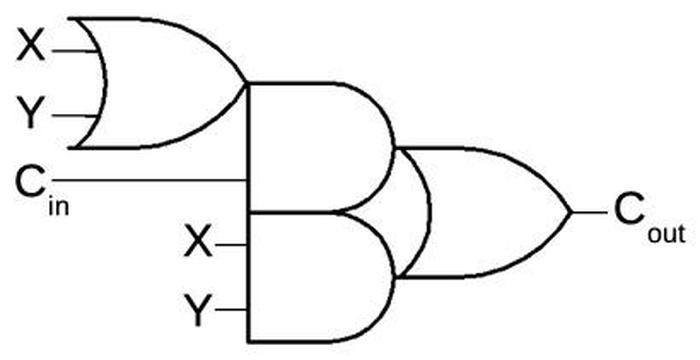

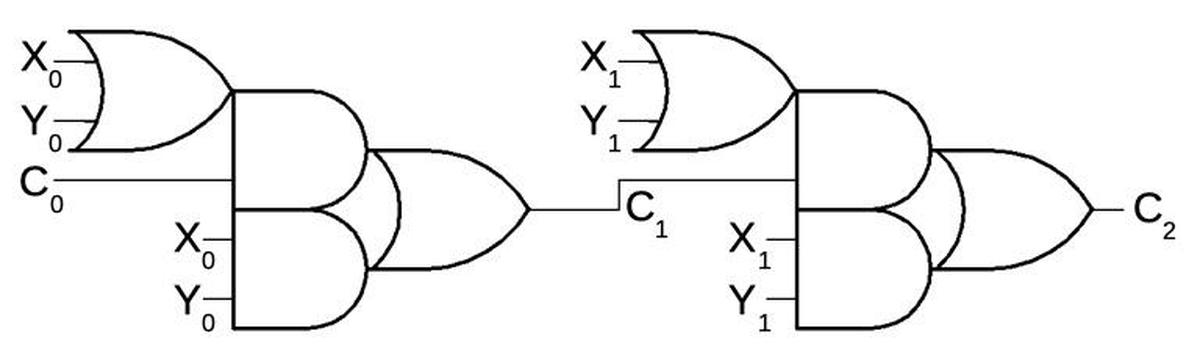

The circuit below computes the carry-out when adding two bits (X and Y) along with a carry-in. This circuit is built from an OR gate on the left, two AND gates in the middle, and an OR gate on the right. (Although this circuit looks complex, it can be implemented efficiently in hardware.) To see how it operates, consider the three cases. If X and Y are both 0, the carry output will be 0. Otherwise, the first OR gate will output 1. If carry-in is 1, the upper AND gate will output 1 and the carry-out will be 1. (This is the propagate case.) Finally, if both X and Y are 1, the lower AND gate will output 1, and the carry-out will be 1. (This is the generate case.)

This circuit computes the carry-out given the carry-in and two input bits X and Y.
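As a rough software analogue of this gate arrangement, the sketch below uses one line per gate and compares the result against ordinary addition. The function name `carry_out` and the intermediate variable names are hypothetical, chosen to mirror the description rather than anything on the chip.

```python
def carry_out(x, y, carry_in):
    """Carry-out of one bit position, built from the four gates described."""
    either = x | y                   # OR gate on the left
    propagated = either & carry_in   # upper AND gate: propagate case
    generated = x & y                # lower AND gate: generate case
    return propagated | generated    # OR gate on the right


# Compare with the carry produced by actually adding the three bits.
for x in (0, 1):
    for y in (0, 1):
        for c in (0, 1):
            assert carry_out(x, y, c) == (x + y + c) >> 1
```

Note that the first gate is an OR rather than an XOR. When both inputs are 1, the generate path already forces the carry-out to 1, so the two choices give the same result.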

To compute the carry into a higher-order position, multiple instances of this circuit can be chained together. For instance, the circuit below computes the carry into bit position 2 (C2). The gate block on the left computes C1, the carry into bit position 1, from the carry-in (C0) and low-order bits X0 and Y0, as explained above. The gates on the right apply the same process to the next bits, generating the carry into position 2. For other bit positions, the same principle is used but with additional blocks of gates. For instance, the carry into position 7 is computed by a chain of seven blocks of gates. Since the circuit for each successive bit is one unit longer, the carry structure has the triangular structure seen on the die.

Computing the carry into position 2 requires two stages of carry prediction.
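The chaining idea can be sketched in Python as follows. The names `carry_block`, `carry_into`, and `lookahead_add` are invented for this example, and treating the final carry-out as one more chain of blocks is my assumption, not something taken from the die. Software evaluates each chain step by step, whereas the hardware evaluates all of its chains at the same time, so this shows only the logic, not the speed.

```python
def carry_block(x, y, carry_in):
    """One block of gates: ((X OR Y) AND carry-in) OR (X AND Y)."""
    return ((x | y) & carry_in) | (x & y)


def carry_into(position, a, b, carry_in=0):
    """Carry into a bit position, using a chain of `position` blocks."""
    carry = carry_in
    for i in range(position):
        carry = carry_block((a >> i) & 1, (b >> i) & 1, carry)
    return carry


def lookahead_add(a, b, carry_in=0, width=8):
    """Compute every carry from the inputs alone, then form the sum bits."""
    # Each carry depends only on the inputs, not on any sum bit.
    carries = [carry_into(i, a, b, carry_in) for i in range(width + 1)]
    result = 0
    for i in range(width):
        # With its carry known, each sum bit is independent of the others.
        bit = ((a >> i) & 1) ^ ((b >> i) & 1) ^ carries[i]
        result |= bit << i
    return result, carries[width]


print(carry_into(2, 0b11, 0b01))        # C2 for X = 11, Y = 01
print(lookahead_add(0b11111111, 1))
```

The chain for position `i` contains `i` blocks, so listing the chains for positions 1 through 7 side by side gives the triangular shape described above.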

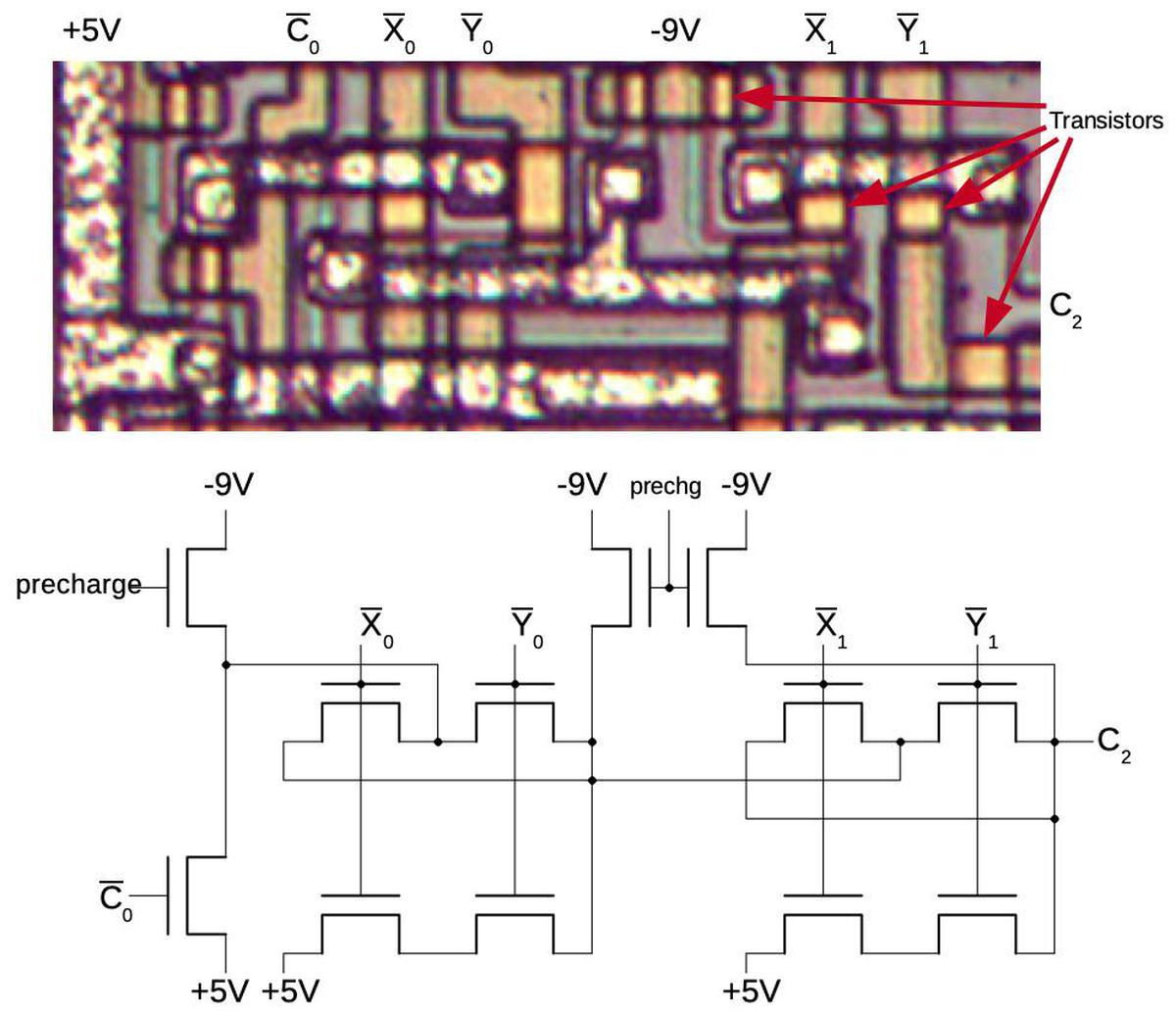

The diagram below shows how the carry circuit for bit 2 is implemented on the die; the circuit for other bits is similar, but with more repeated blocks. In the photograph, the metal wiring on top of the die is silverish. Underneath this, the polysilicon wiring is yellow. At the bottom, the silicon is grayish. The transistors are brighter yellow; several are indicated. The schematic underneath shows the wiring of the transistors; the layout of the schematic is close to the physical layout.

Implementation of the carry lookahead circuit for bit 2.

I'll give a brief outline of how the circuit works. The 8008 is implemented with a type of transistor called a PMOS transistor. You can think of a PMOS transistor as turning on if the input is 0, and off if the input is 1.4 Instead of standard logic gates, this circuit uses a technique called dynamic logic, which takes advantage of capacitance. In the first step, the precharge signal connects -9 volts to the circuitry, precharging it. In the second step, the input signals (top) are applied, turning on various transistors. If there is a path through the transistors from the +5 supply to the output, the output will be pulled high. Otherwise, the output remains at the precharge level; the capacitance of the wires holds the -9 volts. I won't trace out the entire circuit, but the upper X/Y transistor pairs implement an OR gate since if either one is on, the carry can get through. The lower X/Y transistors implement an AND gate; if both are on, the +5 signal will get through, generating a 1.

You might wonder why this carry lookahead circuit is any faster than a plain ripple-carry adder, since the carry signal has to go through up to seven large gates to generate the last carry bit. The trick is that the entire circuit is electrically a single large gate due to the dynamic design. All the transistors are activated in parallel, and then the 5-volt signal can pass through them all rapidly.5 Although there is still a delay as this signal travels through the circuit, the circuit is faster than the standard ripple carry adder which activates transistors in sequence.

A brief history of carry circuits

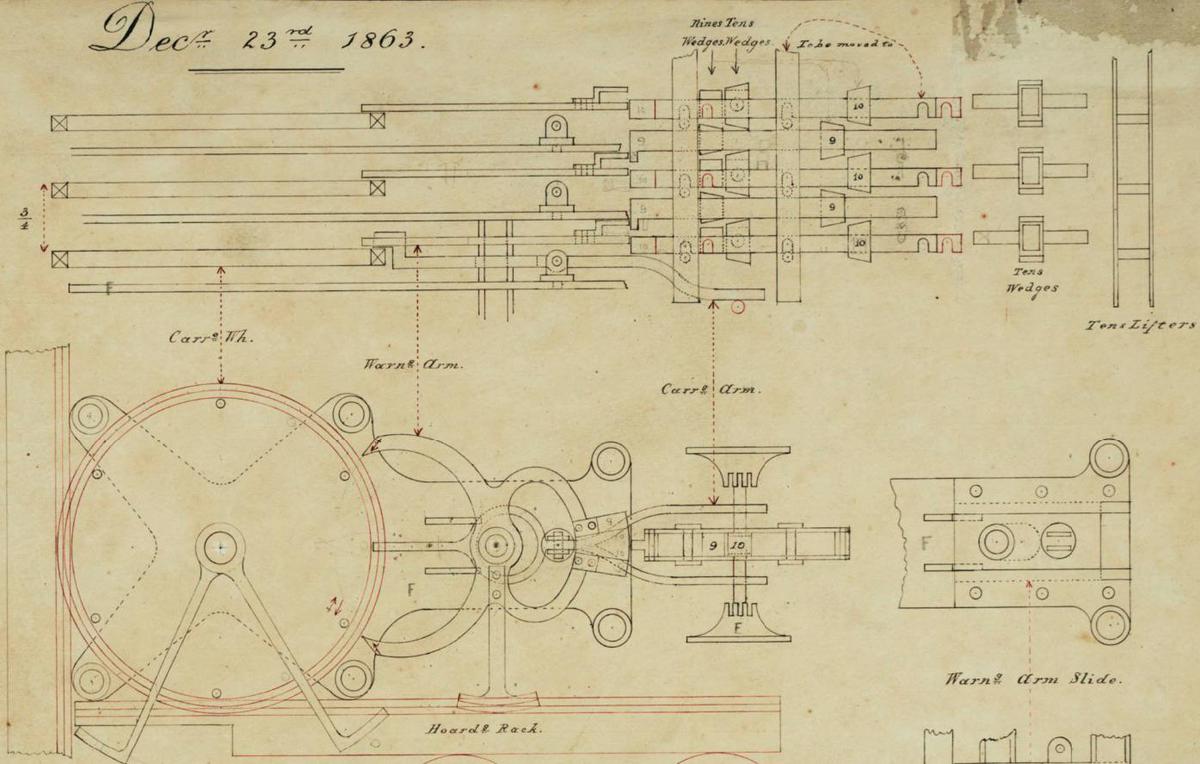

The efficient handling of carries was an issue back to the earliest days of mechanical calculation. The mathematician Blaise Pascal created a mechanical calculator in 1645. This calculator used a mechanical ripple carry mechanism powered by gravity that rapidly propagated the carry from one digit to the next. Almost two centuries later, Charles Babbage designed the famous difference engine (1819-1842). It used a slow ripple carry; after the addition cycle, spiral levers on a rotating shaft activated each digit's carry in sequence. Babbage spent years designing a better carry mechanism for his ambitious Analytical Engine (1837), developing an "anticipating carriage" to perform all carries in parallel. With the anticipating carriage, each digit wheel had a sliding shaft that moved into position when a digit was 9. When a digit triggered a carry by moving from 9 to 0, it raised the stack of shafts, incrementing all the appropriate digits in parallel.

The first digital computers used ripple carry. The designer of the Bell Labs relay computer (1939) states that "the carry circuit was complicated" due to the use of binary-coded decimal (BCD). The groundbreaking ENIAC (1946) used decimal counters with ripple carry. Early binary electronic computers such as EDSAC (1949) and SEAC (1950) were serial, operating on one bit at a time, so they computed carries one bit at a time too. Early computers with parallel addition such as the 1950 SWAC (the fastest computer at the time) and the commercial IBM 701 (1952) used ripple carry.

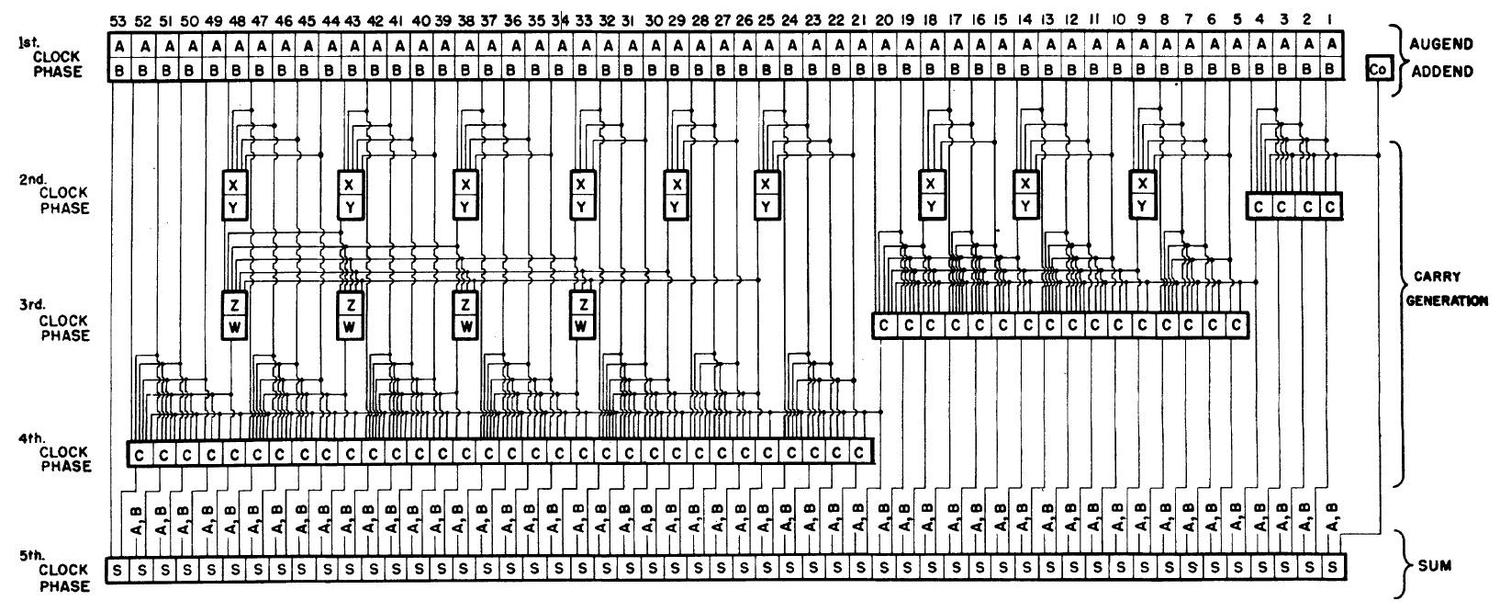

As computers became faster in the 1950s, ripple carry limited performance so alternatives were developed. In 1956, the National Bureau of Standards patented a 53-bit adder using vacuum tubes. This design introduced the important carry-lookahead concept, as well as the idea of using a hierarchy of carry lookahead (two levels in this case). The diagram below illustrates the complexity of this adder.

The development of supercomputers led to new carry techniques. The transistorized Atlas was built by the University of Manchester, Ferranti and Plessey in 1962. It used the influential Manchester carry chain technique, described in 1959. The Atlas vied with the IBM Stretch (1961) for the title of the world's fastest computer. The Stretch introduced high-speed techniques including the carry-select adder and the patented carry save adder for multiplication.

As with mainframes, microprocessors started with simple adders but required improved carry techniques as performance demands increased. Most early microprocessors used ripple carry, such as the 6502, Z-80, and ARM1. Carry-skip was often used for the program counter (as in the 6502 and Z-80); ripple carry was fast enough for 8-bit words but too slow for the 16-bit program counter. The ALU of the Intel 8086 (1978) used a Manchester carry chain as well as carry skip. The large transistor counts of VLSI chips permitted more complex adders, fed by research in parallel-prefix adders. The DEC Alpha 21064 (1992) combined multiple techniques: Manchester carry chain, carry lookahead, conditional sum, and carry select. The Hewlett-Packard PA-8000 (1995) contained over 20 adders for various purposes, including a Ling adder, a type developed at IBM in 1966. The Pentium II (1997) used a 72-bit Kogge-Stone adder while the Pentium 4 (2000) used a Han-Carlson adder.6

This history shows that carry propagation was an important performance problem in the 1950s and remains an issue today with continuing research and improvements. Many different solutions have been developed, first in mainframes and later in microprocessors, growing more complex as technology advances. These approaches have tradeoffs of die area, cost, and speed, so different processors choose different implementations.

Die photo of the Intel 8008 processor.

If you're interested in the 8008, I have other articles about it describing its architecture, its ALU, its on-chip stack, bootstrap loads, and its unusual history. I announce my latest blog posts on Twitter, so follow me at @kenshirriff. I also have an RSS feed.

Notes and references
