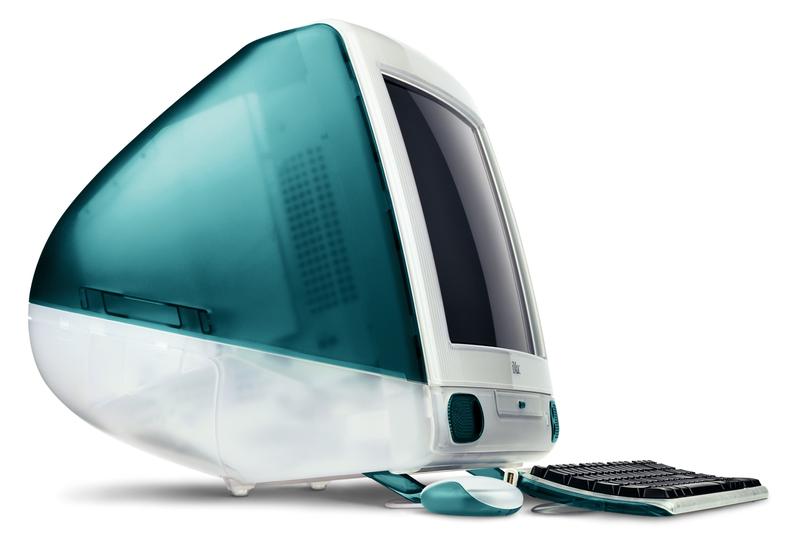

When most people hear “Apple” in a sentence, their next thought is likely color. That is because color has always played an exciting role in Apple’s history: from the original Mac to the iMac, from the G3 to the Cube, from Bondi Blue to Snow White. The introduction of color played a vital part in these products' success, as it helped create strong visual brand recognition.

The prominent use of color no doubt stems from Steve Jobs obsessing over the smallest details. For instance, there was a time when Steve spent 30 minutes picking the perfect shade of gray for the restroom signs, and another time when he insisted on making the circuit board inside the Mac look great. An engineer told him:

“The only thing that matters is how well it works. Nobody is going to see the PC board.”

Jobs’s response was:

“I want it to be as beautiful as possible, even if it’s inside the box. A great carpenter isn’t going to use lousy wood for the back of a cabinet, even though nobody’s going to see it.”

iMac G3 in Bondi Blue released in 1998 [Wikipedia]

For as long as we’ve had TVs, color has been an important metric for judging a display’s quality. We’ve watched technologies such as CRT, plasma, and LCD compete to display the most lifelike color. The newest technology to enter this fray is High Dynamic Range (HDR). The aim remains the same: to recreate an image closer to what the human eye sees. Traditionally, most video has been delivered in 8-bit under the Rec. 709 standard, which allows about 16.7 million colors (“Millions of colors”). HDR improves on this by stepping up to 10 or 12 bits per channel under the Rec. 2020 (BT.2020) standard. At 10 bits per channel, that is 64 times as many color combinations, about 1.07 billion, with smoother gradations between shades: “Billions of colors”.
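The “millions” and “billions” labels follow directly from the bit depth, and the arithmetic is easy to check. The short sketch below assumes three color channels (R, G, B) with the same number of bits each; the function name `color_count` is made up for illustration.

```python
def color_count(bits_per_channel, channels=3):
    """Number of distinct colors for a given per-channel bit depth."""
    return (2 ** bits_per_channel) ** channels


eight_bit = color_count(8)     # 24 bits per pixel
ten_bit = color_count(10)      # 30 bits per pixel
twelve_bit = color_count(12)   # 36 bits per pixel

print(f"8-bit:  {eight_bit:,} colors")    # 16,777,216
print(f"10-bit: {ten_bit:,} colors")      # 1,073,741,824
print(f"12-bit: {twelve_bit:,} colors")   # 68,719,476,736
print(f"10-bit vs 8-bit:  {ten_bit // eight_bit}x")     # 64x
print(f"12-bit vs 8-bit:  {twelve_bit // eight_bit}x")  # 4096x
```

Each extra bit per channel doubles the levels in that channel, so two extra bits across three channels multiplies the total by 4 × 4 × 4 = 64.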

Apple has generally been at the forefront of adopting the latest display technology. Some notable examples: introducing high-resolution Retina displays when 1366x768 was a very common resolution for Windows laptops; shipping 2015 Retina iMacs with 10-bit displays, i.e. “Billions of colors”; launching the Apple TV 4K in 2017 as one of the first consumer devices to support Dolby Vision HDR; automatically upgrading HD titles to Dolby Vision for iTunes users, a move that is still unrivaled; and releasing the iPhone 12, the first smartphone able to record video in the Dolby Vision HDR format. Apple also sells the Pro Display XDR, which costs upward of $5,000 and ticks all the right boxes for any professional doing color-accurate work.

Given Apple’s color pedigree, it was surprising to see that the M1 device specification pages make no mention of whether M1 devices can output “Billions of colors”. In contrast, the specifications for the Intel-based devices clearly list this support.

Specs for the Intel MacBook Pro show that it can output “Billions of Colors” [Source: Apple Technical Specifications]

No mention of “Billions of Colors” for M1 MacBook [Source: Apple Technical Specifications]

EDIT: 1/25/21 - Adding specs for the Mac Mini, as that’s the device that was tested. The MacBook specs were compared to keep the comparison within the same device class.

No mention of “Billions of Colors” for M1 Mac Mini [Source: Apple Technical Specifications]

Even the trusty method of checking "About This Mac" -> "System Report" -> "Graphics/Displays" doesn’t show anything. Usually, this would list a value of 30-bit (i.e. 10 bits per RGB channel). On M1 devices connected to an HDR-capable display, the report makes no mention of whether the M1 Mini is outputting a 10-bit video signal.

So I set about testing 10-bit output support myself.

Test Setup#

Test 1: Connect to HDR TV#

For this test, I used my trusty 2017 Vizio M65-E0 TV, which has a 10-bit panel. I connected the Mac Mini to my Denon AVR-X1300 receiver. The “Displays” settings in macOS immediately gave me the option of enabling HDR.

Apple TV HDR test#

Next, I fired up the Apple TV app. Immediately on the “Library” tab, I saw another menu item for HDR titles that’s not visible when connected to a non-HDR display.

Then I used the Apple TV app to play 2017’s Wonder Woman and confirmed through the TV info that it received an HDR10 signal.

YouTube HDR test#

Next, I played an HDR video from YouTube in Safari. I confirmed that I could select the HDR formats for this video and, using the “Stats for nerds” info, that the video was playing in HDR mode: the “Color” values were smpte2084 (PQ) / bt2020.

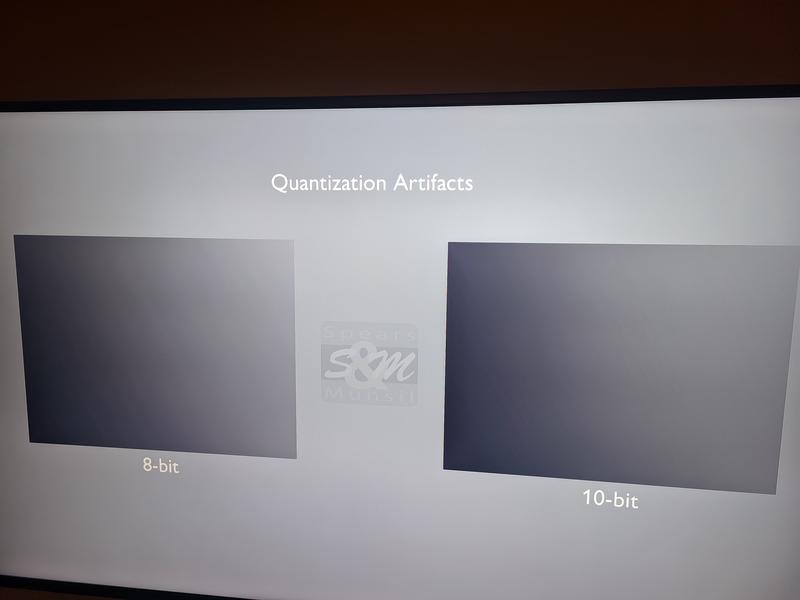
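For anyone repeating this check, the “Color” line can be read mechanically. The sketch below is only an illustration: it assumes the field has the form "transfer (label) / primaries", as in the value quoted above, and that `smpte2084` (PQ) and `arib-std-b67` (HLG) are the transfer functions that indicate HDR. The function name `describe_color` is hypothetical and not part of any YouTube API.

```python
# Transfer functions that indicate an HDR signal (assumed naming, as seen in the "Color" field).
HDR_TRANSFERS = {
    "smpte2084": "PQ",
    "arib-std-b67": "HLG",
}


def describe_color(color_field):
    """Split a 'transfer (label) / primaries' string and flag HDR and wide gamut."""
    transfer_part, _, primaries_part = color_field.partition("/")
    # Drop the optional "(PQ)"-style label after the transfer name.
    transfer = transfer_part.split("(")[0].strip().lower()
    primaries = primaries_part.strip().lower()
    return {
        "transfer": transfer,
        "primaries": primaries,
        "is_hdr": transfer in HDR_TRANSFERS,
        "wide_gamut": primaries == "bt2020",
    }


print(describe_color("smpte2084 (PQ) / bt2020"))
print(describe_color("bt709 / bt709"))
```

An SDR video would typically report bt709 for both values, which this sketch flags as neither HDR nor wide gamut.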

Spears & Munsil quantization test#

I also found a video with an 8-bit and 10-bit quantization artifact test pattern here. When I played this video on the TV, the 10-bit pattern was completely smooth.

NOTE: In the image below, both 8-bit and 10-bit appear equally banded because of the 8-bit capture from my smartphone. In reality, the 10-bit pattern is completely smooth compared to the 8-bit pattern.
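Why a 10-bit ramp looks smoother than an 8-bit one can be shown with a little arithmetic. The sketch below quantizes a horizontal black-to-white ramp at both bit depths and reports how wide each visible band would be. The 3840-pixel width is an assumption (a 4K-wide pattern), and the function names are made up for illustration; this is not the Spears & Munsil pattern itself.

```python
def quantize_ramp(width, bits):
    """Map each pixel column of a 0..1 ramp to the nearest integer code value."""
    max_code = (1 << bits) - 1
    return [round(x / (width - 1) * max_code) for x in range(width)]


def band_stats(codes):
    """Return (number of distinct levels, average band width in pixels)."""
    distinct = len(set(codes))
    return distinct, len(codes) / distinct


for bits in (8, 10):
    levels, band_width = band_stats(quantize_ramp(3840, bits))
    print(f"{bits}-bit: {levels} levels, about {band_width} px per band")
# 8-bit:  256 levels, about 15.0 px per band
# 10-bit: 1024 levels, about 3.75 px per band
```

Bands 15 pixels wide are easy to spot on a smooth gradient, while bands under 4 pixels wide, with a quarter of the brightness step between them, blend together at normal viewing distance.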

Test 2: Connect to a 10-bit Monitor#

At this point, it’s clear that the Mac Mini can output an HDR10 signal when connected to an HDR-capable display. Next, I explored whether it can output a 10-bit signal (“Billions of colors”) to a non-HDR 10-bit display. For this, I connected the Mac Mini to a Philips Brilliance P272P7VU monitor.

10-bit PSD test#

For the first test, I displayed a 10-bit PSD file in “Preview”, which supports 10-bit files. If the M1 outputs a 10-bit signal, the gradients in the file should be smooth. Opening the file in “Preview” confirmed the 10-bit output, as the test pattern was completely smooth. I also opened the same file in another image viewer called “Pixea” and observed banding, most likely because “Pixea” doesn’t support 10-bit files. In the image below, the same file is open in “Preview” on the left and “Pixea” on the right.

NOTE: You might not see the difference between the two images below, as the image may have been converted to 8-bit during upload and publishing. On my machine, the left image is completely smooth while the right one displays banding.

Download the 10-bit PSD file from here
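If you would rather generate a high-bit-depth gradient yourself, the following is a minimal sketch that writes a 16-bit grayscale ramp as a PNG using only the Python standard library. It is not the PSD used in this test; the function names and the output file name are made up for illustration. Whether the ramp actually renders without banding still depends on the viewer and the display pipeline supporting more than 8 bits per channel.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_chunk(kind, data):
    """Build one PNG chunk: length, type, data, CRC over type + data."""
    body = kind + data
    crc = zlib.crc32(body) & 0xFFFFFFFF
    return struct.pack(">I", len(data)) + body + struct.pack(">I", crc)


def gradient_png16(width=1024, height=64):
    """Return the bytes of a 16-bit grayscale PNG with a left-to-right ramp."""
    # Each scanline starts with filter type 0, followed by big-endian 16-bit samples.
    samples = b"".join(
        struct.pack(">H", round(x / (width - 1) * 65535)) for x in range(width)
    )
    raw = (b"\x00" + samples) * height
    # IHDR: width, height, bit depth 16, color type 0 (grayscale),
    # compression 0, filter 0, interlace 0.
    ihdr = struct.pack(">IIBBBBB", width, height, 16, 0, 0, 0, 0)
    return (
        PNG_SIGNATURE
        + png_chunk(b"IHDR", ihdr)
        + png_chunk(b"IDAT", zlib.compress(raw, 9))
        + png_chunk(b"IEND", b"")
    )


def write_gradient(path, width=1024, height=64):
    """Write the ramp to disk, e.g. write_gradient("gradient16.png")."""
    with open(path, "wb") as f:
        f.write(gradient_png16(width, height))
```

Calling `write_gradient("gradient16.png")` produces a file you can open in a viewer that supports high bit depths and compare against one that does not.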

Spears & Munsil quantization test#

I also ran the “Spears & Munsil” quantization pattern on this monitor and observed that the 10-bit pattern had less banding. I ran the test with the latest version of VLC Player, 3.0.12.1, which is a native Apple Silicon binary.

Spears & Munsil quantization test shows the 10-bit pattern has less banding

SwitchResX test#

Using SwitchResX, I confirmed that the display was set to “Billions of colors” mode i.e. outputting a 10-bit signal.

Reach out if you have any questions!
