This is a written version of a presentation I gave live at the a16z Summit in November 2019. You can watch a video version on YouTube.

My day job is to think about the future, so I’ve been thinking a lot about what life will look like in the 2030s. What do we need to build? What social acceptance do we need? What business models do we need?

My daughter, Katie, is a high school junior, which means that when we hit the year 2030, she’ll be in her late 20s, heading into her mid-30s. In this presentation, I’ve imagined what a day in her life might look like in the year 2030.

Like most of us, Katie has a morning routine to get ready for the day. It typically starts with a piece of technology: the toilet. Now, it may not look like it’s changed much, but in 2030, like most other things in our house, it will have become a smart toilet. That means it measures 10 properties of your urine, including glucose, and it looks for health problems before they present themselves. Katie is training for a marathon, so once she’s done, she’s ready for her run. Sorry, even in the 2030s there is no train-for-a-marathon pill that you can buy, so she has to log her miles. She slips on her exercise gear, including a shirt made out of spider silk engineered from DNA. It’s got sensors sewn into it that pick up health stats like temperature, EKG, and heart rate. It also has actuators, so it can bump her on the wrists to give her directional cues: turn left, turn right, slow down.

As more and more of us in the world crowd into cities, we need to be smarter about the space that we use. And so while Katie is out running, her room is reconfiguring itself from “sleep mode” to “eat mode.” It’s also got “work mode” and “party mode.”

When Katie is back from her run, she’s ready for breakfast. This meal is personalized specifically for her. It understands her genetic makeup, as well as the makeup of her microbiome, so it knows exactly how much lactose her body can break down. It’s also tailored specifically for her training regimen. When you’re training for a marathon, you need more protein. The amount of carbs that you need depends on how many miles you’re running that day. And you need a baseline amount of iron to make sure you’re carrying enough oxygen in your blood. So we take her workout plan and her health stats and we send them to the roboticized kitchen, which is going to build a meal specifically for her. It will deliver the food to her autonomously, via a delivery robot.

Now, one intriguing place where she could get protein is with something called cultured meat: meat that we’re going to grow in a bioreactor.

After breakfast, Katie is ready to pick out her clothes for the day, which she does in conjunction with her smart mirror. Actually, the smart mirror helps her pick two looks: a physical-world look (her hair, makeup, and clothes) as well as a virtual-world look. As Katie spends more and more time in virtual meetings, the way her avatar looks has become as important as her physical-world look. The mirror can preview both the avatar look and the physical-world look, and she can swipe through a couple of options and select the look that she wants.

The last piece of her wardrobe is augmented reality contact lenses. In 2030, we’ll have tiny projectors and cameras built into contact lenses that will sync with nearby computers to basically give us a heads-up display on the real world. As people come into Katie’s field of view, she’ll get reminders about their names and the last time she had contact with them.

So now Katie is fueled up and ready to go to work. First, she consults her smart coach. Today, if you are a CEO, or a five-star general, or maybe director of the CIA, you probably have a chief of staff who follows you around to all your meetings and helps you be the best you. In 2030, Katie’s chief of staff is software. It listens to every meeting she has and gives her real-time feedback. For instance, her smart coach might say, “Hey, you might have wanted to ask a question in that situation, instead of stating an opinion.” The smart coach is also listening to every call, so it can automatically extract action items and block calendar slots for them.

Those calendar slots get booked depending on what kind of action it is, when it’s due, and how important it is. The smart coach knows Katie is a morning person. If you’re a morning person, it’s best to do your focused, analytical work in the morning and your creative work in the afternoon. But if you’re a night-owl, it’s actually reversed. The smart coach knows all of this and can block out all the right time slots on your calendar.
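Here is a minimal sketch of how that slot-picking rule might look in code. Everything in it is hypothetical: the `ActionItem` fields, the `PREFERRED_BLOCK` table, and the choice to sort by due date and then importance are my own assumptions for illustration, not a description of any real product.

```python
from dataclasses import dataclass

# Hypothetical preference table: which part of the day suits which kind of work.
# The night-owl row is simply the morning-person row reversed, as described above.
PREFERRED_BLOCK = {
    "morning_person": {"analytical": "morning", "creative": "afternoon"},
    "night_owl": {"analytical": "afternoon", "creative": "morning"},
}


@dataclass
class ActionItem:
    title: str
    kind: str        # "analytical" or "creative"
    due_day: int     # days from today
    importance: int  # higher means more important


def plan(items, chronotype):
    """Order items by due date, then importance, and tag each with a time block."""
    prefs = PREFERRED_BLOCK[chronotype]
    ordered = sorted(items, key=lambda item: (item.due_day, -item.importance))
    return [(item.title, prefs[item.kind]) for item in ordered]


items = [
    ActionItem("Sketch pitch storyboard", "creative", due_day=2, importance=2),
    ActionItem("Review pricing model", "analytical", due_day=1, importance=3),
    ActionItem("Draft follow-up email", "analytical", due_day=1, importance=1),
]

schedule = plan(items, "morning_person")
for title, block in schedule:
    print(f"{block:>9}: {title}")
```

A real coach would also have to respect existing meetings and slot lengths; the sketch only shows the "what kind, when due, how important" ordering and the chronotype lookup.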

Katie has a big day today. She works in sales, and they’ve been preparing for a big call, which she’ll do in virtual reality. (Yes, there are still sales people in the 2030s.) Katie works at a company called Influencers, Inc., which builds products for influencers to sell to their fans. Think of it as an entire supply chain that can get behind somebody who’s becoming popular on social media.

The team has been preparing by doing a bunch of customer research and product development. If you wanted to build custom clothes to sell to an influencer today, that task would probably be a lot of summer interns, Excel spreadsheets, focus groups, and conversations spanning the course of months. In the 2030s, this is all going to be automated with software in a matter of hours. We can ingest the influencer’s social media feeds to get a sense for her style, then we can ingest the social media feeds of her fans to figure out what’s popular in certain neighborhoods. We can then automatically generate candidate clothes to build and put those options in front of a human designer who can pick the best one and then put his or her own finishing touches on it. As a result, Katie’s team has three outfits that they’re ready to pitch to the influencer when they have an audience with her.

Katie wants her team to practice for the sales pitch, so they head into a VR conference room. Katie’s team is global: she lives in Portland, her clothing designer is in Paris, the clothing manufacturer is in Phnom Penh, Cambodia, and the data analyst is in Lagos, Nigeria. But the VR room will do real-time translation, so the team members all hear each other in their native languages. But the system doesn’t automatically translate cultural nuance, so the team gathers in the VR room to build their cultural understanding.

The influencer herself lives in Tokyo, but none of the team has spent enough time in Japan to understand the nuances of the culture. So the VR room can simulate the influencer talking with various tones of voice and body language so the team can figure out her state of mind. Is she into it? Is she not into it? Are we in the right price range? Does she like these designs? When she says yes, does she mean “I love it and I want it,” or does she mean, “yes, I understand you, but I disagree”? Katie’s team practices all these things in VR simulation, so that when they meet the real influencer they have a much higher sense of cultural understanding.

When it’s time for the actual call, they do that in VR, as well. That way they can view the clothes in 3D, preview how the supply chain would work, and assess how they would actually manufacture these clothes. While this is happening, Katie’s AR contact lenses can superimpose an engagement meter over everybody in the room. When somebody’s engagement level falls too low, Katie knows to prompt them to lean in and speak up.

So the influencer seems pretty excited about the clothes, but she has some ideas of her own. Instead of just incorporating that feedback, the team offers to run an instant poll. If we were trying to set up an A/B test with a global audience today, this might take weeks. But in the 2030s, software automates this process so it can be completed the same afternoon. The data scientist finds people who have purchased clothes before and their lookalikes, that is, people who are likely to buy the clothes as well. Influencers, Inc.’s software creates and delivers an A/B test within a social media app and gives her fans the opportunity to provide feedback. In this way, the influencer can reach hundreds of her fans instantaneously. In turn, the fans can get paid instantly for giving this feedback. They can choose to get paid in a cryptocurrency like Bitcoin or Libra, or they can choose to get paid in Influencer Coin. If fans are convinced this influencer is only going to get more popular and her Influencer Coin appreciates over time, this gives the fans a way to participate in the upside of an influencer’s rising popularity. It’s similar to the way startup employees get to participate in the upside of their startups through equity.

Because we’re doing this with cryptocurrencies powered by the blockchain, we can do this globally and instantaneously. As soon as the fan gives feedback, they get rewarded. Once the feedback is returned, software automatically updates all of the documents associated with getting the supply chain to build the clothing, starting with the sales contract. The sales contract goes to the influencer, she sees the results of the instant poll, and she’s excited to see that a lot of her fans agree with her own taste. She digitally signs the sales contract.

That digital signature unlocks a chain reaction of smart contracts that are built and distributed throughout the supply chain. Everybody who’s going to have a hand in creating these clothes, from the fabric supplier to the button supplier to the dye supplier, will get a smart contract that stipulates the terms of their participation. All of these smart contracts are essentially pieces of software that tie the supply chain together, replacing the handwritten or typed or word-processed documents that we’ve been using for centuries. Once the orders start coming in, the suppliers will get paid as soon as they deliver according to the terms of the smart contract (rather than waiting for weeks or months to submit invoices, get human approvals, wait for the foreign currency exchange to take place, and then wait for the local currency payment to arrive), and they too can choose to get paid in Bitcoin or Influencer Coin. In this way, the supply chain can also participate in the upside of the influencer’s growing popularity.
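To make "paid as soon as they deliver" concrete, here is a toy sketch in plain Python. It is not a real smart contract and doesn’t touch any blockchain; the `SupplierContract` and `Ledger` classes, the amounts, and the `confirm_delivery` function are all made up for illustration. The only point is the rule itself: confirmation of delivery releases payment immediately, and only once.

```python
from dataclasses import dataclass, field


@dataclass
class SupplierContract:
    supplier: str
    amount: float       # hypothetical amount, units left abstract
    currency: str       # the supplier's chosen payout currency
    delivered: bool = False
    paid: bool = False


@dataclass
class Ledger:
    payments: list = field(default_factory=list)

    def pay(self, supplier, amount, currency):
        # Stand-in for an on-chain transfer; here we only record it.
        self.payments.append((supplier, amount, currency))


def confirm_delivery(contract, ledger):
    """Mark the delivery and release payment right away, exactly once."""
    contract.delivered = True
    if not contract.paid:
        ledger.pay(contract.supplier, contract.amount, contract.currency)
        contract.paid = True


ledger = Ledger()
fabric = SupplierContract("fabric supplier", 1200.0, "Influencer Coin")
buttons = SupplierContract("button supplier", 150.0, "Bitcoin")

confirm_delivery(fabric, ledger)
confirm_delivery(fabric, ledger)  # a repeated confirmation does not pay twice

print(ledger.payments)
print("buttons paid yet?", buttons.paid)
```

The invoice, approval, and currency-exchange steps from today’s process simply don’t appear: the payment condition is part of the contract’s own logic.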

Back at Influencers, Inc., Katie’s excited to have closed a new influencer contract. She’s going to celebrate with her favorite activity, which is drone racing. She heads to the mall, where a drone racing stadium has replaced the former big box retailers.

In this new style of entertainment, audience participation is woven into the game itself. As fans cheer for their favorite pilots, the volume and coordination of their cheers determines whether the drone pilots get a double or triple point bonus as they fly their craft through obstacle rings. Crowd participation influences the way the game unfolds. Katie’s pilot, Wonder Woman, comes in second. Because she’s such a high finisher, when Katie logs into the drone racing game on her phone, her drone has new capabilities.

After the drone race, Katie stops by a store called the Bionic Suit Store. Katie’s helping a friend move over the weekend, so she needs to get fitted for an exoskeleton that will help her move bigger and bulkier items. She stops by the store to get calibrated and comfortable with the controls. When she’s ready, she schedules the suit to be delivered to her friend’s house when it’s time to move on Saturday morning.

Then Katie’s ready to go home, so she summons an auto-pod. Her city, Portland, has recently replaced their light rail system with an autonomously summoned mass transit system. She can summon a ride just like a Lyft. The auto-pod is a private, one- or two-person pod that’s half the width of a normal car. It turns out that if you do the math, packetizing mass transit in this fashion is an excellent idea, just like packetizing data for the internet was. You can actually move more people from point A to point B in these private pods, even during rush hour, compared to trains.

Now Katie is back home and ready for some sleep. Before bedtime, she’s got one shopping errand and two important connections to make. Katie offered to host the after-move party at her place, and so she needs to rent some furniture. In particular, she needs to have a cocktail bar delivered for the weekend. She fires up her AR shopping app to see what’s available. The AR contact lenses render exactly what the furniture will look like in her space, so she knows if it’ll fit and if she likes the look and feel. She scrolls through some of the options and picks one to be delivered for the party.

Then she has two important connections to make. The first is with her pet kitty. Sadly, kitty’s been having tummy troubles. As soon as Katie’s AR contact lenses see the cat, they remind Katie that kitty is on day 5 of a 10-day course of antibiotics. The AR lenses remind Katie to mix the antibiotics in with the cat’s food. This is a CRISPR-engineered pet, so the cat also glows in the dark. This is awesome because the non-glow-in-the-dark kitty was very good at hiding. Now, jellyfish genes that produce a protein called the green fluorescent protein (GFP) are integrated with the cat. No more hiding.

One more important connection. Her grandfather lives in San Francisco; he loves living independently. But lately, as he ages, he’s been having trouble doing things like getting up off the couch or bending over to pick up a box. So he’s recently gotten himself a robotic tail, inspired by a seahorse, that knows what he’s trying to do and automatically counterbalances to make it easier for him to get up and down stairs, pick up boxes, and get off the sofa. The robotic tail needs to be calibrated every few weeks, which involves changing the counterweights in each of the vertebrae. Katie offers to do it for him.

Today, a lot of us help our parents digitally by logging onto their laptops and helping them with Gmail problems via remote desktop. But in 2030, Katie does that with remote robotic arms. The kitchen robot that’s in her grandfather’s kitchen has a remote control mode, so Katie can “dial in” and control the arms.

There’s one last thing to do before Katie goes to sleep. She’s been having these incredibly vivid dreams in which people are wearing awesome outfits. She wants to remember the details of those dreams, so she can share them with her coworkers. Just before she falls asleep, she slips on a headband that will record her dreams in a way that she can share with her friends.

***

So what have we seen? We’ve seen better health through personalized food, richer data sets, and shorter feedback loops. We’ve seen a flipped supply chain that allows people with big fan bases to actually make their own lifestyle brands. We’ve seen easier ad hoc global collaboration that spreads the benefits of ownership much farther than today. We’ve seen tech that gives us superpowers, as well as a deeper empathy for cultures that we haven’t experienced before. And we’ve seen a set of new experiences, such as going to a mall to watch drone racing or having a glow-in-the-dark pet.

I know that predicting the future is a bit of an uncertain enterprise. I may have gotten some of the details of the specific products I’ve mentioned wrong, but I’m pretty confident in the direction of these products. That’s because I’ve seen a lot of work today, either in a startup form or corporate innovation form, that points in this direction.

So: how will we get to this 2030 vision? How do we build it? What technology do we need to build? What business models do we need to build? What privacy frameworks do we need to create? Let’s go back to the beginning of Katie’s imagined day.

For starters, a smart toilet is part of a broad trend towards longitudinal health data. That’s a fancy way of saying: let’s use cheap sensors to get your health data over time and use that data to predict health outcomes, such as kidney stones or diabetes. So what data do we have? We have the data that tracks your urine. We have smart speakers that listen to changes in your voice over time. We’ve got what you look like, in the form of selfies. So we have lots of data. We also have algorithms that can take this data and predict health outcomes. We can use selfies to predict skin cancer, we can use changes in your voice to predict depression or early-onset Alzheimer’s.

What’s missing is a business model, a privacy model, and an ethical framework to tie these two pieces together. Imagine you’re using Instagram or your smart mirror and an alert pops up that says, “Hey, do you want me to send all these pictures to your doctor?” I’m not sure I want to do that. Would it be any better if it offers to send it to an insurance company? It’s not clear what the business model is that ties these two things together.

Let me give you an edge case that shows how tricky this will be to design. Katie takes selfies with her BFF all the time—imagine that Katie’s insurance will pay for the skin cancer detection service, based on her selfies. Now imagine that her BFF’s insurance doesn’t cover that. What happens when the algorithms spot potential melanoma in her BFF? How do we design the right business models, privacy framework, and ethical framework to get the best health outcomes to everybody? It’s going to be tricky.

Next, how do we get to spider silk shirts? This is an amazing biological material. Ounce for ounce, this is lighter than cotton, tougher than Kevlar, not derived from petroleum, and biodegrades naturally. So why aren’t we all wearing spider silk shirts today? The short answer is it’s way too expensive. Spiders themselves don’t generate that much usable silk. You can’t farm it, because if you put enough spiders close together they start eating each other. So: bioengineering to the rescue. Using techniques we’ve recently discovered, like CRISPR, we can copy and paste DNA that codes for spider silk proteins and stick them inside E. coli. Then we can turn E. coli into a factory to build the materials that we want.

Until very recently, a problem with this approach was that the DNA sequences that code for spider silk protein are very long and repetitive. So if you just do a copy and paste of that long, repetitive sequence, and then stick it inside E. coli, E. coli will break the sequences down and you won’t get the proteins you want. Recently, a team at Washington University in St. Louis figured out a way to recode the DNA into shorter sequences in a way that E. coli won’t reject. They now have E. coli producing spider silk proteins that, when combined with other ingredients, make spider silk fabric. This is a perfect illustration of where we are with bioengineering today. We’ve discovered the basic techniques, like copying and pasting DNA. And now we’re working on the higher-level engineering design principles that allow us to do things nature can’t, and to do them more cost-effectively.

How do we get to robotic furniture? No technology breakthrough needed—you could actually buy this today if you wanted from a company called Ori Living. But this does illustrate a challenge, which is that our homes are turning into data centers. I’m guessing most of us do not want a second full-time job as a data center engineer, managing this fleet of software and equipment that we have in our house. We’re going to need a breakthrough in usability design that makes the experience of upgrading software for all of the devices in our house—every light bulb, every plug, every shirt—simpler. We’re definitely not there today; you probably have 10 devices that are all prompting you for updates. That’s not going to work when we have hundreds of devices in our houses.

How do we build customized foods? In particular, how do we get to the protein in these meals? One intriguing possibility is what’s called lab-grown, or engineered, or slaughter-free meat. Here’s the basic idea: We take a tissue sample from a pig or a cow, harvest its stem cells and put those stem cells in a bioreactor, a nutrient bath. When cells are in a healthy environment, they’ll multiply. If we can do that, we have the possibility to grow the meat that we want without raising and slaughtering animals. Growing meat in this way, in a bioreactor instead of on the field, would have several advantages. One, it’s definitely better for the environment. (Little known fact about global warming: 17 percent of all greenhouse gas emissions are caused by cows burping and farting.)

Obviously, it’s better for the animal. And though the jury’s still out, we suspect that it would be better for us. It’s likely easier to keep this supply chain cleaner than managing the cold chain from a slaughterhouse to your refrigerator.

If we could do this, what should we do with it? We should probably start with the species that are under the most pressure today, like the overfished Pacific bluefin tuna. There’s potential to grow it, instead of fishing it. But we don’t have to stop there. We aren’t constrained by animals that we’ve encountered. From basic biology design principles, we could engineer meats that are nutritious and delicious from animals that have never existed before. That’s the potential of bioengineering.

Next: the smart contact lens. It turns out that the VR supply chain has already done a great job of shrinking projectors and cameras into very, very small form factors. So the questions are: Can we shrink them even smaller? Can we shrink them into a form that we could embed into a transparent flexible material, like what contact lenses are made of? And can we get them to a high enough resolution that, even though they’re tiny and embedded in a contact lens, we can see full-color text and images? If we can do all that, how do we add power and data? Believe it or not, there are already tons of companies working on each one of these problems.

There’s a Silicon Valley startup called Mojo Vision that demonstrated one piece of this puzzle, the high-resolution tiny projector. It’s made out of micro LEDs and is half a millimeter across. It has a pixel density of 14,000 pixels per inch. The latest iPhone clocks in at just under 500. So this thing has roughly 30 times the pixel density of the iPhone and is half a millimeter wide. That’s like a 10th of the size of one of the black dots on a ladybug. But now that we’ve gotten it small enough and pixel-dense enough, there are still other problems. Can we put it into a contact lens? Can we get it power and data? There’s incredible engineering going into packaging all of these components in contact lens form.
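For anyone who wants to check the arithmetic, here is a quick back-of-the-envelope calculation using only the figures quoted above. The one assumption is rounding the iPhone’s "just under 500" up to 500, which makes the ratio a slight underestimate.

```python
MM_PER_INCH = 25.4

display_ppi = 14_000     # pixel density quoted for the micro LED display
phone_ppi = 500          # "just under 500", rounded up (assumption)
display_width_mm = 0.5   # half a millimeter across

# How many times denser the tiny display is than the phone screen.
density_ratio = display_ppi / phone_ppi

# How many pixels fit across half a millimeter at that density.
pixels_across = display_ppi * display_width_mm / MM_PER_INCH

print(f"density ratio: about {density_ratio:.0f}x")
print(f"pixels across the display: about {pixels_across:.0f}")
```

So even at half a millimeter wide, the display still has a few hundred pixels across, which is why it can plausibly show readable text.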

How do we build a smart coach? If you think about what we want out of a smart coach that we’re not getting from Alexa today, it’s the ability to understand us much better and answer higher-level questions. There is already an analog device that weighs about 3 pounds and draws about 20 watts of power that can answer those questions. And it can answer those questions despite the fact that it has enormous handicaps compared to modern computers. Its components are a million times slower, and it has a terrible memory subsystem. That device is our brain.

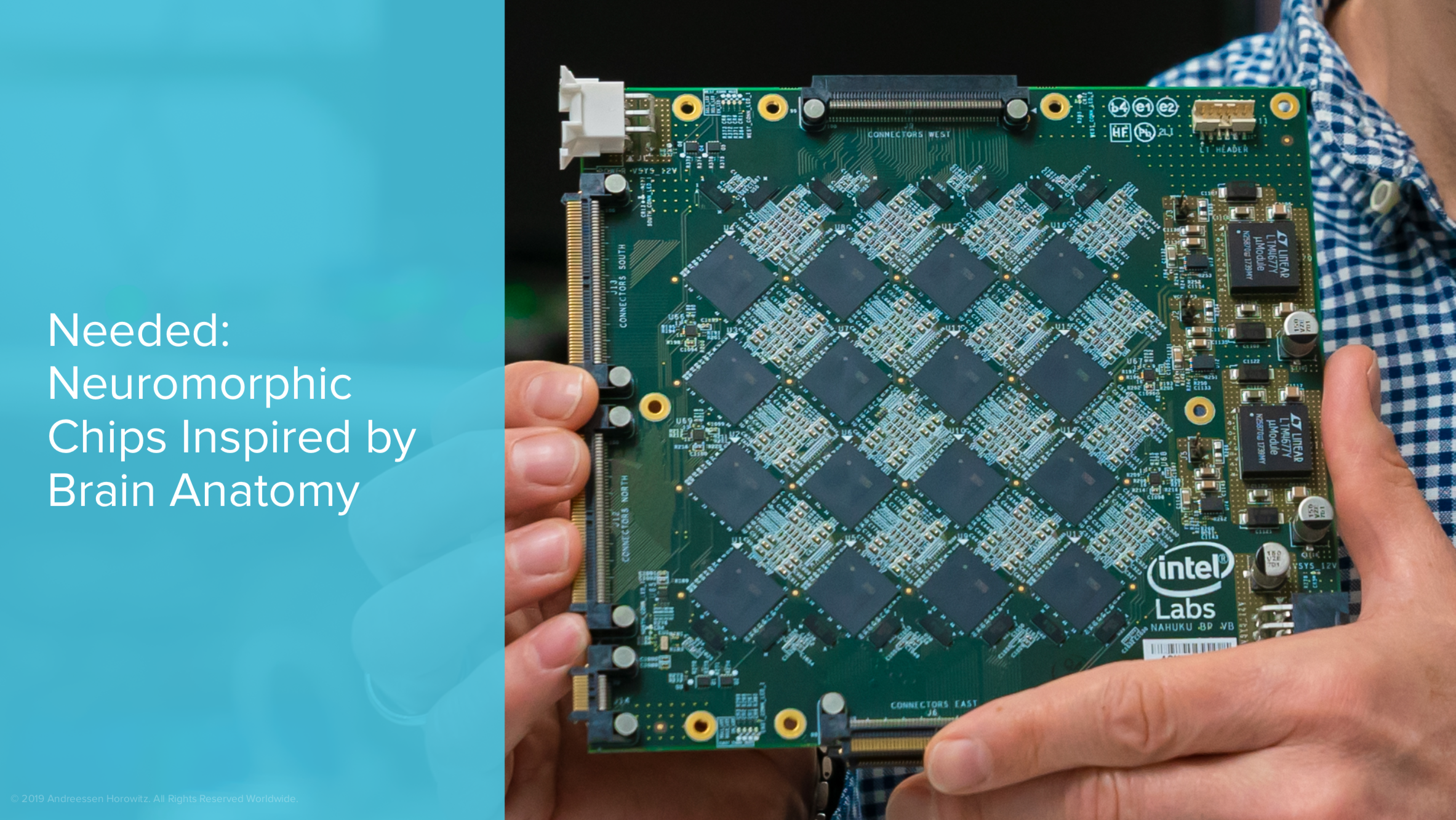

It’s possible that we can build things that are more explicitly brain-like to achieve a smart coach. And it’s certainly possible that we can get there on our current trajectory, which is more data fed to better machine learning algorithms. But there’s a potential shortcut on the hardware side, called a neuromorphic chip. It explicitly models the brain—how neurons are connecting to other neurons and adjusting their weights over time based on stimulus. Instead of using logic gates and zeros and ones, neuromorphic chips have connections with neurons that have different strengths over time. From a hardware point of view, we might be able to build a more brain-like computer.
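To give a feel for "adjusting their weights over time based on stimulus," here is a tiny sketch of a Hebbian update, a classic textbook learning rule in which a connection gets stronger when the neurons on both ends are active together. I’m using it purely as an illustration; I’m not claiming any particular neuromorphic chip works this way, and the `hebbian_step` function, the network size, and the learning rate are all assumptions.

```python
def hebbian_step(weights, pre, post, rate=0.1):
    """Strengthen weights[i][j] when input neuron i and output neuron j fire together."""
    return [
        [w + rate * pre[i] * post[j] for j, w in enumerate(row)]
        for i, row in enumerate(weights)
    ]


weights = [[0.0, 0.0], [0.0, 0.0]]  # 2 input neurons x 2 output neurons
stimulus = [1.0, 0.0]               # only the first input neuron fires
response = [0.0, 1.0]               # only the second output neuron fires

# Repeat the same stimulus a few times; one connection strengthens each time.
for _ in range(3):
    weights = hebbian_step(weights, stimulus, response)

print(weights)
```

Only the connection between the two neurons that fired together grows; every other connection stays at zero. The strength of each connection, rather than a stored zero or one, is what carries the information.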

On the software side, we also have a lot to learn from brains—even teeny brains that are young and relatively underdeveloped. For example, there’s a cognitive psychology experiment that has been conducted with toddlers. An experimenter is hanging up clothes in front of the toddler and drops a clothespin.

The baby reaches over and hands the clothespin back to the experimenter. No prior training, the toddler has never been in an experience like this, and yet, he’s figured out that the experimenter is going to need the clothespin to solve his problem. Not only that, the baby decides he’s going to help, even though there’s no promise of reward. Humanity for the win.

There’s so much going on in the base software of this toddler that we would love to reverse engineer and put into our smart coach. The ability to form what psychologists call a theory of mind, in other words, a prediction of other people’s desires, and goals, and emotions. The ability to break a task down into sub-tasks, without seeing thousands of similar examples. And third, and maybe most importantly, the built-in desire to help when it’s actually helpful. There’s a fascinating variant of this experiment in which the experimenter just throws the clothespin on the floor and doesn’t look at it. You know what most babies do in that situation? They don’t do anything. They don’t pick up the clothespin, because they’ve somehow figured out the difference between “needs help” and “doesn’t need help.”

If you’ve ever been in a room where the smart speaker suddenly interrupts your conversation because it couldn’t figure out the difference between “needs help” and “doesn’t need help,” you understand how valuable this is.

The next 2030 tech is the auto-pod. Most of this technology already exists because it’s a subset of what we need to build for self-driving cars. Because the auto-pods have their own right of way, they don’t have to deal with crazy pedestrians and bad drivers. They have a dedicated right-of-way just like the light rail does today. What we will need, though, is a lot of people to say yes. We need regulators, mayors, regional transit planning authorities, state and federal regulators to all say yes to this technology.

We’re still doing a lot of shopping in the 2030s, mostly online, of course. One thing we need is something called post-quantum cryptography. You might have read Google’s recent announcement that they have a quantum computer that has achieved quantum supremacy. In other words, this quantum computer can do things that a normal computer might take 10,000 years to do. One of the things that people have been itching for quantum computers to do is brute force attack the encryption keys that protect all of our messages and credit card numbers today. So, we’re on the hunt for new algorithms that will withstand brute force attacks against crypto algorithms.

The good news is that a worldwide community of cryptographers has been working hard on this problem. And NIST, the National Institute of Standards and Technology, has actually organized a Survivor-like process to identify all the candidate algorithms and successively vote them off the island, until we get to the standard named post-quantum crypto. They’ve been running this process since 2015 and we’re already in the second round of votes. We’ve gone from 69 candidates to 26, and the hope is that we’ll standardize sometime in the mid-2020s.

The last technology I want to talk about is admittedly the most speculative and potentially farthest out of all the products I’ve talked about: the dream recorder. But I want to share two amazing research projects that demonstrate we’re on our way, we have a path forward. If you’re going to record dreams, you need to interpret brain signals, the brain’s electrical activities and turn that into audio and video. Let’s take each of them in turn.

Researchers at Columbia University led by Nima Mesgarani have literally turned brainwaves into speech. They found epilepsy patients who were already getting brain surgery. They inserted probes into the brain to capture brain activity and had the patients listen to people talking. Then they used the resulting brainwaves to train a voice synthesizer, exactly the same thing that’s in Alexa today. It’s an audio stream from your brainwaves.

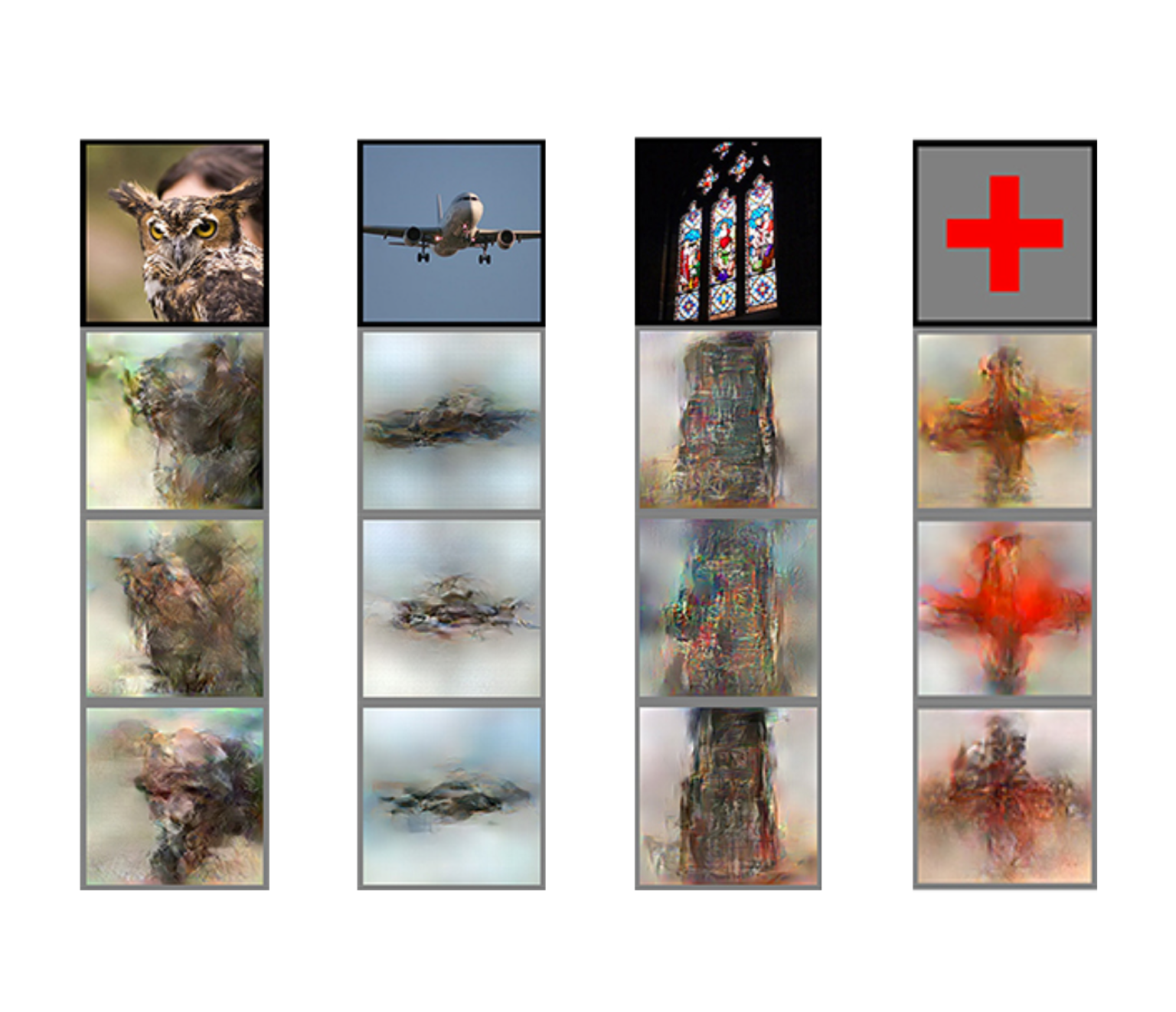

Now, could we do the same thing with video? Researchers in Japan published a paper this April doing exactly that. Instead of epilepsy patients with probes inserted into their brains, they took brain scans of people inside fMRI machines and showed them lots and lots of images. It might be pictures, it might be a plus sign, it might be letters of the alphabet. They recorded the brain activity, then used a popular machine learning technique called a deep generator network to build an image generator. It’s kind of the same technology that’s behind deepfakes today. The resulting images look kind of impressionist. Still, it’s amazing that we’ve gotten this far. It suggests that one day we might be able to interpret the brain in a way that we could literally record your dreams.

From this vantage point, when we haven’t built those technologies yet but are on our way there, the future is amazing. I’m ready for personalized food. I’m ready for a healthier me. I’m ready for a healthier planet. I’m ready for a smart coach. I’m ready for AR contact lenses. I’m ready to ride the auto-pod. And I’m ready for superpowers at work. I hope this presentation has made you more excited about the future that is coming.

***

The views expressed here are those of the individual AH Capital Management, L.L.C. (“a16z”) personnel quoted and are not the views of a16z or its affiliates. Certain information contained in here has been obtained from third-party sources, including from portfolio companies of funds managed by a16z. While taken from sources believed to be reliable, a16z has not independently verified such information and makes no representations about the enduring accuracy of the information or its appropriateness for a given situation.

This content is provided for informational purposes only, and should not be relied upon as legal, business, investment, or tax advice. You should consult your own advisers as to those matters. References to any securities or digital assets are for illustrative purposes only, and do not constitute an investment recommendation or offer to provide investment advisory services. Furthermore, this content is not directed at nor intended for use by any investors or prospective investors, and may not under any circumstances be relied upon when making a decision to invest in any fund managed by a16z. (An offering to invest in an a16z fund will be made only by the private placement memorandum, subscription agreement, and other relevant documentation of any such fund and should be read in their entirety.) Any investments or portfolio companies mentioned, referred to, or described are not representative of all investments in vehicles managed by a16z, and there can be no assurance that the investments will be profitable or that other investments made in the future will have similar characteristics or results. A list of investments made by funds managed by Andreessen Horowitz (excluding investments for which the issuer has not provided permission for a16z to disclose publicly as well as unannounced investments in publicly traded digital assets) is available at https://a16z.com/investments/.

Charts and graphs provided within are for informational purposes solely and should not be relied upon when making any investment decision. Past performance is not indicative of future results. The content speaks only as of the date indicated. Any projections, estimates, forecasts, targets, prospects, and/or opinions expressed in these materials are subject to change without notice and may differ or be contrary to opinions expressed by others. Please see https://a16z.com/disclosures for additional important information.
